How Modern AI and Computer Power Impact System Performance

This article examines how advanced AI affects the speed, memory use, and overall efficiency of computer systems. Covering processing speed, memory management, data handling, and energy consumption, it traces the relationship between AI and system performance and highlights the role of hardware and software design in optimizing AI-driven tasks.

The discussion covers a wide range of topics, including how different processor types affect AI algorithm execution, the importance of memory capacity for complex models, the impact of data volume on performance, and the need for energy-efficient AI systems. Real-world examples illustrate the tangible benefits and challenges associated with integrating AI into various sectors.

Impact on Processing Speed

Modern AI systems rely heavily on the computational power of computers. Advances in computer architecture and processor design have significantly impacted the speed at which these systems can perform tasks, leading to more efficient and powerful AI solutions. This enhanced speed is crucial for real-world applications, from image recognition to natural language processing.

Impact of Computer Architecture

Computer architecture plays a pivotal role in how fast AI tasks run. Increased transistor density and more efficient circuit layouts let designers place more cores and arithmetic units on each chip and reduce latency. Because clock speeds have largely plateaued, most recent gains come from this added parallelism rather than from faster clocks. Specialized architectures designed specifically for AI workloads optimize performance further.

Different Processor Types and Performance

Different processor types have different strengths when running AI algorithms. Central Processing Units (CPUs) are general-purpose processors; they can run AI workloads, but usually more slowly than specialized processors. Graphics Processing Units (GPUs), designed for parallel processing, excel at the large matrix computations at the heart of many AI algorithms. Tensor Processing Units (TPUs) are purpose-built for machine learning and can deliver faster training and inference than general-purpose processors on the tensor operations they are designed for.

Field-Programmable Gate Arrays (FPGAs) can be configured to accelerate specific AI algorithms, providing a degree of customizability.

Role of Parallel Processing

Parallel processing is a cornerstone of modern AI systems. AI algorithms frequently involve many independent computations that can be performed concurrently; for example, each element of a matrix product can be computed without waiting for the others. This parallelism lets AI systems process large datasets and complex models much faster. Multi-core CPUs, GPUs, and TPUs are all designed to exploit parallel processing to accelerate AI tasks.
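As a minimal illustration, the Python sketch below applies data parallelism to a dot product, the basic operation inside matrix multiplication: the vectors are split into independent chunks, the partial sums are computed concurrently, and the results are combined at the end. The function name `chunked_dot` and the chunking scheme are illustrative assumptions, not any library's API. Python threads are constrained by the global interpreter lock, so this shows how the work decomposes rather than delivering a real speedup; GPUs and TPUs apply the same kind of decomposition across thousands of hardware units.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked_dot(a: list[float], b: list[float], workers: int = 4) -> float:
    """Dot product computed as independent chunks combined at the end."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    n = len(a)
    step = max(1, -(-n // workers))  # ceiling division: elements per chunk
    bounds = [(lo, min(lo + step, n)) for lo in range(0, n, step)]

    def partial_sum(span: tuple[int, int]) -> float:
        # Each chunk reads only its own slice, so chunks never wait on each other.
        lo, hi = span
        return sum(x * y for x, y in zip(a[lo:hi], b[lo:hi]))

    # Map chunks onto worker threads, then reduce the partial sums.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum, bounds))


if __name__ == "__main__":
    print(chunked_dot([1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0], workers=2))  # 70.0
```

The final sum is the "reduce" step; on real accelerators the same split-then-combine pattern runs in hardware.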

Performance Comparison of AI Models

AI models differ widely in how much computation they need. Deep learning models, with their many layers and parameters, often require significant computational resources and specialized hardware to achieve acceptable inference times. Classical machine learning models, such as decision trees and support vector machines, are generally simpler in structure and may perform adequately on less powerful hardware, though this depends on the specific model and its complexity.

Training time grows with the amount of training data and the complexity of the algorithm, while inference speed depends mainly on the model's size and architecture and on the hardware it runs on.

Impact of Hardware Improvements on Inference Time

Improvements in hardware directly translate to faster inference times for AI systems. Increased processing power, lower latency, and specialized architectures allow AI models to make predictions and decisions more rapidly. The resulting decrease in inference time is critical for real-time applications, enabling faster responses and more seamless interactions. This is particularly important in applications like autonomous vehicles or real-time image recognition.
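Because real-time systems care about worst-case as well as typical response times, inference speed is usually reported as latency percentiles. The sketch below assumes a hypothetical `predict` callable standing in for a model; it times repeated calls with `time.perf_counter` and reports the median (p50), 95th-percentile (p95), and maximum latency in milliseconds.

```python
import math
import statistics
import time
from typing import Any, Callable, Sequence


def measure_latency_ms(predict: Callable[[Any], Any],
                       inputs: Sequence[Any],
                       warmup: int = 3) -> dict[str, float]:
    """Time each call to `predict` and summarize per-request latency in ms."""
    if not inputs:
        raise ValueError("need at least one input")
    for x in inputs[:warmup]:
        predict(x)  # untimed warm-up: caches, lazy initialization
    samples = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_rank = math.ceil(0.95 * len(samples))  # nearest-rank percentile
    return {
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_rank - 1],
        "max_ms": samples[-1],
    }


if __name__ == "__main__":
    def fake_model(n: int) -> int:
        # Hypothetical stand-in for a model: any callable taking one input.
        return sum(i * i for i in range(n))

    print(measure_latency_ms(fake_model, [50_000] * 50))
```

Running the same measurement before and after a hardware change gives a direct view of the improvement; the p95 figure is the one that matters for applications where an occasional slow response is unacceptable.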

Comparison Table of AI Models

| Model Type | Architecture | Typical Processing Time per Inference (ms) | Typical Hardware Requirements |
|---|---|---|---|
| Deep Learning (Convolutional Neural Network) | CNN | 10-500 | GPU with many CUDA cores and high memory bandwidth |
| Deep Learning (Recurrent Neural Network) | RNN | 20-1000 | GPU with high memory bandwidth; TPU for some tasks |
| Classical Machine Learning (Support Vector Machine) | SVM | 1-100 | Multi-core CPU with ample memory |
| Classical Machine Learning (Decision Tree) | Decision Tree | 0.1-10 | CPU; relatively low memory requirements |

Memory Capacity and Management

Modern AI models, particularly deep learning architectures, often demand substantial memory. Their complexity, characterized by numerous layers and parameters, directly drives the memory required for training and execution, so efficient memory management is crucial for developing and deploying sophisticated AI systems.

AI models, especially deep learning ones, need a substantial amount of memory for storing model weights, activations, and intermediate results during training and inference. Optimized memory management strategies directly impact training speed, model accuracy, and overall system performance.

Relationship Between Memory Capacity and AI Model Complexity

The complexity of an AI model is intrinsically linked to its memory requirements. More complex models, typically with deeper architectures and a larger number of parameters, demand proportionally more memory. For instance, a large language model with billions of parameters necessitates significantly more memory than a simpler model with a few million parameters. This relationship is crucial to consider when designing and deploying AI systems, as inadequate memory can lead to bottlenecks and reduced performance.
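A rough lower bound on memory follows directly from the parameter count, since each parameter occupies a fixed number of bytes set by its numeric format. The sketch below uses the standard sizes (4 bytes for 32-bit floats, 2 for 16-bit floats, 1 for 8-bit integers); the helper name is hypothetical. It counts weights only, so activations, gradients, optimizer state, and framework overhead come on top.

```python
# Bytes per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Memory to hold the weights alone, in gigabytes (10**9 bytes).

    Ignores activations, gradients, optimizer state, and framework
    overhead, so real requirements are higher, especially in training.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    print(weight_memory_gb(7_000_000_000, "fp16"))  # 14.0
    print(weight_memory_gb(5_000_000, "fp32"))      # 0.02
```

By this estimate, a hypothetical 7-billion-parameter model in 16-bit floats needs about 14 GB just for its weights, while a 5-million-parameter model in 32-bit floats needs about 20 MB.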

Methods for Optimizing Memory Usage in AI Systems

Several methods can be employed to optimize memory usage in AI systems. These include techniques like model quantization, where the precision of numerical values in the model is reduced to conserve memory. Another strategy is pruning, which involves removing less important connections or parameters from the model, thus reducing the model’s size and memory footprint. Furthermore, techniques such as gradient checkpointing can be used to reduce the amount of memory required for storing activations during backpropagation.
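Quantization can be sketched with symmetric per-tensor INT8 quantization, one common scheme among several: each weight is divided by a scale factor, rounded, and stored as a signed 8-bit integer, cutting storage from 4 bytes (32-bit float) to 1 byte per value. The function names are illustrative; production frameworks add refinements such as per-channel scales, calibration data, and integer arithmetic kernels.

```python
from array import array


def quantize_int8(weights: list[float]) -> tuple[array, float]:
    """Symmetric per-tensor INT8 quantization: w is approximated by q * scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # map largest |w| to 127
    # Round to the nearest integer and clamp to the signed 8-bit range.
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q: array, scale: float) -> list[float]:
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(w)
    print(array("f", w).itemsize * len(w), "bytes as float32")  # 16
    print(q.itemsize * len(q), "bytes as int8")                  # 4
    print(dequantize(q, scale))
```

The rounding error per weight is at most half the scale factor, which is why the accuracy cost is often small when weights have a moderate range.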

Examples of How Memory Limitations Affect AI Performance

Memory limitations can severely impact AI performance. Training a large language model on a machine with insufficient RAM, for instance, can force frequent swapping between RAM and disk, slowing training dramatically. Similarly, running a model on a device with limited memory can trigger out-of-memory errors or leave the model unable to process large or complex inputs.

Impact of RAM and Storage Capacity on the Training and Execution of AI Models

Both RAM and storage capacity significantly affect the training and execution of AI models. Sufficient RAM is crucial for holding the model’s parameters, activations, and gradients during training and inference. Insufficient RAM can lead to performance degradation due to frequent swapping between RAM and storage, a process that is considerably slower. Storage capacity, on the other hand, is essential for storing large datasets, pre-trained models, and intermediate results during training.

The sheer volume of data required for training complex AI models necessitates substantial storage capacity.

Comparison of Memory Management Strategies

| Strategy | Efficiency Gains | Resource Requirements and Costs | Examples of Applicable AI Models |
|---|---|---|---|
| Model Quantization | Reduces memory footprint significantly (e.g., 4x when converting 32-bit floats to 8-bit integers) | Hardware or kernel support for low-precision arithmetic; possible accuracy loss | Image recognition models, natural language processing models |
| Model Pruning | Reduces model size and complexity | Fine-tuning to recover accuracy; unstructured sparsity may not speed up inference without sparse-aware kernels | Deep neural networks, convolutional neural networks |
| Gradient Checkpointing | Reduces memory consumption during backpropagation | Extra compute to recompute discarded activations during the backward pass | Recurrent neural networks, generative adversarial networks, deep transformers |
| Memory Mapping | Efficiently manages large datasets | Requires appropriate file system support | AI models processing large image or video datasets |
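Memory mapping can be illustrated with Python's standard `mmap` module. In this sketch, a dataset of 32-bit floats is stored as a flat binary file (an assumed layout chosen for simplicity), and a slice is read through a read-only memory map so that the operating system pages in only the parts of the file actually accessed. The function and file names are hypothetical.

```python
import mmap
import os
import struct
import tempfile

ITEM = struct.Struct("<f")  # one little-endian float32 (4 bytes)


def write_floats(path: str, values: list[float]) -> None:
    """Store values as a flat binary array of little-endian float32."""
    with open(path, "wb") as f:
        f.write(struct.pack(f"<{len(values)}f", *values))


def read_slice(path: str, start: int, count: int) -> list[float]:
    """Read `count` values from index `start` through a read-only memory map.

    The OS loads only the file pages this slice touches, so the whole
    dataset never has to fit in RAM. (mmap cannot map an empty file.)
    """
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        offset = start * ITEM.size
        chunk = mm[offset:offset + count * ITEM.size]
    return [v for (v,) in ITEM.iter_unpack(chunk)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "features.bin")  # hypothetical dataset file
        write_floats(path, [float(i) for i in range(1_000_000)])
        print(read_slice(path, 500_000, 3))  # [500000.0, 500001.0, 500002.0]
```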

Data Handling and Storage

Modern AI systems are fundamentally data-driven. Their performance is inextricably linked to how efficiently they can handle and store the vast amounts of data required for training and deployment, so effective data management strategies are crucial. Two key characteristics of modern datasets, volume and velocity, significantly influence AI system performance.

Increasing data size necessitates more robust storage solutions, while faster data ingestion rates demand quicker processing capabilities. Consequently, optimized data handling and storage strategies are essential for scaling AI systems and maintaining performance levels as data complexity increases.

Influence of Data Volume and Velocity

The sheer volume of data generated daily, combined with its rapid rate of generation (velocity), presents a significant challenge for AI systems. Large datasets are necessary for training sophisticated AI models, but processing and storing them efficiently is critical. For example, social media platforms generate massive amounts of data daily, requiring specialized systems to manage and analyze it for tasks such as targeted advertising or sentiment analysis.

High data velocity, like sensor data streams from manufacturing plants, requires real-time processing capabilities to enable timely insights and actions.
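Real-time processing of a sensor stream typically works on a sliding window of recent readings rather than the full history. A minimal sketch, assuming readings arrive one at a time as floats: a rolling mean that updates in constant time per reading and could serve as a baseline for spotting readings that drift from recent behavior. The class name and window size are illustrative.

```python
from collections import deque


class RollingMean:
    """Mean of the most recent `window` readings, updated in O(1) per reading."""

    def __init__(self, window: int) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.window = window
        self._values = deque()
        self._total = 0.0  # running sum; may accumulate tiny rounding drift

    def update(self, reading: float) -> float:
        """Add a new reading and return the mean of the current window."""
        self._values.append(reading)
        self._total += reading
        if len(self._values) > self.window:
            self._total -= self._values.popleft()  # evict the oldest reading
        return self._total / len(self._values)


if __name__ == "__main__":
    sensor = RollingMean(window=3)
    for reading in [1.0, 2.0, 3.0, 4.0]:
        print(sensor.update(reading))  # 1.0, 1.5, 2.0, 3.0
```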

Data Storage Techniques

Various data storage techniques are employed to optimize AI system performance. These techniques encompass diverse strategies, including cloud storage, distributed databases, and specialized AI-optimized storage solutions. These methods offer varying trade-offs in terms of cost, scalability, and security.

Comparison of Data Storage Methods

Cloud storage offers scalability and cost-effectiveness, particularly for large-scale AI projects. Distributed databases provide enhanced data management capabilities, enabling concurrent access and processing. Specialized AI storage solutions, designed specifically for AI workloads, often offer higher performance compared to general-purpose databases. The choice of method depends heavily on the specific AI task, the size of the dataset, and the budget constraints.

Impact of Data Preprocessing

Data preprocessing plays a pivotal role in preparing data for AI model training. This involves cleaning, transforming, and preparing data to enhance model performance and reduce errors. Techniques such as data cleaning, feature engineering, and normalization are crucial for producing high-quality training data, leading to more accurate and reliable AI models. For example, if an image dataset contains noisy or corrupted images, preprocessing steps could be used to remove or fix these issues, thus improving the model’s ability to learn from the data.
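Normalization is one of the simplest preprocessing steps to show in code. The sketch below implements two common variants in plain Python, min-max scaling to [0, 1] and z-score standardization (zero mean, unit variance); the function names are illustrative.

```python
import statistics


def min_max_scale(values: list[float]) -> list[float]:
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)  # constant feature: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values: list[float]) -> list[float]:
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0] * len(values)
    return [(v - mean) / std for v in values]


if __name__ == "__main__":
    print(min_max_scale([10.0, 20.0, 30.0]))  # [0.0, 0.5, 1.0]
    print(z_score([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]))
```

In practice, the minimum, maximum, mean, and standard deviation should be computed on the training data only and reused unchanged at inference time, so the model sees inputs on the same scale it was trained on.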

Data Storage Solutions Comparison

| Data Storage Solution | Advantages | Disadvantages | Cost | Scalability | Security |
|---|---|---|---|---|---|
| Cloud Storage (e.g., AWS S3, Azure Blob Storage) | Scalability, cost-effectiveness, accessibility | Potential latency issues, vendor lock-in | Variable, often pay-as-you-go | High | Good, with proper configuration |
| Distributed Databases (e.g., Apache Cassandra, MongoDB) | High availability, horizontal scalability, handling large datasets | Complexity in implementation, potential for data consistency issues | Variable, depending on setup | High | Good, with appropriate security measures |
| Specialized AI Storage (e.g., specialized hardware) | High performance, optimized for AI workloads | Higher initial cost, potentially less flexible | High | High | Good, often with built-in security features |

Energy Consumption and Efficiency

Modern AI systems, particularly deep learning models, demand significant computational resources, often leading to substantial energy consumption. This energy footprint raises concerns about the environmental impact of AI development and deployment, and understanding and mitigating it is crucial for the long-term sustainability of the field. The energy consumption of AI systems varies widely depending on factors like the model architecture, the size of the dataset, and the hardware platform used for training and inference.

Efficient energy management strategies are essential for reducing the environmental burden of these increasingly sophisticated systems.

Energy Demands of AI Models

The computational demands of training and running AI models can be substantial, particularly for large language models and image recognition systems. These models perform enormous numbers of matrix multiplications, and the largest have billions of parameters, resulting in significant power consumption. Published estimates put the training of GPT-3, for instance, at roughly 1,300 megawatt-hours of electricity, which translates to a considerable carbon footprint.

This power consumption is often directly correlated with the model’s complexity and the size of the training dataset.

Methods for Reducing Energy Consumption

Several strategies can be employed to reduce the energy footprint of AI systems. These include optimizing model architectures for energy efficiency, using specialized hardware designed for AI workloads, and implementing efficient training algorithms. Techniques like model quantization, pruning, and knowledge distillation can significantly reduce the computational requirements without sacrificing performance. Furthermore, developing more energy-efficient hardware platforms is a key area of research.
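Knowledge distillation trains a small "student" model to mimic a larger "teacher", so that inference runs on the cheaper model. The sketch below shows the widely used soft-target loss: the KL divergence between temperature-softened teacher and student distributions, scaled by the square of the temperature. It is a standalone illustration with hypothetical function names; in practice this term is usually combined with an ordinary cross-entropy loss on the true labels and computed inside a deep learning framework.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float],
                      teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T**2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T**2 factor keeps soft-target gradients on a comparable scale
    # when the temperature changes.
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    print(distillation_loss([4.0, 1.0, 0.5], teacher))  # 0.0: student matches
    print(distillation_loss([0.5, 1.0, 4.0], teacher))  # positive: mismatch
```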

Energy Efficiency of Different Hardware Platforms

Different hardware platforms exhibit varying degrees of energy efficiency when executing AI workloads. Graphics processing units (GPUs) are often favored for their parallel processing capabilities, but their energy consumption can be substantial. Field-programmable gate arrays (FPGAs) offer more flexibility and can potentially be tailored for specific AI tasks, leading to better energy efficiency in some cases. Specialized AI accelerators are also emerging, promising enhanced energy efficiency compared to general-purpose processors.

The choice of hardware platform depends on the specific AI task and the trade-off between performance and energy consumption.

Importance of Energy Efficiency in AI Sustainability

The environmental impact of AI systems is a growing concern. Reduced energy consumption is critical for long-term sustainability, not only from an environmental perspective, but also from an economic standpoint. Minimizing energy costs can make AI systems more accessible and affordable, enabling broader adoption across various industries. Furthermore, the development of sustainable AI practices contributes to a more responsible and environmentally conscious technology sector.

Table of AI System Architectures, Energy Consumption, and Environmental Impact

| AI System Architecture | Estimated Energy Consumption (kWh) | Estimated Environmental Impact (kg CO2e) |
|---|---|---|
| Convolutional Neural Network (Image Recognition) | 10-100 | 2-20 |
| Recurrent Neural Network (Natural Language Processing) | 50-500 | 10-100 |
| Transformer-based Model (Large Language Models) | 100-1000+ | 20-200+ |

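Note: Values are estimates and can vary significantly based on specific model parameters, training data, and hardware configuration. Environmental impact calculations are based on average CO2 emissions from electricity generation. These ranges describe relatively modest workloads; training frontier-scale language models can consume orders of magnitude more energy.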
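Figures like these come from simple arithmetic: energy equals average power draw times running time, multiplied by the data center's power usage effectiveness (PUE) to account for cooling and other overhead, and emissions equal energy times the carbon intensity of the grid. The table's ranges correspond to about 0.2 kg CO2e per kWh. In the sketch below, every input (8 accelerators at 300 W for 24 hours, PUE of 1.2) is a hypothetical value chosen for illustration, and the function names are illustrative.

```python
def training_energy_kwh(num_accelerators: int, avg_power_watts: float,
                        hours: float, pue: float = 1.0) -> float:
    """Grid energy: device power x run time x data center overhead (PUE)."""
    return num_accelerators * avg_power_watts * hours * pue / 1000.0


def emissions_kg_co2e(energy_kwh: float, grid_kg_per_kwh: float) -> float:
    """Emissions scale linearly with energy and the grid's carbon intensity."""
    return energy_kwh * grid_kg_per_kwh


if __name__ == "__main__":
    # All inputs are hypothetical, chosen only to illustrate the arithmetic.
    energy = training_energy_kwh(8, 300.0, 24.0, pue=1.2)
    print(round(energy, 2))                          # 69.12
    print(round(emissions_kg_co2e(energy, 0.2), 2))  # 13.82
```

Because both steps are linear, halving power draw, run time, or grid carbon intensity halves the resulting emissions.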

Impact on System Design and Architecture

Modern AI’s rapid advancement is significantly altering computer system design. The demands of training and deploying sophisticated AI models are pushing traditional architectures to their limits, leading to the integration of specialized hardware and novel system configurations. This requires rethinking how computer systems are built, from individual components to the overall system design.

The traditional von Neumann architecture, in which the processor fetches both instructions and data from memory over a shared pathway, struggles to keep pace with AI algorithms: moving large weight matrices and activations between memory and processor becomes a bottleneck. This drives the development of more parallel architectures and the incorporation of specialized hardware to accelerate specific AI tasks.

Influence of AI on Computer System Design

AI algorithms, particularly deep learning models, require vast computational resources for training and inference. This necessitates modifications in the design of modern computer systems. Key changes include a move towards parallel processing architectures to handle the massive datasets and complex calculations involved. Additionally, the design process now prioritizes the integration of specialized hardware designed to excel at specific AI tasks.

Role of Specialized Hardware for AI Acceleration

Specialized hardware plays a crucial role in accelerating AI workloads. Graphics Processing Units (GPUs), originally designed for rendering graphics, have proven exceptionally well suited to the parallel computations required by AI algorithms. Field-Programmable Gate Arrays (FPGAs) offer greater flexibility, allowing the hardware itself to be configured for specific AI models. On the tasks they are tailored to, such customized solutions can outperform general-purpose processors.

Comparison of Different AI System Architectures

Various system architectures are optimized for different AI tasks. A common example is the use of heterogeneous systems that combine CPUs for general-purpose tasks with GPUs for AI-specific computations. Other architectures employ dedicated AI accelerators, such as Tensor Processing Units (TPUs), which are designed to perform deep learning operations with exceptional efficiency. The selection of an architecture hinges on the specific requirements of the AI task, encompassing factors such as data volume, processing speed, and energy efficiency.

Examples of AI Changing Computer System Design

Cloud computing platforms are increasingly adopting specialized hardware and architectures optimized for AI workloads, letting users access powerful AI resources without investing in expensive custom hardware. Chip vendors and cloud providers are also developing specialized AI chips, such as Google's TPUs, to meet growing demand for AI processing. These developments illustrate how AI is directly influencing the way computer systems are designed and built.

AI System Architectures Table

| Architecture Type | Key Features | Performance Characteristics | Suitability for Specific AI Tasks |
|---|---|---|---|
| CPU-based | General-purpose processing, widely available | Moderate performance, lower energy efficiency | Suitable for simpler AI tasks, basic model training |
| GPU-based | Massive parallel processing, optimized for matrix operations | High performance, energy-intensive | Ideal for deep learning models, image recognition, and video processing |
| FPGA-based | Highly customizable hardware, adaptable to specific algorithms | Performance depends on customization, high energy efficiency possible | Suitable for specialized AI tasks, custom model acceleration |
| Heterogeneous (CPU+GPU) | Combines general-purpose and specialized processing | Balanced performance, moderate energy consumption | Suitable for diverse AI workloads, balancing general and AI-specific tasks |
| TPU-based | Dedicated hardware for deep learning operations | High performance, optimized energy efficiency | Excellent for large-scale deep learning models, complex AI tasks |

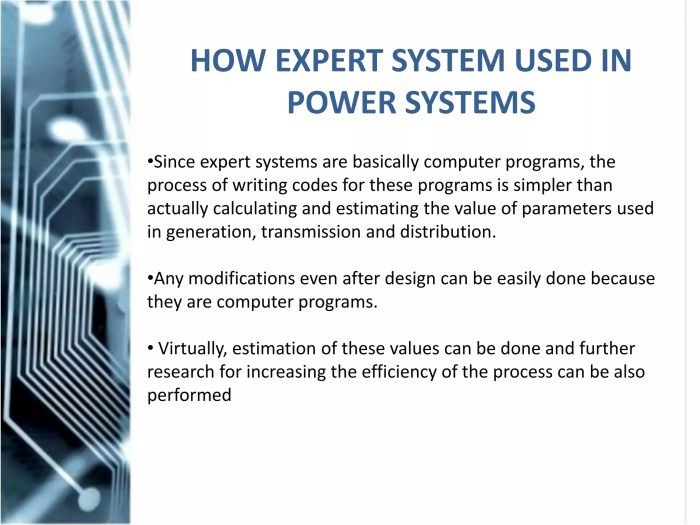

Real-World Applications and Impacts

Source: slidesharecdn.com

Modern AI and computing power are profoundly reshaping industries, driving innovation, and significantly impacting system performance. This transformation extends far beyond theoretical advancements, manifesting in tangible improvements across various sectors, from healthcare diagnostics to autonomous vehicles. The integration of AI and powerful systems is not merely about increased processing speed; it’s about enabling entirely new functionalities and capabilities.

Impact on Healthcare

AI-powered diagnostic tools are changing healthcare by enabling faster and more accurate diagnoses. Machine learning algorithms can analyze medical images (X-rays, CT scans, MRIs) to detect anomalies, potentially helping radiologists identify diseases such as cancer at earlier stages. Earlier detection can speed up treatment and may improve patient outcomes. For example, AI-driven systems can analyze pathology slides to flag cancerous cells with high precision, helping pathologists reach faster and more consistent diagnoses.

These systems can also predict patient responses to treatment, potentially optimizing therapies and minimizing adverse effects.

Impact on Finance

In finance, AI is automating tasks such as fraud detection, risk assessment, and algorithmic trading. Sophisticated AI models can analyze vast datasets of financial transactions to identify patterns indicative of fraudulent activity. This allows financial institutions to proactively prevent fraud, protecting both the institution and its customers. AI-powered systems can also assess investment risks more accurately, enabling more informed investment decisions.

Some high-frequency trading systems use machine learning to make split-second trading decisions; these systems are controversial but significantly affect market liquidity.

Impact on Transportation

Autonomous vehicles are a prime example of AI’s impact on transportation systems. Self-driving cars utilize sophisticated algorithms and sensor data to navigate roads, make decisions, and avoid obstacles. These systems require advanced computer vision and deep learning models, which, when implemented effectively, enhance safety and efficiency on the roads. The integration of AI into transportation systems also enables optimized traffic flow, potentially reducing congestion and travel times.

Furthermore, AI-powered systems can predict maintenance needs for vehicles, preventing breakdowns and improving overall system performance.

Challenges and Limitations in Scaling AI Systems

Scaling AI systems presents significant challenges. The sheer volume of data required to train sophisticated AI models can be overwhelming. Data must be meticulously curated, cleaned, and prepared, which can be a significant undertaking. Additionally, ensuring the ethical and unbiased nature of AI algorithms is crucial, requiring careful consideration of the data used for training. Maintaining the reliability and performance of these complex systems across diverse environments is another key challenge.

Real-World Examples of Enhanced System Performance

- Automated Customer Support Chatbots: Many companies utilize AI-powered chatbots to handle customer inquiries, providing immediate support and reducing response times. These chatbots can efficiently answer frequently asked questions, freeing up human agents to handle more complex issues. This results in faster response times and improved customer satisfaction, thereby increasing system performance in terms of efficiency.

- Predictive Maintenance in Manufacturing: AI algorithms can analyze sensor data from industrial equipment to predict potential malfunctions, allowing for proactive maintenance and preventing costly downtime. This example showcases AI’s capability to improve system performance by optimizing equipment lifespan and minimizing maintenance costs.

- Precision Agriculture: AI-driven systems can analyze data from sensors and satellites to optimize crop yields, minimize water usage, and improve overall agricultural efficiency. By precisely monitoring crops, AI can identify areas needing attention and apply resources more effectively, which can raise agricultural productivity and efficiency.

Closing Summary

In conclusion, AI advancements and system architecture are closely intertwined. The increasing complexity of AI models requires specialized hardware and optimized software to ensure efficient performance. Further research and development are crucial to harness the full potential of AI while addressing the challenges of scalability, energy consumption, and ethical considerations.

The future of computer systems hinges on the ongoing evolution of both AI and the hardware designed to support it.
