Difference Between Cache Types L1 L2 L3

The difference between the L1, L2, and L3 cache types comes down to where each level sits in the memory hierarchy and how it trades speed for capacity. Cache memory plays a crucial role in accelerating data access, with each level contributing differently to overall system performance. Understanding their characteristics and interactions is key to optimizing system design and performance.

This discussion delves into the specifics of each cache level, exploring their location, size, speed, and impact on overall system performance. We’ll examine how these levels interact within the memory hierarchy, comparing their access speeds and capacities. Further, we’ll analyze how cache mapping and replacement policies affect overall efficiency. Understanding cache misses and hit rates, and optimizing cache usage will also be crucial to the discussion.

Introduction to Cache Memory

Source: techspot.com

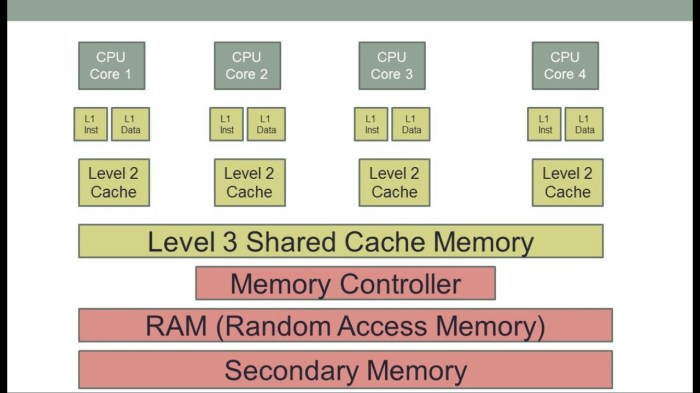

Cache memory is a crucial component in modern computer architecture, acting as a high-speed intermediary between the processor and the main memory (RAM). Its primary function is to store frequently accessed data and instructions, significantly accelerating the overall performance of the system. This rapid access is achieved by maintaining a copy of data that the processor is likely to need next.

The fundamental concept behind cache memory is a hierarchy, where multiple levels of caches (L1, L2, L3) exist, each with varying characteristics in terms of size, speed, and access latency. This hierarchical structure allows for a balance between speed and capacity. The levels closer to the processor (L1) are faster but smaller, while those further away (L3) are slower but larger, thus optimizing the system’s overall performance.

Cache Hierarchy

The cache hierarchy is a crucial aspect of computer architecture, allowing for optimized data access. The closer a cache level is to the processor, the faster it is, enabling quicker access to data. However, this speed comes at the cost of size. The levels further from the processor, though slower to access, can store more data. This trade-off is crucial in achieving a balance between speed and capacity.

Cache Levels

Cache levels are structured in a hierarchy, with L1 being the fastest and closest to the processor, and L3 being the slowest but largest. This tiered approach allows for balanced system performance: the levels nearest the core provide quick access to the most frequently used data, while the levels further out hold the larger volume of data that is accessed less often.

Cache Characteristics Comparison

This table outlines the general characteristics of different cache levels.

| Cache Level | Size | Speed | Access Latency |
|---|---|---|---|
| L1 | Small (typically tens of kilobytes) | Very fast | Lowest (roughly a nanosecond) |
| L2 | Medium (typically hundreds of kilobytes to several megabytes) | Fast | Higher than L1, but lower than L3 (several nanoseconds) |
| L3 | Large (typically several megabytes to tens of megabytes) | Slower than L1 and L2 | Highest among the three (tens of nanoseconds) |

This comparison highlights the trade-off between speed and capacity. Smaller, faster caches (L1) are crucial for quick access to frequently used data, while larger, slower caches (L3) are essential for handling the broader range of data needed by the system. The specific sizes and speeds can vary significantly between different processor architectures.

Level 1 Cache (L1 Cache)

Source: thetechedvocate.org

The L1 cache is the fastest and smallest level of cache memory in a computer system. Its proximity to the processor is crucial for optimal performance, as it allows for extremely rapid data retrieval. This direct access dramatically reduces the time it takes to fetch instructions and data, significantly speeding up overall processing.

The L1 cache is an integral part of the processor’s architecture, playing a vital role in the system’s responsiveness. Its high-speed access is essential for maintaining the processor’s efficiency, and its small size is a consequence of the technological constraints in fabricating high-speed memory.

Location in Relation to the Processor

The L1 cache is integrated directly onto the processor chip. This physical proximity minimizes the distance data needs to travel, drastically reducing latency compared to accessing slower external memory. This close integration ensures that the processor has immediate access to frequently used data and instructions.

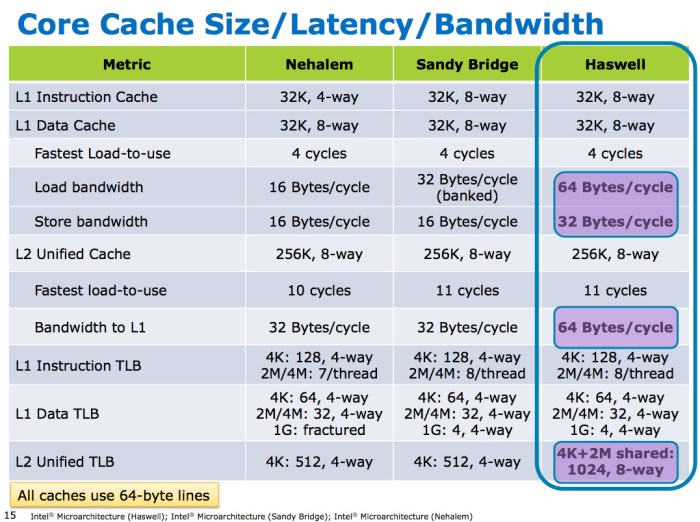

Architecture of L1 Cache

The architecture of the L1 cache is meticulously designed for speed. It’s typically split into two independent caches: an instruction cache and a data cache. The instruction cache stores program instructions, and the data cache stores the data needed by the instructions. This separation allows for simultaneous access to instructions and data, maximizing efficiency. Specialized hardware manages the transfer of data between the processor and the L1 cache, ensuring optimal throughput.

Furthermore, replacement policies such as least recently used (LRU), or cheaper approximations of it, decide which lines stay in the L1 cache, so that data placement and retrieval follow the program’s memory access patterns.

Speed Comparison

L1 cache boasts significantly faster access times than other levels of cache (L2, L3) and main memory. The extremely short access latency of L1 cache is essential for achieving high processing speeds. This speed advantage stems from the cache’s physical proximity to the processor core, as well as its specialized hardware and optimized architecture. Access times for L1 cache are typically around a nanosecond, making it considerably faster than L2 and L3 caches, which take from several to tens of nanoseconds, and main memory, which typically takes on the order of a hundred nanoseconds.

Benefits of Small, Fast L1 Cache

The small size of L1 cache, while seemingly a drawback, offers several crucial benefits. The compactness of the L1 cache allows for the implementation of extremely high-speed memory technologies, leading to faster access times. Minimizing the size of the cache and maximizing the speed contributes to reduced power consumption and heat generation. This translates to more efficient and sustainable computer systems.

By keeping the most frequently used data readily available despite its small size, the L1 cache contributes directly to the processor’s efficiency and overall performance.

Types of L1 Cache

- Instruction Cache: This cache stores the program instructions that the processor needs to execute. Storing instructions in a dedicated cache enables the processor to fetch them rapidly, avoiding the delays associated with accessing main memory.

- Data Cache: This cache stores the data required by the program instructions. Separate data and instruction caches enable concurrent access to both instructions and data, maximizing the processor’s performance.

Impact of L1 Cache Size on System Performance

The size of the L1 cache directly impacts overall system performance. Larger L1 caches can hold more frequently used data and instructions, reducing the need to access slower levels of memory. A larger L1 cache can lead to a significant performance improvement, especially for applications that involve intensive computations and frequent data accesses. However, increasing the L1 cache size often comes at the cost of increased chip area and manufacturing complexity.

This can result in higher production costs. Furthermore, the marginal performance gain for larger L1 caches may not always justify the added complexity.

L1 Cache Size and Performance Example

A computer system with a 32KB L1 cache might perform better than one with a 16KB L1 cache for tasks involving frequent data accesses. This difference is noticeable in programs that extensively use loops or arrays. Real-world examples are evident in video editing software or scientific simulations.

Level 2 Cache (L2 Cache)

Source: anandtech.com

The L2 cache sits between the processor and the considerably larger, slower main memory. It acts as a buffer, storing frequently accessed data and instructions that the processor needs quickly. This intermediary level of cache significantly improves overall system performance by reducing the time it takes to retrieve data from main memory.

Placement Relative to Processor and L1 Cache

The L2 cache is typically located on the processor die, close to the core but slightly farther from its execution units than the L1 cache. This proximity, while not as immediate as that of the L1 cache, still reduces the time required to access data compared to accessing main memory. This strategic placement enables fast data transfer between the processor and the L2 cache.

Size and Speed Characteristics

L2 cache sizes vary considerably across different processor models, ranging from a few hundred kilobytes to several megabytes. Larger L2 caches can hold more frequently accessed data, reducing the need to fetch data from slower main memory. Speed is also a critical factor, with L2 cache speeds somewhat slower than L1 cache but significantly faster than main memory.

Modern processors often employ advanced techniques to optimize both size and speed of L2 cache.

Access Time Comparison

Access time for L2 cache is generally slower than that of L1 cache. However, the difference is typically less pronounced than the difference between L1 cache and main memory access. This difference in access time is a key factor influencing the overall performance of the system. The slower access time of L2 compared to L1 is compensated for by its higher capacity and strategic placement.

Role in Reducing Memory Access Latency

L2 cache plays a crucial role in minimizing memory access latency. By storing frequently used data, the processor can access this data much faster from the L2 cache than from main memory. This reduced latency translates into faster execution of instructions and overall system responsiveness. The reduced memory access latency leads to a smoother user experience.

Example of Critical Performance Scenario

Consider a video editing application. When editing video clips, the application frequently accesses the video data stored in main memory. If the video data isn’t readily available in the L1 or L2 cache, the processor must fetch it from main memory, which can cause noticeable slowdowns. The L2 cache is critical in this scenario, as it can store frequently accessed video data, reducing the time required to fetch it from main memory, leading to a more responsive and efficient video editing process.

Interaction with L1 Cache

Data flows between L1 and L2 cache based on the processor’s demand. If the processor needs data not present in L1 cache, it checks the L2 cache. If the data is found in L2, it’s transferred to L1 cache for faster access. If not found in either, the processor accesses main memory. This hierarchical structure ensures that the most frequently used data is readily available with minimal latency.
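The lookup order described above can be sketched in a few lines of Python. This is only an illustrative model, not how hardware implements it: the function name `lookup` is hypothetical, the caches are plain dictionaries with no size limit or eviction, and a miss is assumed to fill both L2 and L1.

```python
def lookup(address, l1, l2, memory):
    """Return (value, level that served the request)."""
    if address in l1:
        return l1[address], "L1"
    if address in l2:
        # Found in L2: copy into L1 so the next access is faster.
        l1[address] = l2[address]
        return l1[address], "L2"
    # Missed in both caches: fetch from main memory and fill both levels
    # (an assumed fill policy, chosen here for simplicity).
    value = memory[address]
    l2[address] = value
    l1[address] = value
    return value, "memory"


memory = {addr: addr * 10 for addr in range(8)}
l1, l2 = {}, {}

first = lookup(3, l1, l2, memory)   # nothing cached yet
second = lookup(3, l1, l2, memory)  # now present in L1
del l1[3]                           # pretend L1 evicted the entry
third = lookup(3, l1, l2, memory)   # still present in L2

print(first, second, third)
```

The three calls are served by main memory, L1, and L2 respectively, which mirrors the fall-through order in the paragraph above.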

Level 3 Cache (L3 Cache)

The L3 cache is a crucial component in modern computer architecture, acting as a buffer between the processor and the main memory. Its role is to store frequently accessed data and instructions, enabling faster retrieval compared to accessing the slower main memory. This layered approach of caching significantly boosts system performance.

Location and Relationship to Other Caches

The L3 cache sits on the chip, but outside of the L1 and L2 caches. This physical separation, while seemingly simple, is critical for managing data flow and optimizing performance. It’s situated between the L2 cache and main memory and is typically shared by multiple cores, with each core’s L2 cache connected to it to ensure rapid data exchange. This intermediate position allows the processor to access data from the L3 cache more quickly than directly from main memory, thus improving overall system responsiveness.

Size and Speed of L3 Cache

The size of the L3 cache significantly impacts performance. Larger caches can store more data, increasing the likelihood of finding the required information within the cache. Modern L3 caches range from several megabytes to tens of megabytes. This considerable capacity is crucial for handling large datasets, complex computations, and demanding applications. While size is important, the speed of access to data within the L3 cache is equally vital.

L3 cache access times are generally slower than L1 and L2 caches, but still much faster than main memory.

Impact on Overall System Performance

The L3 cache plays a significant role in enhancing overall system performance. By storing frequently accessed data, it reduces the need to access slower memory, thereby minimizing latency and improving responsiveness. This is especially noticeable in applications requiring frequent data retrieval, such as database systems, video editing software, and large-scale simulations. A larger L3 cache will typically result in a more responsive system and enhanced application performance.

Access Speed Comparison

L3 cache access times are typically slower than L1 and L2 caches due to its physical separation from the processor core. This slower speed, however, is still significantly faster than directly accessing main memory. The trade-off between speed and capacity is a key design consideration in optimizing cache performance. Access times vary based on the specific cache architecture and implementation details, but generally, L3 cache access is noticeably faster than main memory access.

Typical Size Ranges

The size of L3 cache varies depending on the processor model and manufacturer. Here’s a table illustrating typical size ranges for L3 caches in modern processors:

| Processor Type | Typical L3 Cache Size Range (MB) |
|---|---|
| High-end Desktop | 16-64+ |
| Mid-range Desktop | 8-32 |
| High-end Mobile | 6-16 |
| Low-end Mobile | 2-8 |

Data Organization Methods

Several methods are used to organize data within the L3 cache to optimize retrieval speed. These methods include set-associative mapping, which groups cache lines into sets, and direct mapping, which maps each memory address to a specific cache line. Other techniques like fully associative mapping allow any memory location to be stored in any cache line. The chosen method directly affects the efficiency of cache lookups and overall system performance.

Different organizations accommodate various use cases, each with its strengths and weaknesses in terms of speed and capacity utilization.

Comparison of L1, L2, and L3 Caches

Cache memory plays a crucial role in modern computer systems, significantly impacting performance by storing frequently accessed data closer to the processor. Understanding the differences between the various cache levels – L1, L2, and L3 – is essential for optimizing system design and achieving optimal speed. This comparison highlights the trade-offs between speed and capacity at each level, offering insight into their roles in the overall memory hierarchy.

The primary distinction between the different cache levels lies in their proximity to the processor and, consequently, their access speed and capacity. The closer a cache level is to the processor, the faster it is to access, but typically at the cost of reduced storage capacity. This fundamental principle governs the design and placement of caches within the memory hierarchy.

Access Speeds of L1, L2, and L3 Caches

The access speed of cache levels directly correlates with their physical proximity to the processor. L1 cache, being the closest, boasts the fastest access times, typically measured in nanoseconds. L2 cache, positioned further away, exhibits a slightly slower access time. L3 cache, the furthest, has the slowest access speed among the three. These variations in access speed are a direct consequence of the physical distance and the associated circuitry involved in retrieving data.

Size Differences among L1, L2, and L3 Caches

The size of each cache level is inversely related to its access speed: the faster the level, the smaller it tends to be. L1 cache, due to its high speed requirements, typically has the smallest capacity. L2 cache possesses a larger capacity compared to L1, while L3 cache, being the furthest from the processor, has the largest capacity. These varying capacities represent a trade-off between speed and the amount of data that can be stored.

A larger cache holds more data, reducing the frequency of data retrieval from slower memory levels.

Placement of L1, L2, and L3 Caches in the Memory Hierarchy

The placement of caches within the memory hierarchy follows a specific order dictated by proximity to the processor. L1 cache sits closest to the CPU, acting as the fastest, but smallest, storage for frequently accessed data. L2 cache is positioned further out, offering a larger storage space for data that is used less often than the contents of L1 but more often than data held only in L3.

Finally, L3 cache, the outermost level, provides the largest storage capacity, acting as a buffer between main memory and the processor. This hierarchical structure minimizes the need for frequent accesses to slower memory levels, significantly enhancing overall system performance.

Trade-offs between Speed and Capacity for Each Level

The design of each cache level presents a trade-off between access speed and storage capacity. L1 cache, optimized for speed, has a relatively small capacity. This design allows for extremely fast access to frequently used data, but requires frequent accesses to slower memory levels for less frequently accessed data. L2 cache offers a balance between speed and capacity, providing a larger storage space for more data while maintaining a speed advantage over L3.

L3 cache, due to its larger capacity, provides a significant buffer for data not readily available in L1 or L2, but at the cost of increased access time compared to the other levels.

Table Illustrating Access Time, Capacity, and Placement

| Cache Level | Access Time (ns) | Capacity (KB/MB) | Placement |
|---|---|---|---|
| L1 | 0.5-1 | 32-256 KB | Closest to the CPU core |
| L2 | 5-10 | 256 KB-8 MB | Further from the CPU core |
| L3 | 10-30 | 4-64 MB | Shared by multiple CPU cores |

Impact of Cache Coherency Protocols on Performance

Cache coherency protocols are essential for maintaining data consistency across multiple caches within a multi-core system. These protocols ensure that all caches hold the most up-to-date values of shared data, preventing data corruption and ensuring reliable operation. Efficient cache coherency protocols are critical for maintaining data integrity and performance in multi-core environments.

Cache Mapping and Replacement Policies

Cache mapping and replacement policies are crucial aspects of cache memory design. These strategies determine how data is stored and retrieved from the cache, impacting overall system performance. Efficient mapping minimizes conflicts, while effective replacement policies ensure that frequently accessed data remains in the cache, reducing the need for slower main memory access.

Cache mapping strategies determine how data from main memory is stored in the cache, and replacement policies decide which data to remove from the cache when it becomes full. The choice of both strategies directly affects cache performance. Different strategies have varying trade-offs in terms of complexity, performance, and cost.

Cache Mapping Strategies

Cache mapping strategies dictate how data blocks from main memory are placed into cache memory locations. Proper mapping minimizes conflicts and improves hit rates. Three primary strategies exist: direct-mapped, associative, and set-associative.

- Direct-mapped: Each memory block maps to exactly one cache location. This simplifies hardware, but many memory blocks share each location, so blocks that map to the same location keep evicting one another (thrashing) when they are used together. This can significantly reduce performance.

- Associative: Any memory block can be placed in any cache location. This maximizes flexibility but requires complex hardware for searching the cache. The hardware overhead for searching all possible locations in the cache can be substantial, potentially impacting overall performance.

- Set-associative: A compromise between direct-mapped and associative mapping. Memory blocks are assigned to specific sets within the cache, and any block within a set can occupy any location within that set. This reduces conflicts and improves performance compared to direct-mapped while retaining lower complexity than fully associative mapping.
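One way to make the three mapping strategies concrete is to look at how an address is split into a tag, a set index, and a byte offset. The sketch below is illustrative only: `split_address` is a hypothetical helper, the 64-byte line size and 64 sets are assumed values, and both must be powers of two. A direct-mapped cache is the case with one line per set, and a fully associative cache is the case with a single set.

```python
def split_address(address, line_size=64, num_sets=64):
    """Split an address into (tag, set index, byte offset).

    line_size and num_sets are assumed to be powers of two.
    """
    offset_bits = line_size.bit_length() - 1  # log2(line_size)
    index_bits = num_sets.bit_length() - 1    # log2(num_sets)

    offset = address & (line_size - 1)                 # byte within the line
    index = (address >> offset_bits) & (num_sets - 1)  # which set to search
    tag = address >> (offset_bits + index_bits)        # identifies the block
    return tag, index, offset


# Set-associative (or direct-mapped) layout with 64 sets.
print(split_address(0x12345))              # (18, 13, 5)

# Fully associative: one set, so every remaining bit is tag.
print(split_address(0x12345, num_sets=1))  # (1165, 0, 5)
```

The index picks the set, and the tag is compared against every line in that set. More lines per set means fewer conflicts but more comparisons per lookup, which is the cost trade-off described in the list above.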

Cache Replacement Policies

Cache replacement policies determine which data blocks to remove from the cache when a new block needs to be loaded. The choice of policy significantly impacts cache performance, as different policies prioritize different access patterns. Common replacement policies include FIFO, LRU, and random.

- FIFO (First-In, First-Out): The block that has been in the cache the longest is replaced. This is simple to implement but may not be optimal for frequently accessed data. If a block is frequently accessed, it might be replaced early, requiring repeated loading from main memory, thus potentially reducing performance.

- LRU (Least Recently Used): The block that hasn’t been used for the longest time is replaced. This policy attempts to keep frequently accessed data in the cache, which generally leads to better performance compared to FIFO. The hardware overhead for tracking recent usage can increase complexity.

- Random: A block is selected randomly for replacement. This is among the simplest policies to implement, since it keeps no usage history. Because it ignores access patterns, it tends to perform somewhat worse than LRU on workloads with strong locality, although the gap is often small in practice.
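The difference between FIFO and LRU can be seen on a small access trace. The following is a minimal sketch, not a model of real hardware: `count_hits` is a hypothetical helper, the cache is fully associative with an assumed capacity of two blocks, and the trace is made up so that block `A` is reused often.

```python
from collections import OrderedDict


def count_hits(accesses, capacity, policy):
    """Count cache hits for a trace under 'FIFO' or 'LRU' replacement."""
    cache = OrderedDict()  # first entry is the next eviction victim
    hits = 0
    for block in accesses:
        if block in cache:
            hits += 1
            if policy == "LRU":
                # A hit refreshes the block under LRU; FIFO ignores hits.
                cache.move_to_end(block)
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the front entry
            cache[block] = True
    return hits


trace = ["A", "B", "A", "C", "A", "D", "A", "B"]
fifo_hits = count_hits(trace, capacity=2, policy="FIFO")
lru_hits = count_hits(trace, capacity=2, policy="LRU")
print(fifo_hits, lru_hits)  # 2 3
```

FIFO evicts `A` even though it is in constant use, because `A` entered the cache first. LRU keeps it resident, which is why it scores one more hit on this trace.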

Comparison of Mapping and Replacement Strategies

The following table summarizes the key characteristics of the discussed cache mapping and replacement strategies:

| Strategy | Mapping | Replacement | Advantages | Disadvantages |
|---|---|---|---|---|
| Direct-mapped | Simple | Simple | Low hardware cost | High conflict rate, poor performance when frequently used blocks map to the same location |
| Associative | Flexible | Complex | High hit rate, good performance | High hardware cost, complex implementation |
| Set-associative | Compromise | Compromise | Good balance between cost and performance, moderate conflict rate | Moderate hardware cost, moderate implementation complexity |
| FIFO | N/A | Simple | Easy to implement | Poor performance for frequently accessed data |
| LRU | N/A | Complex | Good performance for frequently accessed data | Higher hardware overhead |
| Random | N/A | Simplest | Easy to implement | Ignores access history, usually somewhat behind LRU |

Impact on Cache Performance

The choice of mapping and replacement policies directly influences cache performance. A direct-mapped cache, which needs no real replacement decision because each block has only one possible location, is suitable for applications with predictable access patterns. Associative mapping with LRU replacement, on the other hand, is often favored for applications with more unpredictable access patterns. Set-associative caching with LRU replacement provides a good balance between performance and cost.

The selection of the appropriate mapping and replacement policy is critical for optimal system performance.

Cache Misses and Hit Rates

Cache misses and hit rates are crucial performance indicators in computer systems. Understanding these metrics allows for effective optimization of memory access and system speed. A high cache hit rate indicates that data is readily available from the cache, leading to faster processing. Conversely, a high cache miss rate signifies that data needs to be retrieved from slower memory levels, impacting performance.

A cache hit occurs when the requested data is found in the cache, while a cache miss signifies that the data is not present. The proportion of hits to total requests determines the cache hit rate, a critical factor in system performance. Different types of cache misses exist, each impacting performance in unique ways. Understanding these miss types and their causes is essential for optimizing cache usage and improving overall system speed.

Cache Hit and Miss Definitions

Cache hits occur when the requested data resides in the cache. This results in a faster retrieval compared to accessing the data in slower memory levels, such as main memory. Cache misses occur when the requested data is not found in the cache, necessitating a retrieval from a slower memory level. This delay in data retrieval directly impacts system performance.

Types of Cache Misses

Various types of cache misses contribute to overall performance. Understanding these differences helps in targeting specific optimization strategies.

- Compulsory (Cold) Misses: These misses occur when a block of data is first accessed. The cache is initially empty, or the block hasn’t been loaded into the cache yet. This is an unavoidable type of miss as the data must be fetched from a lower level of memory. An example includes the first access to a newly loaded program or data file.

- Capacity Misses: These misses arise when the cache cannot hold all the data required during a particular program’s execution. Even if the program has previously accessed the data, if it exceeds the cache’s capacity, retrieving it from a lower memory level becomes necessary. This frequently happens with large datasets or programs that utilize many different data blocks.

- Conflict Misses: These misses occur when multiple data blocks map to the same cache location. This happens due to the cache’s organization. Although the data might have been previously accessed and stored in the cache, if it’s overwritten by another block that maps to the same location, retrieving it involves a miss. This is particularly relevant in direct-mapped caches and set-associative caches with few lines per set; a fully associative cache has no conflict misses.
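Conflict misses are easiest to see in a direct-mapped cache. The sketch below is a toy model under stated assumptions: `direct_mapped_misses` is a hypothetical helper, the cache has eight lines, and a block's location is simply its block number modulo the number of lines.

```python
def direct_mapped_misses(block_numbers, num_lines):
    """Count misses in a direct-mapped cache with num_lines lines."""
    lines = [None] * num_lines  # block currently held in each line
    misses = 0
    for block in block_numbers:
        slot = block % num_lines  # the only line this block may occupy
        if lines[slot] != block:
            misses += 1
            lines[slot] = block   # overwrite whatever was there
    return misses


# Blocks 0 and 8 both map to line 0 when there are 8 lines.
conflicting = [0, 8, 0, 8, 0, 8]
# Blocks 0 and 1 map to different lines.
friendly = [0, 1, 0, 1, 0, 1]

print(direct_mapped_misses(conflicting, num_lines=8))  # 6
print(direct_mapped_misses(friendly, num_lines=8))     # 2
```

Both traces touch only two distinct blocks, so each has two compulsory misses, and the cache is far from full. The four extra misses in the first trace are conflict misses caused purely by the mapping.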

Relationship Between Hit Rates and Performance

Cache hit rates directly correlate with system performance. A higher hit rate generally translates to faster access times and better overall system speed. This is because data retrieval from the cache is significantly quicker than from main memory or secondary storage.
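A common way to quantify this relationship is the average memory access time: hit time plus miss rate times miss penalty, applied level by level. The sketch below uses assumed numbers for illustration only. The hit times are picked from the ranges in the comparison table earlier in this article, while the 100 ns main memory latency and all miss rates are made-up values, not measurements.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time in the same unit as the inputs."""
    return hit_time + miss_rate * miss_penalty


MEMORY_LATENCY_NS = 100.0  # assumed main memory latency

# Work outward-in: the miss penalty of each level is the average
# access time of the level behind it.
l3_amat = amat(hit_time=20.0, miss_rate=0.5, miss_penalty=MEMORY_LATENCY_NS)
l2_amat = amat(hit_time=7.0, miss_rate=0.25, miss_penalty=l3_amat)
l1_amat = amat(hit_time=1.0, miss_rate=0.125, miss_penalty=l2_amat)

print(l3_amat, l2_amat, l1_amat)  # 70.0 24.5 4.0625
```

With these assumed figures, the average access costs about 4 ns even though main memory takes 100 ns. Raising the L1 miss rate in the sketch shows how quickly that average degrades.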

Scenarios Leading to Cache Misses

Several scenarios can lead to cache misses, impacting performance.

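- Frequent Data Changes: In multi-core systems, when one core modifies shared data, copies of that data held in other cores’ caches are invalidated to keep them consistent. Those cores then miss the next time they access it, so data that is constantly modified by several cores causes frequent misses.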

- Large Datasets: When working with exceptionally large datasets, the cache’s capacity might not suffice to store all necessary data, resulting in frequent capacity misses.

- Program Behavior: The specific way a program accesses data significantly impacts cache performance. Sequential access often leads to better hit rates, while random access patterns can result in more misses.

Methods to Improve Cache Hit Rates

Various methods can be implemented to improve cache hit rates and, consequently, system performance.

- Optimizing Data Structures and Algorithms: Strategies to enhance the program’s efficiency, including optimizing data structures and algorithms to minimize data access, can improve cache utilization.

- Improving Cache Size: Increasing the cache’s size allows it to store more data, reducing the likelihood of capacity misses.

- Choosing Appropriate Cache Mapping Policies: Implementing suitable cache mapping policies can minimize conflict misses and improve data retrieval efficiency.

- Utilizing Prefetching Techniques: Employing prefetching strategies enables the system to anticipate future data accesses, loading data into the cache before it’s needed, thereby reducing the occurrence of compulsory and capacity misses.
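As an illustration of the prefetching item above, the sketch below models a very simple next-line prefetcher. It is a toy under stated assumptions: `misses_with_prefetch` is a hypothetical helper, the cache is unbounded, prefetched lines arrive instantly, and real hardware prefetchers are considerably more sophisticated.

```python
def misses_with_prefetch(lines, prefetch):
    """Count misses over a trace of cache line numbers.

    When prefetch is True, every access also brings in the next line.
    """
    cached = set()  # unbounded cache, assumed for simplicity
    misses = 0
    for line in lines:
        if line not in cached:
            misses += 1
            cached.add(line)
        if prefetch:
            cached.add(line + 1)  # next-line prefetch
    return misses


scan = list(range(16))  # a sequential scan over 16 cache lines
print(misses_with_prefetch(scan, prefetch=False))  # 16
print(misses_with_prefetch(scan, prefetch=True))   # 1
```

On a sequential scan only the very first access misses once prefetching is on. On an unpredictable trace the prefetched lines would mostly go unused, which is why prefetching pays off mainly for regular access patterns.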

Importance of Optimizing Cache Usage

Optimizing cache usage is vital for enhancing system performance. Minimizing cache misses translates to reduced latency and improved responsiveness. This translates to better user experiences, especially in applications requiring quick data access, such as web browsing or game playing.

Cache Performance Considerations

Cache performance is a critical aspect of system design, directly impacting the speed and responsiveness of applications. Optimizing cache usage can significantly enhance overall system performance, allowing for faster execution of tasks and improved user experience. Understanding the factors that influence cache performance is crucial for developing efficient and high-performing systems.

Factors Influencing Cache Performance

Several factors contribute to the overall performance of a cache. These include the cache’s size, the architecture of the cache (e.g., associativity, mapping policies), and the specific access patterns of the programs running on the system. Data access patterns significantly impact cache hit rates, which directly correlate to overall performance. Understanding these relationships is key to achieving optimal performance.

Impact of Data Access Patterns on Cache Hit Rates

Data access patterns play a significant role in determining cache hit rates. Sequential access, where data elements are accessed in order, often leads to higher hit rates as data elements are likely to reside in the cache. Conversely, random or non-sequential access patterns can result in a higher rate of cache misses, as the required data may not be present in the cache.

For instance, traversing an array in order typically results in higher cache hits compared to accessing elements randomly within the array. This difference in access patterns directly impacts the effectiveness of the cache in serving data requests.
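The effect of access order can be estimated with a small simulation. The sketch below rests on several assumptions: `hit_rate` is a hypothetical helper, elements are 8 bytes, cache lines are 64 bytes, and the cache is a tiny fully associative LRU cache of four lines. A strided order stands in for a non-sequential pattern so that the result is deterministic.

```python
from collections import OrderedDict


def hit_rate(indices, element_size=8, line_size=64, num_lines=4):
    """Fraction of array accesses served from a small LRU cache."""
    cache = OrderedDict()  # cache line numbers, least recent first
    hits = 0
    for i in indices:
        line = (i * element_size) // line_size  # line holding element i
        if line in cache:
            hits += 1
            cache.move_to_end(line)
        else:
            if len(cache) >= num_lines:
                cache.popitem(last=False)  # evict least recently used
            cache[line] = True
    return hits / len(indices)


n = 1024
sequential = list(range(n))
# Visit 0, 8, 16, ... then 1, 9, 17, ... so that consecutive accesses
# always land on different cache lines.
strided = [(i * 8) % n + (i * 8) // n for i in range(n)]

print(hit_rate(sequential))  # 0.875
print(hit_rate(strided))     # 0.0
```

Both orders touch exactly the same 1024 elements. In order, each 64-byte line is fetched once and then serves the next seven elements. With the stride, every line is evicted before it is needed again.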

Importance of Memory Access Optimization

Memory access optimization is paramount for achieving high cache hit rates. By arranging data in memory in a way that favors sequential access, programmers can improve the performance of their applications. Techniques such as loop unrolling, data alignment, and the use of cache-friendly algorithms are important considerations for optimizing memory access. Properly aligned data, often organized in a manner that aligns with cache line sizes, reduces cache misses and boosts overall performance.

Strategies for Improving Overall System Performance

Several strategies can improve overall system performance by optimizing cache usage. One key strategy is to employ techniques that reduce cache misses, such as prefetching data, using cache-aware algorithms, and ensuring data alignment. Using cache-aware data structures can minimize cache misses and improve the efficiency of data retrieval. Another crucial strategy is to reduce the number of memory accesses altogether.

For example, avoiding unnecessary computations or using efficient algorithms that minimize memory usage can lead to significant performance gains.

Relationship Between Cache Size and Overall System Performance

The size of the cache significantly affects overall system performance. Larger caches generally lead to higher hit rates, reducing the need for slower main memory accesses. However, increasing cache size also incurs a cost in terms of area and power consumption. A balance must be struck between cache size and cost. In practice, the optimal cache size depends on the specific workload and application characteristics.

For example, applications with large working sets often benefit from larger caches.

Common Performance Optimization Techniques

| Optimization Technique | Description |
|---|---|
| Loop Unrolling | Replicating the loop body so that fewer iterations run, reducing loop overhead; any cache benefit is indirect. |
| Data Alignment | Ensuring data is aligned with cache line sizes to reduce cache misses. |
| Prefetching | Anticipating data needs and fetching the data into the cache before it is required. |
| Cache-Aware Algorithms | Employing algorithms that consider cache characteristics to optimize data access patterns. |
| Reducing Memory Accesses | Minimizing the number of memory accesses to reduce cache misses and improve overall system efficiency. |

Summary: Difference Between Cache Types L1 L2 L3

In summary, the difference between L1, L2, and L3 caches boils down to a trade-off between speed and capacity. L1 caches, closest to the processor, offer the fastest access but are the smallest. L2 caches provide a larger capacity, and L3 caches, further away, offer the largest storage but have the longest access times. Understanding these differences, and how cache mapping and replacement policies affect performance, is vital for optimizing system design and achieving maximum performance.
