Edge Computing Revolution In Data Processing Methods

The edge computing revolution in data processing is transforming how we handle data by moving processing closer to the source. This shift away from purely cloud-based systems brings significant advantages in latency, bandwidth, and security. Different data processing methods, such as real-time analysis and batch processing, are employed at the edge, each with its own strengths and weaknesses. This approach helps manage the massive volume and velocity of data generated by connected devices, although it also introduces security challenges that need careful consideration.

The revolution is being driven by the need for faster response times, improved data privacy, and optimized resource utilization. Applications across various industries are leveraging this technology, from industrial automation to healthcare. Understanding the infrastructure, deployment strategies, and integration with other technologies like AI and IoT is crucial for successful implementation.

Introduction to Edge Computing

Edge computing represents a paradigm shift in data processing, moving away from centralized cloud-based systems towards distributed processing at the network edge. This distributed approach offers significant advantages in terms of latency, bandwidth, and security, particularly for applications demanding real-time responses and sensitive data handling. This shift is driven by the increasing volume and velocity of data generated by diverse sources, making centralized processing increasingly inefficient and challenging.

The fundamental distinction between edge computing and traditional cloud computing lies in the location of data processing.

Traditional cloud computing relies on sending data to a centralized data center for processing, while edge computing performs the processing closer to the source of the data. This proximity significantly reduces latency and dependence on network connectivity, enabling faster and more reliable responses, especially in applications like autonomous vehicles, industrial automation, and IoT sensor networks. This localized processing is crucial for real-time decision-making and responsiveness.
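A rough calculation shows why proximity matters. Light in optical fiber travels at roughly 200,000 km/s, or about 200 km per millisecond, so distance alone sets a floor on round-trip time. The sketch below computes only that propagation floor; the function name and the 1 km and 1,500 km distances are illustrative assumptions, and real round trips add routing, queuing, and processing delays on top.

```python
# Approximate speed of light in optical fiber: about 200,000 km/s,
# i.e. roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber, in milliseconds.

    Counts only propagation delay; routing, queuing, serialization,
    and processing time all add to this in practice.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances: an on-site edge server vs. a distant cloud region.
for label, km in [("edge", 1), ("cloud", 1500)]:
    print(f"{label}: {round_trip_propagation_ms(km):.2f} ms")
```

Under these assumptions, the distant cloud region costs at least 15 ms per round trip before any processing happens, while the nearby edge server's propagation delay is negligible.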

Key Characteristics of Edge Computing

Edge computing possesses several key characteristics that differentiate it from traditional cloud computing. These include reduced latency, enhanced security, and improved resilience. The localized nature of processing minimizes the time it takes for data to travel to a central server, significantly impacting applications requiring rapid responses. This localized processing enhances security by reducing the exposure of sensitive data to potential breaches during transmission.

Moreover, edge computing’s distributed architecture makes it more resilient to network outages or failures, as processing occurs at multiple points in the network.

Fundamental Principles Driving the Shift

The shift towards edge computing is driven by several fundamental principles. The exponential growth in data volume generated by various sources, including IoT devices and sensors, necessitates a more distributed approach to processing. The increasing demand for real-time applications, such as autonomous vehicles and industrial control systems, requires low latency and high responsiveness, making centralized cloud processing inadequate.

Furthermore, concerns about data security and privacy are driving the need for processing data closer to the source, reducing the risk of data breaches during transmission.

Types of Edge Devices and Their Roles

A wide variety of devices act as edge computing nodes, each playing a specific role in data processing. These devices range from small, low-power sensors to powerful gateways and edge servers.

- Sensors: These devices collect raw data from the environment. Examples include temperature sensors in industrial settings, motion detectors in security systems, and pressure sensors in oil pipelines. These sensors often have limited processing capabilities, relying on edge gateways to process and transmit the data.

- Gateways: These act as intermediaries, receiving data from sensors and preparing it for transmission to edge servers or cloud platforms. They often perform basic data aggregation, filtering, and pre-processing, improving efficiency.

- Edge Servers: These devices have more processing power than gateways, enabling complex data analysis and machine learning tasks closer to the source. They are often used in industrial automation, smart cities, and autonomous vehicles.

These various edge devices, each with specific functionalities, contribute to the comprehensive edge computing ecosystem. The synergy between these devices allows for a more distributed and efficient approach to data processing.
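The division of labor between these device tiers can be sketched in a few lines of Python. This is a minimal illustration, not a reference design: `Reading`, `gateway_aggregate`, and `edge_server_analyze` are hypothetical names, the -40 °C to 125 °C plausibility range and the 80 °C alert threshold are assumed values, and a real edge server would typically run far heavier analysis than a threshold check.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    sensor_id: str
    value: float  # e.g. temperature in degrees Celsius


def gateway_aggregate(readings, valid_range=(-40.0, 125.0)):
    """Gateway role: drop implausible readings, then average per sensor."""
    lo, hi = valid_range
    grouped = {}
    for r in readings:
        if lo <= r.value <= hi:  # basic filtering of out-of-range values
            grouped.setdefault(r.sensor_id, []).append(r.value)
    return {sid: mean(vals) for sid, vals in grouped.items()}


def edge_server_analyze(averages, alert_threshold=80.0):
    """Edge server role: further analysis; here, a simple threshold check."""
    return sorted(sid for sid, avg in averages.items() if avg > alert_threshold)


readings = [
    Reading("t1", 78.0), Reading("t1", 84.0),
    Reading("t2", 40.0), Reading("t2", 999.0),  # 999.0 simulates a sensor glitch
]
averages = gateway_aggregate(readings)
print(averages)                       # {'t1': 81.0, 't2': 40.0}
print(edge_server_analyze(averages))  # ['t1']
```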

Data Processing Methods at the Edge

Edge computing introduces a paradigm shift in data processing, moving the computational load closer to the data source. This proximity significantly impacts how data is processed, demanding specialized approaches. Crucially, these methods must balance speed and resource utilization while maintaining data integrity and security.

Different data processing methods are employed at the edge to accommodate diverse use cases and performance requirements.

Real-time analysis, focusing on immediate responses, contrasts with batch processing, which aggregates data over time. Understanding the nuances of each approach is key to optimizing edge computing deployments.

Real-Time Analysis

Real-time analysis at the edge prioritizes immediate data processing and response. This method is essential for applications requiring rapid feedback, such as autonomous vehicles, industrial control systems, and video surveillance, where responsiveness is critical to how the application functions. For instance, a self-driving car needs instant reaction times to avoid obstacles, while a security camera needs to recognize suspicious activity in real time.
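A core requirement of real-time analysis is that each event is handled as it arrives, with bounded work per event. The sketch below assumes a single numeric sensor stream and flags readings that deviate from the rolling mean of the previous few readings; `RollingDetector`, the window size, and the tolerance are hypothetical choices for illustration.

```python
from collections import deque


class RollingDetector:
    """Flags readings that deviate sharply from the recent rolling mean."""

    def __init__(self, window: int = 5, tolerance: float = 10.0):
        self.window = deque(maxlen=window)
        self.total = 0.0  # running sum, so the window is never rescanned
        self.tolerance = tolerance

    def process(self, value: float) -> bool:
        """Handle one reading in O(1) time; return True if it is anomalous."""
        alert = False
        if len(self.window) == self.window.maxlen:
            # Compare against the mean of the readings seen before this one.
            rolling_mean = self.total / len(self.window)
            alert = abs(value - rolling_mean) > self.tolerance
            self.total -= self.window[0]  # oldest value is about to be evicted
        self.window.append(value)
        self.total += value
        return alert


detector = RollingDetector(window=3, tolerance=5.0)
stream = [20.0, 21.0, 19.0, 20.5, 35.0, 20.0]
alerts = [detector.process(v) for v in stream]
print(alerts)  # [False, False, False, False, True, False]
```

Keeping a running total means per-event cost stays constant regardless of window size. Note that a flagged spike still enters the window and shifts the baseline; a production detector might exclude outliers instead.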

Batch Processing

Batch processing at the edge involves aggregating data over a period and then processing it in larger batches. This approach is suitable for tasks that do not require immediate results, such as sensor data aggregation, anomaly detection, and predictive maintenance. The large datasets involved often benefit from offline analysis and powerful algorithms. Examples include processing sensor data from a factory over a 24-hour period to identify equipment anomalies or aggregating customer purchase data to predict future trends.
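By contrast, a batch job sees the whole collection period at once. The sketch below assumes readings arrive as `(machine_id, value)` pairs gathered over a window such as the 24-hour factory example; `summarize_batch` and the 5.0 limit are hypothetical, and the simple peak check stands in for the more sophisticated anomaly-detection algorithms a real deployment would use.

```python
from collections import defaultdict
from statistics import mean


def summarize_batch(records, max_limit):
    """Process a whole batch at once: per-machine count, mean, and max.

    `records` is an iterable of (machine_id, value) pairs collected over
    the batch window. Machines whose peak exceeds `max_limit` are flagged
    for maintenance review.
    """
    by_machine = defaultdict(list)
    for machine_id, value in records:
        by_machine[machine_id].append(value)

    summary = {}
    for machine_id, values in sorted(by_machine.items()):
        summary[machine_id] = {
            "count": len(values),
            "mean": round(mean(values), 2),
            "max": max(values),
            "flagged": max(values) > max_limit,
        }
    return summary


records = [("press-1", 2.1), ("press-1", 2.3),
           ("press-2", 2.0), ("press-2", 6.8), ("press-2", 2.2)]
summary = summarize_batch(records, max_limit=5.0)
print([m for m, s in summary.items() if s["flagged"]])  # ['press-2']
```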

Comparison of Data Processing Methods

| Characteristic | Real-Time Analysis | Batch Processing |
|---|---|---|
| Latency | Extremely low (milliseconds to seconds) | Higher (seconds to minutes or hours) |
| Data Volume | Typically smaller, focused on current data streams | Potentially large, accumulating data over time |
| Security | Requires robust security measures to protect sensitive data transmitted in real time | Security concerns may be mitigated by processing data offline or in a secure environment |
| Resource Requirements | Requires powerful processors and low-latency networks to maintain speed | Requires storage capacity and computational resources to process large datasets |
| Use Cases | Autonomous vehicles, industrial control systems, video surveillance | Sensor data aggregation, anomaly detection, predictive maintenance, and trend analysis |

Impact on Data Volume and Velocity

Edge computing significantly alters how we manage the massive influx of data from connected devices. Traditional centralized data centers struggle with the sheer volume and speed at which this data is generated, leading to latency and processing bottlenecks. Edge computing’s distributed architecture addresses these challenges by processing data closer to its source.

Edge computing enables efficient management of data volume and velocity, transforming how data is collected, processed, and acted upon.

This localized processing reduces the burden on central servers, optimizing performance and enabling real-time responses. It’s a paradigm shift from centralized processing to a more distributed, responsive model.

Data Volume Management at the Edge

The sheer volume of data generated by connected devices necessitates specialized storage and processing techniques at the edge. Data filtering and aggregation techniques are crucial for minimizing the amount of data transmitted to the cloud. Only relevant data points are sent for further processing, reducing network congestion and storage costs. For example, in industrial IoT settings, sensor data can be pre-filtered at the edge to identify anomalies or significant changes, only transmitting these critical events to the cloud.
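One common filtering technique is a deadband (sometimes called report-by-exception): a reading is transmitted only when it differs from the last transmitted value by more than a set threshold. The generator below is a minimal sketch of that idea; the function name and the 1.0 threshold are assumptions for illustration.

```python
def deadband_filter(readings, threshold):
    """Yield only readings that differ from the last transmitted value
    by more than `threshold`; everything else is dropped at the edge.
    """
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield value


readings = [50.0, 50.2, 50.1, 53.5, 53.6, 49.0]
print(list(deadband_filter(readings, threshold=1.0)))  # [50.0, 53.5, 49.0]
```

With this input, only three of the six readings leave the device. Choosing the threshold is the key trade-off: too small and little traffic is saved, too large and meaningful changes are suppressed.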

Data Velocity Management at the Edge

Data velocity, the speed at which data is generated and needs to be processed, is a critical factor in edge computing. Real-time analysis and decision-making are often crucial for applications like autonomous vehicles or industrial control systems. Edge devices need to process data rapidly to enable timely responses. This often involves employing specialized hardware and algorithms optimized for high-speed data processing.

For instance, in a smart city application, edge devices might immediately identify traffic congestion and adjust traffic light timing without waiting for data transmission to a central server.
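The traffic example can be made concrete with a deliberately simple local rule: split the green time of a fixed signal cycle between two approaches in proportion to the queue lengths the edge device measures. This is a hypothetical illustration, not a traffic-engineering standard; `split_green_time`, the 60-second cycle, and the 10-second minimum green are assumed values, and amber and all-red clearance intervals are ignored.

```python
def split_green_time(queue_ns: int, queue_ew: int,
                     cycle_s: float = 60.0, min_green_s: float = 10.0):
    """Split a cycle's green time between north-south and east-west
    approaches in proportion to locally measured queue lengths.

    Each approach always keeps at least `min_green_s` seconds of green.
    """
    if cycle_s < 2 * min_green_s:
        raise ValueError("cycle too short for the minimum green times")
    flexible = cycle_s - 2 * min_green_s  # seconds allocated by demand
    total = queue_ns + queue_ew
    share_ns = 0.5 if total == 0 else queue_ns / total  # no demand: split evenly
    green_ns = min_green_s + flexible * share_ns
    green_ew = cycle_s - green_ns
    return green_ns, green_ew


# 30 vehicles queued north-south, 10 east-west (hypothetical counts).
print(split_green_time(30, 10))  # (40.0, 20.0)
```

Because the decision uses only counts measured at the intersection, it can be recomputed every cycle without waiting on a central server.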

Impact on Data Storage and Management Strategies

Edge computing profoundly impacts data storage and management strategies. Local storage solutions at the edge, often combined with data compression and caching techniques, are critical. This enables rapid access to data without relying on remote servers. This also allows for the development of localized data analytics applications, enabling quicker responses to changing conditions and optimizing resource utilization.
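A common local-storage pattern is store-and-forward: the edge device buffers records, then ships them upstream as a single compressed batch when connectivity allows. The sketch assumes JSON-serializable records and uses Python's standard `json` and `zlib` modules; `StoreAndForwardBuffer` and its capacity are hypothetical, and a production system might prefer a compact binary encoding and persistent on-disk storage.

```python
import json
import zlib
from collections import deque


class StoreAndForwardBuffer:
    """Keeps recent records locally and ships them as one compressed batch."""

    def __init__(self, capacity: int = 1000):
        # Bounded: when full, appending discards the oldest record.
        self.records = deque(maxlen=capacity)

    def add(self, record: dict) -> None:
        self.records.append(record)

    def flush(self) -> bytes:
        """Serialize and compress all buffered records, then clear the buffer."""
        payload = json.dumps(list(self.records)).encode("utf-8")
        self.records.clear()
        return zlib.compress(payload)

    @staticmethod
    def decode(blob: bytes) -> list:
        """Inverse of flush(), as the receiving side would run it."""
        return json.loads(zlib.decompress(blob).decode("utf-8"))


buffer = StoreAndForwardBuffer(capacity=3)
for seq in range(5):  # five readings arrive during an outage
    buffer.add({"seq": seq, "temp_c": 21.5})
blob = buffer.flush()  # only the three newest survive the bounded buffer
print([r["seq"] for r in StoreAndForwardBuffer.decode(blob)])  # [2, 3, 4]
```

Bounding the buffer caps storage use during a long outage at the cost of losing the oldest history; whether that trade-off is acceptable depends on the application.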

Effect of Data Volume and Velocity on Edge Computing Performance

| Factor | Effect of High Volume | Effect of High Velocity | Implication for Edge Performance |
|---|---|---|---|
| Data Volume | Large datasets generated by numerous devices | Relatively smaller datasets, but generated rapidly | Edge devices require robust storage solutions and efficient data filtering algorithms |
| Data Velocity | Impacts response time to critical events | Real-time processing requirements that expose latency issues | Edge devices need high-speed processing capabilities and low-latency communication |
| Combined Volume & Velocity | High processing load on edge devices | Massive amounts of data must be handled while meeting real-time deadlines | Efficient data filtering, aggregation, and compression techniques are critical |

Security Considerations in Edge Computing

Edge computing, while offering numerous advantages in data processing, introduces unique security challenges. The distributed nature of edge devices, often deployed in remote or less secure environments, necessitates robust security measures to protect sensitive data throughout the entire pipeline. The security of data at the edge directly impacts the overall trustworthiness and reliability of the system.

Security Concerns at the Edge

Edge devices, frequently resource-constrained, can be vulnerable to various attacks. These devices often operate with limited or no direct human supervision, making them susceptible to malware or unauthorized access. Compromised edge devices can potentially compromise the entire network, leading to data breaches or service disruptions. Data breaches at the edge can also result in significant financial losses and reputational damage.

The diverse range of devices and operating systems used in edge deployments introduces a complex landscape of potential vulnerabilities.

Security Measures in the Edge Computing Pipeline

Implementing robust security measures across the entire edge computing pipeline is crucial. These measures must address data protection at every stage, from data collection to analysis and transmission. Data encryption, access control, and secure communication protocols are essential components of a comprehensive edge security strategy. Employing intrusion detection and prevention systems (IDPS) to monitor for malicious activity and quickly respond to threats is another key element.

Data Encryption and Access Control

Data encryption plays a vital role in safeguarding sensitive data transmitted and stored at the edge. Strong encryption algorithms, such as AES-256, should be used to protect data in transit and at rest. Access control mechanisms, such as role-based access control (RBAC), should be implemented to restrict access to sensitive data based on user roles and permissions. This ensures only authorized personnel can access and manipulate data.
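Role-based access control reduces to a lookup from roles to permissions with a deny-by-default rule. The sketch below is a minimal illustration; `is_allowed`, the role names, and the permission strings are hypothetical. It covers only the access-control half of this paragraph: encryption such as AES-256 should come from a vetted cryptographic library rather than hand-written code.

```python
# Hypothetical role-to-permission mapping for an edge gateway.
ROLE_PERMISSIONS = {
    "viewer": {"read_telemetry"},
    "operator": {"read_telemetry", "restart_device"},
    "admin": {"read_telemetry", "restart_device", "update_firmware", "export_raw_data"},
}


def is_allowed(user_roles, permission):
    """Return True if any of the user's roles grants `permission`.

    Roles missing from ROLE_PERMISSIONS grant nothing (deny by default).
    """
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_allowed(["viewer"], "export_raw_data"))             # False
print(is_allowed(["viewer", "operator"], "restart_device"))  # True
print(is_allowed(["contractor"], "read_telemetry"))          # False: unknown role
```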

Security Vulnerabilities and Mitigation Strategies

| Potential Security Vulnerability | Mitigation Strategy |
|---|---|
| Unauthorized Access to edge devices | Implementing strong passwords, multi-factor authentication (MFA), and network segmentation to isolate edge devices. |
| Malware Infection of edge devices | Regular software updates, antivirus/anti-malware solutions, and secure boot mechanisms. |
| Data breaches during transmission | Employing end-to-end encryption for all data transmitted between edge devices and the cloud or central servers. |
| Lack of device hardening | Implementing robust device hardening procedures, including disabling unnecessary services and ports, and patching known vulnerabilities. |
| Insider Threats | Implementing strict access control policies, regular security awareness training for personnel, and monitoring user activity. |
| Insufficient Security Training | Regular security awareness training for personnel involved in edge device management and data handling. |

Applications and Use Cases

Edge computing is transforming data processing, enabling real-time insights and faster decision-making across diverse industries. This shift allows for the processing of massive amounts of data closer to the source, minimizing latency and maximizing efficiency. The localized processing reduces the strain on central servers, improving overall system performance and scalability.

Smart Cities

Smart city initiatives leverage edge computing for various applications, including traffic management, environmental monitoring, and public safety. Edge devices collect real-time data from sensors placed throughout the city, enabling rapid responses to events like traffic congestion or pollution spikes. Processing data locally allows for quicker adjustments to traffic signals or alerts to residents about air quality issues. This immediate feedback loop improves the overall efficiency and quality of life for city residents.

Industrial Automation

Edge computing empowers industrial automation by processing data generated from machines and equipment in real-time. This localized processing allows for quicker identification of potential malfunctions, enabling predictive maintenance and minimizing downtime. Real-time insights improve operational efficiency and safety in manufacturing and other industrial settings. For instance, sensors on factory equipment can detect early signs of wear and tear, triggering maintenance schedules before major breakdowns occur, thereby avoiding costly repairs and production halts.

Retail and Customer Experience

Edge computing enhances customer experience in retail settings. Real-time data from in-store sensors and customer interactions can personalize shopping experiences, optimize inventory management, and improve staff performance. Edge devices in stores can track customer flow, identify popular items, and adjust pricing in real time to maximize sales. This personalized approach, powered by localized data processing, creates a more efficient and engaging shopping experience for customers.

Healthcare

Edge computing revolutionizes healthcare by enabling real-time analysis of patient data. Remote patient monitoring systems equipped with edge devices collect and process vital signs and other health information in real time. This enables prompt responses to critical health events, improving patient outcomes. For example, a fall detection system using edge computing can alert medical personnel immediately, allowing for faster intervention.

Similarly, analysis of patient data can lead to early detection of potential health issues, allowing for preventive measures and reducing hospital readmissions.
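A fall detector of the kind mentioned above often looks for a characteristic accelerometer pattern: a brief near-free-fall reading followed shortly by an impact spike. The sketch below implements that heuristic; the function names and the 0.5 g, 2.5 g, and 10-sample thresholds are illustrative assumptions, not clinically validated values, and real systems combine more signals to limit false alarms.

```python
import math


def magnitude_g(sample):
    """Total acceleration magnitude, in g, of an (x, y, z) sample."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_fall(samples, free_fall_g=0.5, impact_g=2.5, max_gap=10):
    """Return the index of the impact sample if a fall pattern is found, else None.

    Pattern: a near-free-fall reading (magnitude below `free_fall_g`)
    followed within `max_gap` samples by an impact spike above `impact_g`.
    """
    free_fall_at = None
    for i, sample in enumerate(samples):
        m = magnitude_g(sample)
        if m < free_fall_g:
            free_fall_at = i  # remember the most recent free-fall reading
        elif free_fall_at is not None and m > impact_g and i - free_fall_at <= max_gap:
            return i
    return None


standing = (0.0, 0.0, 1.0)  # gravity only: about 1 g
falling = (0.1, 0.1, 0.2)   # about 0.24 g
impact = (1.5, 2.0, 2.0)    # about 3.2 g
print(detect_fall([standing, standing, falling, falling, impact, standing]))  # 4
print(detect_fall([standing, impact, standing]))  # None: a bump without free fall
```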

Autonomous Vehicles

Edge computing is critical for autonomous vehicles, enabling the real-time processing of sensor data from cameras, radar, and lidar. Because these sensors generate a high volume of data, processing it locally avoids the latency of a round trip to a remote server and keeps the vehicle responsive, which is essential for safe and reliable navigation. This capability allows for immediate adjustments to vehicle movements, maintaining safety in complex and unpredictable driving conditions.

Future Trends and Developments

The edge computing landscape is rapidly evolving, driven by the increasing demand for real-time data processing and analysis closer to the source. This evolution is fueled by advancements in hardware, software, and communication technologies, paving the way for innovative applications across diverse sectors. Forecasting the future of edge computing requires understanding the emerging trends and their potential impact on various industries.

Emerging Trends in Edge Computing

Several key trends are shaping the future of edge computing. These include the increasing adoption of AI and machine learning at the edge, the rise of specialized hardware optimized for edge tasks, and the growing importance of secure and reliable communication networks. The convergence of these trends will lead to more intelligent and responsive edge systems.

Impact on Industries and Sectors

The impact of edge computing is anticipated to be profound across numerous industries. For example, in manufacturing, real-time data analysis from sensors can enable predictive maintenance, optimizing production efficiency and minimizing downtime. In healthcare, edge computing can facilitate the development of remote patient monitoring systems, enabling proactive healthcare management. Moreover, in the retail sector, edge computing can personalize customer experiences by analyzing real-time data on customer behavior.

Key Research Areas and Innovations

Significant research and innovation are driving the future of edge computing. Key areas include the development of new algorithms and architectures for distributed data processing, the design of energy-efficient edge devices, and the creation of robust security protocols for edge networks. These advancements are vital for unlocking the full potential of edge computing.

Growth and Adoption of Edge Computing

The adoption of edge computing is expected to accelerate significantly in the next decade. This growth is driven by factors such as the increasing availability of powerful and affordable edge devices, the growing demand for low-latency data processing, and the rising need for data privacy and security. For instance, the proliferation of IoT devices generates an immense volume of data that necessitates processing at the edge.

Specific Examples of Future Applications

“Edge computing’s potential extends beyond the traditional applications, enabling entirely new use cases.”

- Autonomous Vehicles: Real-time data processing at the edge allows for faster and more accurate decision-making and quicker reactions to unforeseen situations, improving the safety and efficiency of self-driving vehicles.

- Smart Cities: Edge computing can be used to manage traffic flow, optimize energy consumption, and enhance public safety. Real-time data from sensors and cameras allows for rapid responses to incidents, improving the efficiency of urban services.

- Remote Healthcare: Real-time data analysis at the edge allows for faster diagnosis and prompt responses to health issues, improving patient care and outcomes.

Infrastructure and Architecture

Edge computing’s success hinges on a robust and adaptable infrastructure. This infrastructure must be capable of handling diverse data streams, processing tasks at the edge, and ensuring secure communication between various components. This section delves into the critical components and architectures underpinning edge computing deployments.

The fundamental building blocks of edge computing infrastructure are diverse, ranging from the hardware that collects and processes data to the software that orchestrates the entire process.

Understanding the interplay between these components is crucial for successful deployment and optimization.

Components of an Edge Computing Infrastructure

A typical edge computing infrastructure comprises several interconnected components. These components work in tandem to facilitate data processing, storage, and communication at the edge. Key components include:

- Edge Devices: These devices, ranging from sensors and actuators to gateways and routers, collect data from the physical environment. They may be specialized hardware or embedded systems tailored to specific tasks, or they may be standard computers or laptops. Their role is critical, as they act as the first point of contact for data acquisition and initial processing.

- Edge Gateways: These gateways act as intermediaries between edge devices and the cloud or central data centers. They aggregate data from multiple edge devices, perform preliminary processing, and secure the communication paths. This aggregation and preliminary processing are critical for reducing the volume of data transmitted to the cloud, thus lowering latency and costs.

- Edge Servers: These servers, often specialized for edge computing, are located at the edge of the network. They perform computations, store data temporarily, and manage communication between edge devices and the cloud. They may vary in processing power and storage capacity based on the requirements of the specific application.

- Communication Networks: Reliable and high-bandwidth communication networks are essential for data transmission between edge devices, gateways, servers, and the cloud. Low latency and high throughput are critical considerations in edge computing.

- Software Platforms: These platforms provide the necessary tools for managing and orchestrating edge computing tasks. This includes tools for monitoring, maintenance, and security. The platforms facilitate integration and management of diverse edge devices and applications.

Hardware and Software Roles

The diverse hardware and software components play specific roles in the edge computing ecosystem.

- Hardware: Edge devices, gateways, and servers require specialized hardware tailored to specific processing demands. High-performance processors, sufficient memory, and appropriate storage are necessary. The choice of hardware is heavily dependent on the application, balancing cost, performance, and power consumption.

- Software: Software components such as operating systems, middleware, and application-specific software dictate how data is processed and managed. This includes tools for data analytics, machine learning models, and security protocols. Proper software configuration and maintenance are critical for the efficient operation of the edge computing system.

Examples of Edge Computing Architectures

Edge computing architectures vary depending on the specific use case.

- Industrial Automation: In industrial settings, edge servers process data from sensors in real-time, enabling rapid responses to production issues and optimizing processes. This real-time analysis minimizes downtime and improves efficiency.

- Smart Cities: Sensors collect data on traffic patterns, air quality, and other factors, enabling real-time adjustments to traffic flow and resource management. This minimizes congestion and enhances urban living.

- Retail Applications: In-store cameras and sensors collect data on customer behavior, enabling personalized recommendations and targeted marketing campaigns. This leads to enhanced customer experience and increased sales.

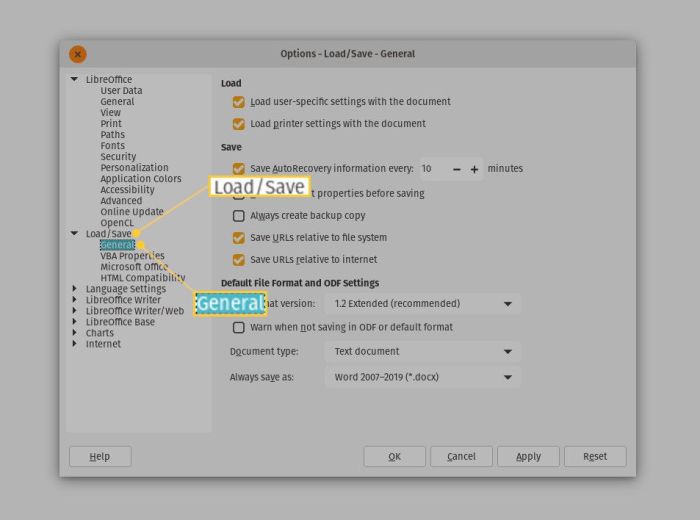

Diagram of a Typical Edge Computing Architecture

A typical edge computing architecture involves interconnected components. The diagram shows edge devices (sensors, cameras) transmitting data to edge gateways. These gateways perform preliminary processing and send data to edge servers. Edge servers perform further computations and potentially store data locally.

Some data may be directly sent to the cloud for analysis and storage, while others are processed locally. A secure communication channel is shown between the edge and the cloud, highlighting the importance of security.
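The split described above, where some data stays local and some is forwarded to the cloud, is ultimately a routing policy running on the edge server. The sketch below expresses one hypothetical policy: latency-critical messages are handled at the edge, messages that need long-term storage are also forwarded to the cloud, and everything else is processed locally. `Message`, its fields, and `route` are assumed names for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    kind: str               # e.g. "brake_command", "camera_clip"
    latency_critical: bool  # must be acted on within milliseconds
    needs_archive: bool     # should be stored long-term or analyzed centrally


def route(msg: Message) -> List[str]:
    """Return the sorted list of destinations ("cloud", "edge") for a message."""
    destinations = set()
    if msg.latency_critical:
        destinations.add("edge")   # act locally, no cloud round trip
    if msg.needs_archive:
        destinations.add("cloud")  # storage and large-scale analysis
    if not destinations:
        destinations.add("edge")   # default: process locally, send nothing upstream
    return sorted(destinations)


for m in [
    Message("brake_command", latency_critical=True, needs_archive=False),
    Message("camera_clip", latency_critical=True, needs_archive=True),
    Message("hourly_summary", latency_critical=False, needs_archive=True),
    Message("heartbeat", latency_critical=False, needs_archive=False),
]:
    print(m.kind, route(m))
```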

Scalability and Deployment


Scaling edge computing infrastructure presents unique challenges compared to traditional cloud-based systems. The distributed nature of edge deployments, coupled with the diverse hardware and software environments at the edge, necessitates careful planning and execution. Deployment strategies must account for variations in bandwidth, latency, and power constraints at different edge locations. Moreover, ensuring security and maintaining data integrity across a vast and dispersed network of devices is critical.

Effective scaling strategies and deployment methods are paramount for realizing the full potential of edge computing.

These strategies need to address the specific requirements of diverse edge environments, from industrial settings to smart city applications. This includes consideration for the different deployment models and their respective trade-offs, ultimately enabling seamless integration and management of edge devices and data.

Challenges in Scaling Edge Computing Infrastructure

Various factors pose challenges to scaling edge computing infrastructure. These include heterogeneity of devices and network conditions, varying bandwidth and latency, limited power resources at the edge, and security vulnerabilities across the distributed network. The lack of centralized management for a large number of devices can also hinder scaling efforts.

Strategies for Scaling Edge Computing Infrastructure

Several strategies are employed to address the scalability challenges. These include leveraging containerization technologies for standardized software deployments, employing dynamic resource allocation based on real-time needs, and adopting automated provisioning and management tools. Moreover, developing efficient data aggregation and transmission protocols is critical. These protocols should minimize data duplication and improve data transfer speed.
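Two of these protocol-level techniques, suppressing duplicate messages and grouping messages into larger transfers, can be sketched with the standard library. The sketch assumes each message carries a `(device_id, seq)` pair that identifies it uniquely, as happens when a sender retries under at-least-once delivery and the same message can arrive twice; `Deduplicator`, `batches`, and the capacity value are hypothetical.

```python
from collections import OrderedDict


class Deduplicator:
    """Drops messages whose (device_id, seq) key was seen recently.

    Memory is bounded: only the most recent `capacity` keys are remembered.
    """

    def __init__(self, capacity: int = 10_000):
        self.seen = OrderedDict()
        self.capacity = capacity

    def accept(self, device_id, seq) -> bool:
        key = (device_id, seq)
        if key in self.seen:
            self.seen.move_to_end(key)  # refresh recency of a repeated key
            return False
        self.seen[key] = None
        if len(self.seen) > self.capacity:
            self.seen.popitem(last=False)  # forget the oldest key
        return True


def batches(items, size):
    """Group items into lists of at most `size` for fewer, larger transfers."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


dedup = Deduplicator(capacity=100)
incoming = [("g1", 1), ("g1", 2), ("g1", 2), ("g2", 1), ("g1", 1)]
unique = [m for m in incoming if dedup.accept(*m)]
print(list(batches(unique, 2)))  # [[('g1', 1), ('g1', 2)], [('g2', 1)]]
```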

Deployment Methods in Various Environments

Deploying edge computing systems in diverse environments requires adapting to specific needs. This involves choosing appropriate hardware, considering network connectivity, and ensuring compatibility with existing infrastructure. For example, in industrial settings, edge devices need robust security measures and real-time data processing capabilities, whereas in smart city applications, low-latency communication and data privacy are paramount. Thorough planning and analysis are essential for appropriate device selection and deployment.

Deployment Models Comparison

Different deployment models offer varying advantages and disadvantages, each catering to specific needs and priorities. A comparison of these models is crucial for selecting the most suitable approach for a given use case.

Table of Deployment Models

| Deployment Model | Advantages | Disadvantages |
|---|---|---|
| Cloud-Based | Scalability, centralized management, potentially lower cost, and reduced initial investment. | Potential latency from transferring data to and from the cloud, security concerns regarding data in transit, and dependence on cloud infrastructure availability. |
| On-Premise | Enhanced control over data and infrastructure, lower latency, and greater security due to local management. | High initial investment, limited scalability, and potential operational complexity. |
| Hybrid | Combines the benefits of both cloud and on-premise deployments, offering flexibility to manage data and resources strategically. | Requires sophisticated integration and management of both systems, which can add operational complexity and cost. |

Integration with Other Technologies

Edge computing becomes considerably more powerful when integrated with other advanced technologies. This synergistic approach allows for real-time data processing, enhanced decision-making, and improved efficiency in various applications. Combining edge computing with artificial intelligence (AI), the Internet of Things (IoT), and machine learning (ML) creates an ecosystem for handling the deluge of data generated at the edge, enabling real-time analysis and action that significantly improve efficiency and responsiveness.

For example, in smart manufacturing, edge devices equipped with AI and ML algorithms can detect anomalies in machinery and predict potential failures, preventing costly downtime. This proactive approach translates into optimized operational efficiency and reduced maintenance costs.

AI Integration

AI algorithms can be deployed directly on edge devices, enabling immediate analysis of data without the need for constant transmission to a centralized cloud. This local processing significantly reduces latency, crucial for applications requiring real-time responses, such as autonomous vehicles and industrial automation. AI models can be trained on edge data, leading to more accurate and contextualized insights.

For instance, in a retail environment, edge AI can analyze customer behavior in real time to personalize recommendations and optimize inventory management.

IoT Integration

The Internet of Things (IoT) generates a massive amount of data from diverse sources, including sensors, devices, and actuators. Edge computing facilitates the processing and analysis of this data at the source, reducing the load on the central infrastructure and improving data responsiveness. This integration allows for the development of more sophisticated and intelligent IoT applications, enabling efficient management of connected devices and facilitating proactive maintenance.

Smart grids, for example, can leverage edge computing to monitor energy consumption in real time, optimize energy distribution, and predict potential outages.

Machine Learning Integration

Machine learning models, often complex and computationally intensive, can be deployed on edge devices for real-time decision-making. This decentralized approach allows for rapid processing and response, vital for applications like fraud detection and predictive maintenance. Edge ML models can be trained on the vast datasets generated by IoT devices, leading to more accurate and refined predictive models. Financial institutions, for instance, can leverage edge ML to detect fraudulent transactions in real time, minimizing financial losses.
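As a minimal illustration of edge inference, the sketch below scores a transaction with a logistic-regression model whose weights are assumed to have been trained elsewhere and shipped to the device. The feature names, weights, bias, 0.5 threshold, and function names are all hypothetical; real deployments typically use larger models served by an on-device runtime such as TensorFlow Lite or ONNX Runtime.

```python
import math

# Hypothetical model parameters, assumed trained centrally and shipped to the edge.
WEIGHTS = {"amount_zscore": 1.2, "new_merchant": 0.8, "foreign_country": 1.5}
BIAS = -3.0


def fraud_probability(features: dict) -> float:
    """Logistic-regression score computed locally; no network call needed.

    Missing features are treated as 0.0.
    """
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def should_block(features: dict, threshold: float = 0.5) -> bool:
    return fraud_probability(features) >= threshold


typical = {"amount_zscore": 0.1, "new_merchant": 0, "foreign_country": 0}
suspicious = {"amount_zscore": 2.5, "new_merchant": 1, "foreign_country": 1}
print(should_block(typical))     # False
print(should_block(suspicious))  # True
```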

Synergy Table

| Technology | Edge Computing Benefit | Example |
|---|---|---|
| Artificial Intelligence (AI) | Real-time analysis, local decision-making, reduced latency | Autonomous vehicles, industrial automation |
| Internet of Things (IoT) | Data processing at the source, reduced network load, improved responsiveness | Smart grids, smart homes |
| Machine Learning (ML) | Real-time decision-making, predictive capabilities, enhanced data analysis | Fraud detection, predictive maintenance |

Wrap-Up

In conclusion, the edge computing revolution in data processing presents a paradigm shift in how data is handled. By bringing processing closer to the source, we gain significant advantages in latency, bandwidth, and security. However, this shift also introduces challenges related to data volume, data velocity, and the protection of widely distributed devices. The future of edge computing promises even more innovative applications and integrations, driving further gains in efficiency, faster decision-making, and cost reduction across diverse industries.

This ongoing evolution is poised to shape the future of data processing.
