Different AI Tools Research: A Comprehensive Analysis

Research on different AI tools examines the capabilities, limitations, and ethical implications of each. This exploration covers a broad range of functions, from image generation to code creation, and looks at each tool’s strengths and weaknesses. We’ll compare their performance on specific tasks, evaluating factors like accuracy, speed, and cost-effectiveness. We’ll also scrutinize the methodologies used to evaluate these tools, highlighting the importance of standardized metrics and ethical considerations.

This in-depth investigation provides a detailed overview of current AI tools, examining their applications across diverse industries like healthcare and finance. It also explores emerging trends and future directions, including the potential impact on various sectors. We’ll analyze the limitations and potential biases of these tools, emphasizing the importance of responsible development and deployment.

Overview of AI Tools

Artificial intelligence (AI) tools are rapidly transforming various industries, offering innovative solutions for tasks previously requiring significant human effort. This overview details a selection of prominent AI tools, categorized by their core functionalities, to illustrate their diverse applications and potential impact. From generating creative images to automating complex coding tasks, AI tools are increasingly essential for efficiency and innovation.

AI Tools Categorized by Function

This section presents a structured overview of AI tools categorized by their primary function. Each category includes a variety of tools, each with unique strengths and weaknesses. Understanding these distinctions allows users to select the most appropriate tool for a specific task.

| Tool Name | Category | Key Features | Description |
|---|---|---|---|
| DALL-E 2 | Image Generation | Text-to-image generation, high-resolution outputs, diverse styles | DALL-E 2 translates textual descriptions into images. Its ability to produce photorealistic and artistic imagery has applications in design, marketing, and education. A limitation is that the quality of output depends heavily on the clarity and detail of the input text. |
| Midjourney | Image Generation | Text-to-image generation, collaborative environment, unique artistic styles | Midjourney stands out for its community-driven approach to image creation. Users can share and collaborate on generated images, fostering creativity and exploration of new artistic directions. However, navigating the platform’s interface might require some initial learning. |
| Jasper | Text Summarization & Generation | Long-form content generation, different writing styles, SEO optimization | Jasper is a versatile tool for generating various types of text, from short summaries to comprehensive articles. Its ability to produce SEO-optimized content makes it attractive to marketers and content creators. A potential weakness is the occasional need for human editing to ensure accuracy and style consistency. |
| Grammarly | Text Editing & Enhancement | Grammar and style checking, plagiarism detection, vocabulary suggestions | Grammarly is a widely used tool for enhancing writing quality. Its grammar and style checks help ensure clarity and precision. It also detects plagiarism, which is important for academic and professional writing. While it assists with writing, it does not replace the need for critical review. |
| GitHub Copilot | Code Generation | Code completion, code generation, best-practice suggestions | GitHub Copilot boosts developer productivity by suggesting and generating code snippets. It assists with tasks such as writing functions and loops, helping to reduce development time. However, the generated code needs review to ensure it works as intended and adheres to coding standards. |

AI Tool Strengths and Weaknesses

This section explores the strengths and weaknesses of different AI tools, enabling users to evaluate their suitability for specific tasks. Understanding the limitations is as important as recognizing the advantages.

AI tools, while powerful, often require careful input and human oversight. The quality of output is often directly proportional to the quality of the input provided. Human expertise remains crucial for ensuring accuracy and appropriateness in various applications.

Comparing AI Tool Capabilities

Different AI tools exhibit varying strengths and weaknesses when tackling specific tasks. Understanding these nuances is crucial for selecting the optimal tool for a given project. This section delves into the comparative performance of various AI tools, highlighting their capabilities and limitations across diverse input data types.

Comparing AI tools isn’t just about identifying the fastest or most accurate. It also means considering factors like the nature of the data, the complexity of the task, and the resources available. A tool might excel in summarizing articles but struggle with image recognition, which is why the tool should be matched to the specific use case.

Performance Comparison on Summarization Tasks

Evaluating AI tools for summarization tasks necessitates a multi-faceted approach, focusing on accuracy, speed, and cost-effectiveness. The quality of a summary hinges on several key factors, including the depth of understanding of the original text, the ability to condense information without losing critical details, and the clarity and coherence of the output.

| AI Tool | Accuracy (1-5, higher is better) | Speed (seconds per article) | Cost (USD per 1,000 words) |
|---|---|---|---|
| Tool A | 4 | 15 | $0.05 |
| Tool B | 3 | 10 | $0.03 |
| Tool C | 5 | 20 | $0.10 |

Note: These figures are illustrative and may vary based on the specific article length and complexity. Accuracy scores are based on human evaluation of summary quality.
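One way to turn a comparison like this into a single ranking is a weighted score over normalized columns. The sketch below is only an illustration: the `ToolResult` class, the `rank` function, and the default weights are assumptions made for this example, and the numbers are the illustrative figures from the table, not measurements.

```python
from dataclasses import dataclass


@dataclass
class ToolResult:
    name: str
    accuracy: float  # human rating on a 1-5 scale, higher is better
    seconds: float   # seconds per article, lower is better
    cost: float      # USD per 1,000 words, lower is better


# Illustrative figures copied from the comparison table.
RESULTS = [
    ToolResult("Tool A", 4, 15, 0.05),
    ToolResult("Tool B", 3, 10, 0.03),
    ToolResult("Tool C", 5, 20, 0.10),
]


def normalise(values, higher_is_better):
    """Min-max scale values to [0, 1] so that 1 is always the best."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0 for _ in values]
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def rank(results, w_accuracy=0.5, w_speed=0.25, w_cost=0.25):
    """Return (score, name) pairs, best first. The weights are assumptions."""
    acc = normalise([r.accuracy for r in results], higher_is_better=True)
    spd = normalise([r.seconds for r in results], higher_is_better=False)
    cst = normalise([r.cost for r in results], higher_is_better=False)
    scored = [
        (w_accuracy * a + w_speed * s + w_cost * c, r.name)
        for r, a, s, c in zip(results, acc, spd, cst)
    ]
    return sorted(scored, reverse=True)


if __name__ == "__main__":
    for score, name in rank(RESULTS):
        print(f"{name}: {score:.3f}")
```

With the default weights, Tool A ranks first on these illustrative figures. Weighting accuracy alone (`rank(RESULTS, 1, 0, 0)`) puts Tool C first, which shows how much the outcome depends on the chosen weights.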

Handling Diverse Input Data Types

Different AI tools demonstrate varying levels of proficiency in processing different types of input data. The tool’s ability to adapt to and understand diverse data types is critical for its effectiveness.

- Text Data: Tools like GPT-3 excel at processing textual information, including news articles, research papers, and social media posts. Their strength lies in understanding context and generating coherent summaries or responses.

- Image Data: Tools specialized in image recognition can identify objects, scenes, and people within images. Their proficiency in analyzing visual data sets them apart from text-based tools.

- Audio Data: Tools designed for audio analysis can transcribe speech, identify emotions, and even generate music. Their ability to process audio signals makes them suitable for tasks like speech recognition and sentiment analysis.

Strengths and Limitations of Specific Tools

Each AI tool possesses unique strengths and limitations that dictate its suitability for different tasks. Understanding these characteristics is critical for choosing the most effective tool.

- Tool A: Strengths include high accuracy in summarizing articles, especially complex ones. Limitations include a higher cost and longer processing time than Tool B. It performs well with structured and well-written articles, but may struggle with informal or poorly formatted content.

- Tool B: Strengths include the fastest processing speed and the lowest cost of the three, making it economical for high-volume tasks. Its main limitation is lower accuracy than Tool A and Tool C. It often produces summaries that are more concise than comprehensive, but these can still serve as a good initial summary.

- Tool C: Strengths lie in its ability to handle various data types, including images and audio, with the highest accuracy of the three. Limitations include the highest cost and the longest processing time in the comparison above, and a steeper learning curve for less experienced users.

Research Methods for AI Tool Evaluation

Evaluating AI tools effectively is crucial for selecting the most suitable solution for a given task. This involves understanding the strengths and weaknesses of various methodologies and their impact on the reliability and validity of the evaluation results. Different approaches cater to different needs, from simple benchmarks to complex simulations, and choosing the right method hinges on the specific characteristics of the AI tool and the objectives of the evaluation.

Methodologies for AI Tool Evaluation

Various methodologies are employed to assess the performance and capabilities of AI tools. These range from straightforward benchmark tests to intricate simulations and real-world deployments. A key aspect of successful evaluation is selecting a method that aligns with the tool’s intended use and the evaluation’s goals.

- Benchmarking: Benchmarking involves comparing the performance of an AI tool against established standards or other comparable tools. This method is often used to assess the tool’s performance on specific tasks, such as image recognition or natural language processing. It provides a quantitative measure of the tool’s effectiveness and facilitates comparisons across different models. For instance, a benchmark dataset for image classification can be used to compare the accuracy of various AI models.

- A/B Testing: A/B testing is a controlled experiment where two or more versions of an AI tool are compared to determine which performs better. This method is particularly useful for evaluating the impact of design changes or feature enhancements. For example, a company might compare two versions of a chatbot to assess user engagement or response time.

- Simulated Environments: Simulations allow testing of AI tools in controlled environments that mimic real-world scenarios. This approach enables the evaluation of the tool’s robustness and adaptability under various conditions. For example, a self-driving car could be tested in a simulated urban environment to assess its performance in different traffic situations.

- Real-World Deployments: Real-world deployments involve using the AI tool in its intended environment to evaluate its effectiveness in practical applications. This method offers valuable insights into the tool’s performance in a dynamic setting, accounting for unforeseen factors and real-user interactions. For example, a fraud detection system could be implemented in a bank to assess its ability to identify fraudulent transactions in real-time.
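For the A/B testing method described above, a common way to check whether an observed difference is more than noise is a two-proportion z-test. The following is a minimal sketch using only the standard library; the function name and the example counts are hypothetical, and a real study would also need to plan sample sizes in advance.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Two-sided z-test for a difference between two success rates.

    Returns (z, p_value). A positive z means variant B has the higher rate.
    """
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: chatbot A resolved 100 of 1000 chats, B resolved 150 of 1000.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```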

Factors Influencing Reliability and Validity

The reliability and validity of an AI tool evaluation are influenced by several factors. Careful consideration of these factors is crucial to ensure that the results accurately reflect the tool’s true capabilities.

- Dataset Quality: The quality and representativeness of the dataset used for evaluation directly impact the reliability and validity of the results. A biased or incomplete dataset can lead to inaccurate conclusions about the tool’s performance. A crucial consideration is the size and diversity of the data, ensuring that it encompasses the range of inputs the tool might encounter.

- Evaluation Metrics: Appropriate evaluation metrics are essential for accurate assessment. Choosing metrics that align with the specific objectives of the evaluation ensures a comprehensive understanding of the tool’s strengths and weaknesses. Metrics like accuracy, precision, recall, and F1-score can be used to evaluate different aspects of the AI tool’s performance.

- Evaluator Bias: Evaluator bias can affect the objectivity of the evaluation process. Ensuring that evaluators are trained and calibrated to avoid bias in their assessment is critical for obtaining reliable results. Clear guidelines and standardized procedures can mitigate the impact of human bias.
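The metrics named above have standard definitions in terms of true and false positives and negatives. As a small sketch for the binary case (the function name `binary_metrics` and the sample labels are made up for illustration):

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many were right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Made-up labels purely for demonstration.
    truth = [1, 1, 1, 0, 0, 0, 0, 1]
    preds = [1, 0, 1, 0, 1, 0, 0, 1]
    print(binary_metrics(truth, preds))
```

Accuracy alone can mislead on imbalanced data, which is one reason precision and recall are usually reported alongside it.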

Standardized Metrics for AI Tool Evaluation

Establishing standardized metrics for evaluating AI tools is critical for ensuring consistency, comparability, and reproducibility of results. This fosters a common language and understanding for evaluating AI performance across various contexts and applications.

- Defining Metrics: Clear definitions of the metrics are needed to ensure everyone understands how the metrics are calculated. For example, defining accuracy as the percentage of correctly classified instances clarifies how the metric is derived.

- Consistent Application: Consistent application of the metrics across different evaluations is vital for reliable comparisons. Using the same metrics and calculation methods in different tests ensures a fair assessment.

Step-by-Step Procedure for Evaluating a Specific AI Tool (Image Recognition)

This procedure outlines a systematic approach to evaluating an image recognition AI tool.

- Define Evaluation Objectives: Clearly state the goals of the evaluation, such as identifying the types of images the tool should recognize or the acceptable error rate.

- Data Collection: Collect a diverse dataset of images representing various categories and conditions, ensuring a balanced representation of different classes. This dataset should be large enough to accurately reflect the expected input variations.

- Define Metrics: Choose relevant metrics for evaluating the image recognition performance, such as precision, recall, and accuracy.

- Implementation: Run the AI tool on the collected dataset and record the results for each metric.

- Analysis: Analyze the results, identifying strengths and weaknesses of the tool based on the established metrics.

- Documentation: Document the entire process, including the methods used, the results obtained, and the conclusions drawn.
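The implementation and analysis steps above can be sketched as a small harness that runs a classifier over labeled samples and reports per-class results. Everything here is hypothetical: `evaluate` is a name chosen for this example, and the stand-in classifier simply looks at file names so that the sketch runs without any real image model.

```python
from collections import Counter


def evaluate(classify, dataset):
    """Run `classify` over (sample, label) pairs and summarise the results.

    `classify` is any callable mapping a sample to a predicted label.
    """
    confusion = Counter()  # (true_label, predicted_label) -> count
    for sample, label in dataset:
        confusion[(label, classify(sample))] += 1

    labels = sorted({name for pair in confusion for name in pair})
    total = sum(confusion.values())
    correct = sum(confusion[(name, name)] for name in labels)
    report = {"accuracy": correct / total if total else 0.0, "per_class": {}}

    for name in labels:
        tp = confusion[(name, name)]
        predicted = sum(confusion[(other, name)] for other in labels)
        actual = sum(confusion[(name, other)] for other in labels)
        report["per_class"][name] = {
            "precision": tp / predicted if predicted else 0.0,
            "recall": tp / actual if actual else 0.0,
            "support": actual,
        }
    return report


if __name__ == "__main__":
    # Stand-in classifier and made-up file names, for illustration only.
    def toy_classifier(filename):
        return "cat" if "cat" in filename else "dog"

    samples = [
        ("cat_01.png", "cat"),
        ("cat_02.png", "cat"),
        ("dog_01.png", "dog"),
        ("dog_cat_mix.png", "dog"),
    ]
    print(evaluate(toy_classifier, samples))
```

Keeping the raw confusion counts, not just the final scores, makes the documentation step easier because the per-class weaknesses can be traced back to specific kinds of errors.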

Case Studies of AI Tool Applications

Real-world deployments of AI tools showcase their diverse capabilities and benefits across various sectors. These applications demonstrate how AI can streamline processes, enhance decision-making, and drive innovation. Understanding these use cases provides valuable insights into the practical implications and potential impact of AI technologies.

Healthcare Applications

AI tools are increasingly employed in healthcare for tasks ranging from disease diagnosis to personalized treatment plans. These applications leverage vast datasets of medical images, patient records, and research findings to provide accurate and efficient support for healthcare professionals. The application of AI in healthcare can lead to improved patient outcomes and reduced healthcare costs.

- Image Analysis for Disease Detection: AI-powered tools can analyze medical images like X-rays, CT scans, and MRIs with remarkable speed and accuracy. These tools can identify subtle anomalies indicative of diseases like cancer, helping clinicians make faster and more informed diagnoses. This early detection can significantly improve treatment outcomes.

- Personalized Treatment Plans: AI can analyze patient data to tailor treatment plans based on individual characteristics, genetic predispositions, and medical history. This personalized approach can lead to more effective treatments and reduced side effects. For example, AI algorithms can predict the likelihood of a patient responding to a specific medication.

- Drug Discovery and Development: AI is accelerating the drug discovery process by identifying potential drug candidates and predicting their efficacy and safety. This shortens the time it takes to develop new medications that address unmet medical needs.

Finance Applications

AI is transforming the financial sector by automating tasks, detecting fraudulent activities, and improving risk management. These applications enhance efficiency and security within financial institutions, ultimately benefiting customers.

- Fraud Detection: AI algorithms can analyze vast amounts of financial transaction data to identify patterns indicative of fraudulent activities. This proactive approach can prevent significant financial losses and protect customers from scams. Sophisticated AI models can detect anomalies and unusual patterns that human analysts might miss.

- Algorithmic Trading: AI-powered trading systems can analyze market data in real time to execute trades automatically. These systems can react to market fluctuations faster than human traders, potentially improving investment returns. High-frequency trading, for example, relies on automated, low-latency algorithms to capitalize on fleeting market opportunities.

- Customer Service: AI chatbots are used to handle customer inquiries and provide support, reducing response times and improving customer satisfaction. This 24/7 availability of support significantly improves customer experience and operational efficiency for financial institutions.

Table of AI Tool Applications

| Tool | Industry | Application | Results |
|---|---|---|---|
| Image Recognition AI | Healthcare | Identifying cancerous tumors in mammograms | Improved early detection rates, leading to better patient outcomes. |
| Fraud Detection AI | Finance | Identifying suspicious transactions in credit card activity | Reduced fraudulent activity and improved security for customers. |
| Predictive Maintenance AI | Manufacturing | Predicting equipment failures in industrial machinery | Reduced downtime and maintenance costs. |

Trends and Future Directions

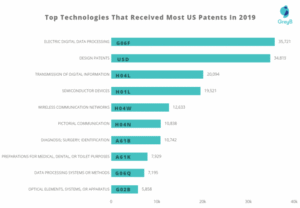

Source: startuptalky.com

The landscape of AI tools is rapidly evolving, driven by advancements in machine learning algorithms and increasing computational power. This dynamic environment presents both exciting opportunities and significant challenges for researchers and practitioners alike. Emerging trends are shaping the future of AI tool development and application, impacting various sectors in profound ways.

Emerging Trends in AI Tool Development

The development of AI tools is characterized by several key trends. Increased accessibility and user-friendliness are crucial aspects, as are advancements in explainability and trustworthiness. Tools with greater interpretability allow users to understand how decisions are made, promoting confidence and acceptance. Moreover, the development of specialized AI tools tailored to specific industries is gaining traction. These tools are designed to address unique needs and complexities within particular sectors, leading to more effective and targeted solutions.

Potential Future Impact on Various Sectors

AI tools are poised to revolutionize numerous sectors. In healthcare, AI-powered diagnostic tools can improve accuracy and speed, potentially saving lives. In finance, AI algorithms can detect fraudulent activities and optimize investment strategies. Manufacturing processes can be optimized through predictive maintenance and automated quality control, increasing efficiency and reducing downtime. Furthermore, AI tools are transforming customer service, enabling personalized interactions and automating routine tasks.

Challenges and Opportunities in AI Tool Research

The field of AI tool research faces numerous challenges. Ensuring data privacy and security is paramount, as is addressing potential biases in AI algorithms. Moreover, the ethical implications of AI tool applications need careful consideration. However, the opportunities are equally compelling. AI tools can enhance productivity, improve decision-making, and drive innovation across numerous sectors. These opportunities are contingent upon responsible development and deployment.

Vision for the Evolution of AI Tools

The future of AI tools promises significant advancements. Enhanced personalization will be a hallmark of future tools, tailoring experiences to individual needs and preferences. The integration of AI with other technologies, such as the Internet of Things (IoT), will create more interconnected and intelligent systems. Furthermore, AI tools will become increasingly sophisticated in handling complex and unstructured data, opening doors to more nuanced and insightful analyses. These advancements will lead to more efficient and impactful applications across a wide range of industries.

Key Advancements in AI Tool Evolution

The evolution of AI tools will be characterized by several key advancements:

- Enhanced Explainability and Trustworthiness: AI tools will become more transparent, enabling users to understand the reasoning behind their outputs. This is crucial for building trust and fostering responsible adoption. Examples include providing detailed explanations of diagnostic results or investment recommendations.

- Specialized AI Tools for Specific Industries: Tailored AI tools will emerge, addressing the unique needs of different sectors. For example, tools designed specifically for predictive maintenance in manufacturing or for personalized medicine in healthcare will gain prominence.

- Integration with Other Technologies: AI tools will increasingly integrate with other technologies, such as IoT devices and cloud platforms, creating more interconnected and intelligent systems. This integration will enable more comprehensive data analysis and more sophisticated applications.

Ethical Considerations

AI tools, while offering remarkable potential, raise significant ethical concerns. Their widespread adoption necessitates careful consideration of potential biases, unintended consequences, and the responsible development and deployment of these technologies. Understanding these implications is crucial for navigating the complex landscape of AI and ensuring its beneficial application.

The development and deployment of AI tools require a proactive approach to ethical considerations. This includes a thorough analysis of potential biases, the identification of potential harms, and the establishment of guidelines for responsible use. This proactive stance helps ensure that AI systems are aligned with societal values and contribute positively to human well-being.

Potential Biases in AI Tools

AI models are trained on data, and if this data reflects existing societal biases, the AI will likely perpetuate and amplify them. For example, if a facial recognition system is trained primarily on images of light-skinned individuals, it may perform less accurately on images of people with darker skin tones. Similarly, algorithms used in loan applications may exhibit bias against certain demographics, leading to discriminatory outcomes.
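A simple first check for the kind of disparity described above is to compute accuracy separately for each group and look at the gap. The sketch below assumes each record carries a group attribute; the function names and the sample records are hypothetical, and a gap on its own does not prove or rule out unfair treatment.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, prediction in records:
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(records):
    """Largest difference in accuracy between any two groups."""
    per_group = accuracy_by_group(records)
    if not per_group:
        return 0.0
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Made-up records: (group, true label, predicted label).
    sample = [
        ("group_1", "match", "match"),
        ("group_1", "match", "match"),
        ("group_1", "no_match", "no_match"),
        ("group_1", "no_match", "no_match"),
        ("group_2", "match", "no_match"),
        ("group_2", "match", "match"),
        ("group_2", "no_match", "match"),
        ("group_2", "no_match", "no_match"),
    ]
    print(accuracy_by_group(sample))
    print(max_accuracy_gap(sample))
```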

Impact of Biases on Different Groups

Biased AI systems can disproportionately affect marginalized groups, leading to unequal access to opportunities and services. This can manifest in various ways, including biased hiring practices, inaccurate medical diagnoses, and unfair criminal justice outcomes. For instance, biased loan applications can hinder financial advancement for minority groups, creating a cycle of disadvantage.

Need for Responsible Development and Deployment

Responsible development and deployment of AI tools necessitate a multi-faceted approach, encompassing transparency, fairness, accountability, and inclusivity. Developers should strive to understand and mitigate biases in their datasets and algorithms. Furthermore, mechanisms for accountability and redress should be established in case of adverse outcomes. This comprehensive approach is crucial to building trust and ensuring that AI systems benefit all members of society.

Framework for Assessing Ethical Implications

A robust framework for evaluating the ethical implications of new AI tools should incorporate several key elements. These elements include:

- Data Collection and Representation: Careful consideration should be given to the data used for training, ensuring that it is representative of the diverse population it will affect and free from known biases.

- Algorithmic Transparency and Explainability: The algorithms used should be transparent and understandable, allowing for an evaluation of potential biases and unintended consequences. “Black box” algorithms are less desirable and raise concerns about accountability.

- Impact Assessment and Mitigation: A thorough assessment of the potential impact of the AI tool on various groups and demographics is necessary. Mitigating any potential harms and ensuring fairness should be a priority.

- Stakeholder Engagement and Consultation: Involving stakeholders, including those who may be affected by the AI tool, in the development and deployment process is crucial. Their input can help identify potential risks and ensure that the AI system aligns with societal values.

- Continuous Monitoring and Evaluation: Regular monitoring and evaluation of the AI tool’s performance are necessary to identify and address emerging biases or unintended consequences.

By incorporating these elements into a comprehensive framework, developers and deployers can create AI systems that are both effective and ethical, benefiting all members of society.

Data Sources for AI Tool Research

Researchers investigating AI tools require a diverse range of data sources to comprehensively evaluate their performance, limitations, and potential applications. Understanding the strengths and weaknesses of various data sets is crucial for drawing reliable conclusions and avoiding biased assessments. Data sources can range from publicly available datasets to proprietary company data, each with its own set of characteristics and challenges.

Publicly Available Datasets

Publicly available datasets play a vital role in AI tool research. These datasets are often used for training and testing AI models, providing a standardized benchmark for comparison across different tools. They offer transparency and reproducibility, enabling researchers to validate findings and compare results against established metrics.

- Image datasets, such as ImageNet and CIFAR-10, are commonly used for training image recognition models. These datasets typically contain thousands or millions of images categorized into various classes, allowing researchers to evaluate the accuracy and efficiency of image processing tools.

- Text datasets, like the Penn Treebank and IMDB datasets, are crucial for natural language processing (NLP) tasks. They encompass a vast amount of text data, allowing for the assessment of tools related to sentiment analysis, language translation, and text summarization. The quality and consistency of these datasets often vary, potentially impacting the validity of results.

- Speech datasets, such as LibriSpeech and TIMIT, offer resources for evaluating speech processing tools. These datasets are frequently used for tasks such as automatic speech recognition and speaker identification, offering a standardized way to measure performance across different models.

Proprietary Datasets and Data Sources

Accessing proprietary datasets often presents a significant challenge for researchers. These datasets, often held by companies, provide a rich source of real-world data that may not be available publicly. Their use necessitates careful consideration of data privacy and licensing agreements.

- Company-specific datasets, used internally by organizations, can contain detailed information about customer interactions, product usage, or financial transactions. These datasets, if accessible, can provide valuable insights into real-world applications and demonstrate how AI tools perform under specific conditions.

- Industry-specific datasets can offer unique data relevant to a specific sector, such as healthcare or finance. These datasets, while often challenging to access, could be crucial for evaluating tools tailored to particular domains. For example, datasets from hospitals may provide detailed patient information, which can be used to evaluate AI tools in medical diagnosis and treatment.

- Data from APIs and Web Scraping: Accessing data from APIs (Application Programming Interfaces) or web scraping allows researchers to collect data from various sources, including social media, news websites, and e-commerce platforms. This data can be valuable for training and evaluating AI tools in diverse domains. However, ethical considerations and legal restrictions regarding data usage should be carefully evaluated. For instance, using data from social media requires obtaining proper consent and respecting user privacy.

Data Collection and Analysis

Effective data collection and analysis are crucial for meaningful AI tool research. Careful planning and meticulous execution of data collection procedures are essential. Researchers need to consider the quality, representativeness, and potential biases within the data.

- Data Cleaning and Preprocessing: Data from various sources may contain inconsistencies, errors, or missing values. Data cleaning and preprocessing techniques are essential to ensure data quality and accuracy before analysis. Common techniques include handling missing data, removing duplicates, and standardizing data formats.

- Data Splitting and Evaluation Metrics: Researchers typically split data into training, validation, and testing sets to evaluate model performance effectively. Appropriate evaluation metrics are crucial for assessing the performance of different AI tools. Common metrics include accuracy, precision, recall, F1-score, and AUC (Area Under the Curve).

- Ethical Considerations in Data Collection and Analysis: Researchers should adhere to ethical principles and guidelines when collecting and analyzing data. Protecting privacy, ensuring informed consent, and avoiding bias in data selection are critical aspects to consider.
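The cleaning and splitting steps above can be sketched with the standard library alone. This is an illustration under simple assumptions: records are `(text, label)` pairs, the 80/10/10 ratio is a common convention and not a requirement, and the function names are made up for this example.

```python
import random


def clean(records):
    """Drop rows with missing values, standardise whitespace, remove duplicates."""
    seen = set()
    cleaned = []
    for text, label in records:
        if text is None or label is None:
            continue  # handle missing data by dropping the row
        text = " ".join(text.split())  # collapse runs of whitespace
        if not text or (text, label) in seen:
            continue  # skip empty rows and exact duplicates
        seen.add((text, label))
        cleaned.append((text, label))
    return cleaned


def split(records, train=0.8, validation=0.1, seed=0):
    """Shuffle with a fixed seed and split into train, validation, and test sets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * validation)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # whatever remains is the test set
    )


if __name__ == "__main__":
    raw = [("good  product", "pos"), ("good product", "pos"),
           (None, "neg"), ("bad product", "neg")]
    print(clean(raw))
```

Fixing the seed keeps the split reproducible, so different tools are compared on the same test set.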

Examples of Datasets

Several notable datasets have been used to train and test AI tools, showcasing the variety and complexity of data sources available. For instance, the ImageNet dataset is extensively used in image recognition research, while the Stanford Sentiment Treebank is widely used for sentiment analysis in natural language processing. Other datasets, like those used for speech recognition or machine translation, offer different perspectives on AI tool performance and application.

Analyzing Tool Limitations

AI tools, while powerful, possess inherent limitations that researchers must acknowledge and address. Understanding these limitations is crucial for developing effective strategies and mitigating potential errors in analysis and application. A critical evaluation of these tools, recognizing their weaknesses, is essential to ensure responsible and impactful deployment.

Bias in Training Data

AI models are trained on vast datasets, and if these datasets reflect existing societal biases, the models will perpetuate and even amplify these biases. For example, if a facial recognition system is trained primarily on images of light-skinned individuals, it may perform poorly on images of individuals with darker skin tones. This can lead to inaccurate or unfair outcomes in applications like law enforcement or security. Careful curation and diversity in training datasets are therefore crucial to mitigating this issue.

Overfitting and Underfitting

Overfitting occurs when a model learns the training data too well, including its noise and outliers, which results in poor generalization to new, unseen data. A model trained on very specific data may excel at that task but fail to generalize to broader applications. Underfitting, conversely, occurs when a model is too simple to capture the underlying patterns in the data, leading to inaccurate predictions. Appropriate model selection, feature engineering, and regularization techniques can help prevent these issues.
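In practice, both problems are often spotted by comparing a model's score on the training data with its score on held-out validation data. The helper below is a rough heuristic sketch: the function name and both thresholds are assumptions chosen for illustration and would need tuning for any real task.

```python
def diagnose_fit(train_score, validation_score, min_score=0.7, max_gap=0.1):
    """Classify a model's fit from its training and validation scores.

    Scores are assumed to lie in [0, 1], higher being better.
    `min_score` and `max_gap` are illustrative thresholds, not standards.
    """
    if train_score < min_score:
        # The model cannot even fit the data it was trained on.
        return "underfitting"
    if train_score - validation_score > max_gap:
        # Strong on training data, much weaker on unseen data.
        return "overfitting"
    return "ok"


if __name__ == "__main__":
    print(diagnose_fit(0.99, 0.70))
    print(diagnose_fit(0.55, 0.54))
    print(diagnose_fit(0.90, 0.88))
```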

Data Dependency and Availability

Many AI tools require large and specific datasets for training and operation. This poses challenges for researchers in domains with limited or inaccessible data. For example, limited historical data for a specific medical condition could limit the effectiveness of a predictive model for that condition. Furthermore, the quality and representativeness of the data can significantly impact the accuracy and reliability of the AI tool. Strategies like data augmentation, transfer learning, and synthetic data generation can help address these limitations.

Explainability and Interpretability

Many AI models, particularly deep learning models, are “black boxes,” making it difficult to understand how they arrive at their conclusions. This lack of explainability can be problematic in sensitive applications, where understanding the reasoning behind a decision is crucial, and it makes errors or biases in the system harder to identify. Methods like attention mechanisms and feature importance analysis can improve explainability, enabling researchers to understand the logic behind the model’s output.
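One model-agnostic form of feature importance analysis is permutation importance: shuffle one input column at a time and measure how much the model's accuracy drops. The sketch below is a minimal version under stated assumptions: rows are tuples of feature values, `predict` is any callable standing in for a trained model, and the toy data is made up.

```python
import random


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Accuracy drop when each feature column is shuffled in turn.

    A larger drop suggests the model relies more on that feature.
    """
    rng = random.Random(seed)

    def accuracy(data):
        hits = sum(1 for row, label in zip(data, labels) if predict(row) == label)
        return hits / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)  # break the link between feature j and the labels
        permuted = [row[:j] + (value,) + row[j + 1:]
                    for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(permuted))
    return importances


if __name__ == "__main__":
    # Toy model that only looks at the first feature.
    rows = [(i % 2, (i * 7) % 5) for i in range(20)]
    labels = [row[0] for row in rows]
    print(permutation_importance(lambda row: row[0], rows, labels, n_features=2))
```

Because the toy model ignores the second feature, shuffling that column cannot change its predictions, so its importance comes out as zero.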

Computational Resources

Training and running complex AI models can be computationally intensive, requiring substantial processing power and memory. This can be a barrier for researchers with limited access to high-performance computing resources. Techniques like model compression, quantization, and distributed training can help reduce the computational burden. Cloud computing platforms and specialized hardware can alleviate these limitations.
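To make quantization concrete, the sketch below shows symmetric linear quantization of a list of weights to signed 8-bit integers with a single scale factor. It is a simplified illustration in plain Python: the function names are made up, and production frameworks typically add per-channel scales, zero points, and calibration.

```python
def quantize(weights, bits=8):
    """Map floats to signed integers in [-qmax, qmax] with one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / qmax if max_abs else 1.0
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    original = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize(original)
    restored = dequantize(q, scale)
    worst = max(abs(a - b) for a, b in zip(original, restored))
    print(q, scale, worst)
```

Each weight is stored in one byte instead of four or eight, at the cost of a rounding error of at most half the scale per weight.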

Ethical Considerations and Societal Impact

AI tools can have unintended consequences, such as perpetuating existing inequalities or creating new forms of discrimination. Ethical considerations and potential societal impacts should be evaluated thoroughly before deploying AI tools. Careful consideration of potential biases, fairness, and accountability is critical. The use of AI in autonomous weapons systems, for example, raises significant ethical concerns.

Concluding Thoughts

In conclusion, research on different AI tools reveals a dynamic landscape of technological advancement. While these tools offer remarkable potential, careful consideration of their limitations, ethical implications, and future trends is crucial. This research provides a comprehensive framework for understanding and utilizing AI tools responsibly and effectively.
