How AI Is Revolutionizing the Computer Industry and Its Future Impact

Forget clunky interfaces and slow processes. AI is breathing new life into the tech world, from the intricate circuits powering our devices to the sleek software that runs them. Imagine a future where computers anticipate your needs, adapt to your style, and solve problems faster than you can blink.

This isn’t science fiction; it’s the dawn of a new era in computing, fueled by intelligent algorithms and innovative hardware.

This article dives deep into the groundbreaking ways AI is transforming the computer industry. We’ll explore how AI is impacting everything from the microchips in your phone to the complex software powering cloud services. Get ready to witness how AI is not just improving existing systems but also paving the way for entirely new possibilities in the digital realm.

Introduction to AI in Computing

Artificial intelligence (AI) is rapidly transforming the computer industry, moving beyond theoretical concepts to become an integral part of everyday computing. From personalized recommendations to sophisticated image recognition, AI is quietly revolutionizing how we interact with technology and solve complex problems. This pervasive influence is evident in a wide range of applications, fundamentally altering the landscape of software development, hardware design, and user experience.

AI’s impact extends far beyond the obvious; it’s driving innovation in areas like cybersecurity, data analysis, and even the creation of new types of computer hardware. The fundamental principles of AI, including machine learning and deep learning, are the engines powering these advancements, enabling computers to learn from data, identify patterns, and make predictions. This shift is not just about faster processors or more memory; it’s about fundamentally rethinking how computers work and interact with the world.

Current Impact on the Computer Industry

AI is fundamentally changing how we use and interact with computers. It’s influencing every aspect of the computing experience, from the applications we use to the hardware we interact with. AI-powered tools are automating tasks, enhancing user experiences, and improving the overall efficiency of computing systems. This transformation is occurring across various application domains.

Key Areas of Computing Where AI is Making a Difference

AI is significantly impacting numerous computing domains. Its applications are becoming more pervasive, affecting everything from how we search the internet to how we design and manufacture computer chips. Machine learning algorithms are powering intelligent search engines, personalized recommendations, and sophisticated image recognition tools. This is just a glimpse of the broad reach of AI in the computing world.

Fundamental Principles of AI Driving Change

The core principles driving these advancements are machine learning and deep learning. Machine learning algorithms allow computers to learn from data without explicit programming. Deep learning, a subset of machine learning, uses artificial neural networks with multiple layers to process complex data and extract intricate patterns. These sophisticated techniques allow computers to tackle tasks previously considered impossible for machines to perform, like understanding human language and recognizing images with exceptional accuracy.

“The ability to learn from data without explicit programming is a key differentiator of AI in modern computing.”
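To make "learning from data" concrete, here is a minimal sketch in plain Python. It is an illustration only, not how any production system is built: the function name `fit_line`, the learning rate, and the sample data are all assumptions chosen for the example. The program is never told the rule behind the data; it recovers the slope and intercept by repeatedly nudging two numbers to reduce its prediction error (gradient descent on mean squared error).

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Learn w and b so that w * x + b approximates y, using gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical training data generated by the hidden rule y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

Deep learning applies the same idea at much larger scale, adjusting many parameters across many layers instead of two numbers.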

Examples of AI-Powered Tools and Software

AI-powered tools and software are already integrated into various computer applications. These tools offer enhanced functionality and improved user experiences. Examples include: smart assistants like Siri and Alexa, image recognition software in photo editing apps, spam filters in email clients, and personalized recommendations on streaming services. These examples highlight the broad application of AI in daily computer interactions.

AI in Computing Applications: A Detailed Overview

This table provides a concise overview of AI’s applications in computing, outlining the techniques used, benefits, and challenges.

| Application | AI Technique Used | Benefits | Challenges |
|---|---|---|---|
| Image Recognition | Convolutional Neural Networks (CNNs) | Improved accuracy in object detection, image classification, and analysis. | Potential for bias in training data, high computational cost. |
| Natural Language Processing (NLP) | Recurrent Neural Networks (RNNs), Transformers | Enhanced understanding and processing of human language, enabling chatbots, translation services, and text summarization. | Handling ambiguity and nuances in human language, ensuring ethical and responsible use of language models. |
| Predictive Maintenance | Machine Learning algorithms | Proactive identification of potential equipment failures, optimizing maintenance schedules, and reducing downtime. | Data collection and analysis requirements, potential for misinterpretation of data leading to inaccurate predictions. |
| Cybersecurity | Machine Learning algorithms | Improved detection and prevention of cyber threats, including malware and phishing attacks. | Balancing security with privacy concerns, ensuring the model’s adaptability to evolving threats. |

AI’s Impact on Hardware

AI is rapidly transforming the computer industry, and this revolution isn’t just about software. The very architecture of our computers, from the silicon chips to the cooling systems, is being profoundly influenced by the rise of artificial intelligence. This impact is evident in how AI is shaping the design and development of hardware, optimizing performance, and paving the way for entirely new classes of specialized machines.

The evolution of hardware is no longer solely driven by Moore’s Law. AI is now a critical factor, pushing the boundaries of what’s possible in terms of speed, efficiency, and specialized functionalities. This shift is not just about faster processors; it encompasses a holistic reimagining of the entire computing ecosystem.

AI-Driven Hardware Design

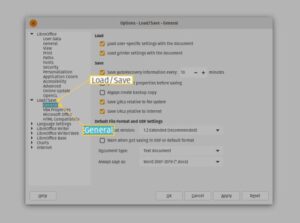

AI is streamlining the complex design process of computer hardware. Algorithms can analyze vast datasets of simulation results, material properties, and manufacturing constraints to identify optimal designs. This leads to more efficient chip layouts, better heat dissipation, and more compact hardware. For example, AI-powered tools are now used to design circuit boards with reduced signal interference and improved power efficiency.

This approach allows engineers to explore a significantly wider design space, surpassing the limitations of traditional methods.

Optimizing Hardware Components

AI is significantly improving the performance and efficiency of various hardware components. In processors, AI algorithms can dynamically adjust clock speeds and power consumption based on real-time workload demands. This allows for greater energy efficiency, preventing unnecessary energy waste. Similarly, AI can optimize memory allocation, reducing latency and improving overall system responsiveness. The use of AI in memory management systems, for instance, can enhance the performance of large-scale data processing tasks.
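As a rough illustration of workload-aware clock scaling, the sketch below predicts the next load from recent samples and picks the lowest clock step that should cope with it. Everything here is assumed for the example: the clock steps in `FREQS_MHZ`, the function names, and the 80% utilization target are hypothetical, and the exponentially weighted average is a simple stand-in for the learned predictor a real system might use.

```python
FREQS_MHZ = [800, 1600, 2400, 3200]  # hypothetical available clock steps


def predict_load(history, alpha=0.5):
    """Exponentially weighted average of utilization samples (0.0 to 1.0)."""
    estimate = history[0]
    for sample in history[1:]:
        estimate = alpha * sample + (1 - alpha) * estimate
    return estimate


def choose_frequency(history, target_util=0.8):
    """Pick the lowest clock step expected to keep utilization under the target.

    Utilization samples are assumed to be measured relative to the top step.
    """
    demand_mhz = predict_load(history) * FREQS_MHZ[-1]
    for freq in FREQS_MHZ:
        if demand_mhz <= freq * target_util:
            return freq
    return FREQS_MHZ[-1]  # demand exceeds every step: run flat out


print(choose_frequency([0.1, 0.1, 0.1]))  # 800
print(choose_frequency([0.2, 0.4]))       # 1600
print(choose_frequency([0.9, 0.9, 0.9]))  # 3200
```

The design choice to illustrate is the trade-off: a light predicted load lets the chip drop to a slower, cooler step, while a heavy one keeps it at full speed.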

The Future of Computer Chips

AI is reshaping the future of computer chips, moving beyond traditional architectures. New chip designs are emerging, specifically tailored for AI tasks, with features like dedicated hardware accelerators for deep learning operations. These specialized chips are often more energy-efficient and faster at performing AI-intensive computations compared to general-purpose processors. This trend is already evident in the development of hardware optimized for tasks like image recognition and natural language processing.

Specialized Hardware for AI Tasks

The demand for specialized hardware for AI tasks is rapidly increasing. This includes field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs) tailored for specific AI workloads. These custom designs offer significant performance improvements compared to general-purpose hardware when performing AI-related computations. Companies are increasingly investing in the development of such specialized hardware to meet the demands of AI applications in areas like autonomous vehicles, medical imaging, and financial modeling.

AI’s Impact on Hardware: A Summary

| Hardware Component | AI Influence | Expected Performance Improvement | Future Trends |
|---|---|---|---|
| Processors | Dynamically adjusting clock speeds and power consumption | Increased energy efficiency, reduced latency | Specialized AI processors, neuromorphic chips |
| Memory | Optimized memory allocation, reduced latency | Improved system responsiveness, faster data retrieval | Adaptive memory architectures |
| Chip Design | Analysis of vast datasets, simulation results, material properties | Improved efficiency, compact designs, reduced signal interference | Custom-designed AI chips, FPGAs, and ASICs |
| Cooling Systems | Optimizing thermal management | Enhanced heat dissipation, extended lifespan | AI-driven predictive maintenance |

AI’s Role in Software Development

AI is rapidly transforming the software development landscape, moving beyond simple automation to a more profound level of intelligent assistance. It’s no longer just about faster coding; AI is fundamentally changing how software engineers approach their work, leading to more efficient, adaptable, and innovative solutions. From generating code snippets to meticulously testing and debugging complex systems, AI is proving to be an invaluable partner in the development process.

Accelerating Software Development Processes

AI is streamlining software development in multiple ways. By automating repetitive tasks, AI frees up engineers to focus on higher-level design and problem-solving. This shift in focus leads to increased productivity and a faster time to market for new software applications. Tools powered by AI can analyze codebases, identify potential errors, and suggest improvements, all significantly accelerating the development lifecycle.

The result is faster development cycles and the ability to create more robust software solutions in a shorter time frame.

Automating Tasks and Improving Efficiency

AI is automating many tedious and time-consuming tasks that software engineers traditionally handled manually. This automation includes tasks like code generation, testing, and documentation. AI tools can analyze existing code, identify patterns, and generate new code snippets, significantly reducing the amount of manual coding required. This automated assistance allows engineers to concentrate on the more complex aspects of software development, leading to greater efficiency and productivity.

AI can also automate repetitive testing procedures, ensuring that the software functions as intended and catching potential bugs earlier in the development process.

AI for Code Generation, Testing, and Debugging

AI-powered code generation tools are becoming increasingly sophisticated, capable of producing code that adheres to specific coding styles and functionalities. These tools can assist developers in generating code for common tasks, reducing the time spent on manual coding. AI also enhances the software testing process by automating the creation of test cases and running them automatically. This leads to more comprehensive testing and faster identification of bugs.

Moreover, AI tools can analyze code to identify potential errors and suggest fixes, accelerating the debugging process and preventing costly errors later in the lifecycle.

Creating User-Friendly and Adaptable Software

AI is not just about speeding up development; it’s about creating better software. By analyzing user behavior and preferences, AI can inform the design and development of more user-friendly interfaces. AI-driven tools can personalize the user experience, making the software more adaptable to individual needs. This personalized approach leads to software that is more intuitive and easier to use, ultimately enhancing user satisfaction.

Table: AI’s Impact on Software Development Tasks

| Software Development Task | AI Application | Efficiency Gain | Future Potential |
|---|---|---|---|
| Code Generation | AI-powered code generation tools | Significant reduction in manual coding time; increased speed of development | Automated generation of entire modules or components; creation of specialized code for specific domains. |
| Software Testing | Automated testing frameworks utilizing AI | Enhanced test coverage; early detection of bugs | Adaptive testing based on real-time user feedback; prediction of potential failure points. |
| Debugging | AI-powered static analysis and dynamic debugging tools | Faster identification and resolution of bugs; reduced time spent on debugging | AI-driven root cause analysis of complex software issues. |
| User Interface Design | AI tools for analyzing user behavior and preferences | More user-friendly interfaces; improved user experience | Personalized software tailored to individual user needs; AI-powered adaptive interfaces. |

AI-Driven Innovations in Specific Computing Areas

AI isn’t just changing how we think about software; it’s fundamentally altering the very architecture of computing itself. From the cloud’s ever-expanding digital sky to the intricate fortress of cybersecurity, AI is weaving its way into every nook and cranny of the digital world. This evolution isn’t just about speed and efficiency; it’s about unlocking entirely new possibilities for how we interact with technology.

AI is not simply a tool; it’s a transformative force. It’s reshaping existing paradigms and paving the way for groundbreaking new computing methods. This transformation is particularly evident in specific computing areas, where AI is not just assisting but actually revolutionizing the landscape.

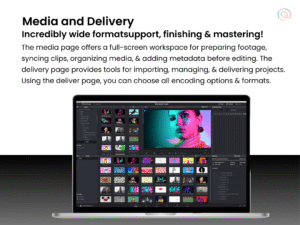

AI in Cloud Computing

Cloud computing is experiencing a surge in efficiency and scalability thanks to AI. AI-powered tools are optimizing resource allocation, predicting demand, and automating tasks. This translates into reduced costs, improved performance, and enhanced user experience. For example, AI algorithms can dynamically adjust server capacity, ensuring optimal performance without unnecessary overhead. This proactive approach is crucial for handling fluctuating workloads and guaranteeing a smooth user experience, regardless of the peak demand.
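The capacity-adjustment idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not any cloud provider's actual autoscaler: the function names, the per-server capacity of 100 requests per minute, and the 20% headroom are hypothetical, and the moving-average forecast stands in for a trained demand model.

```python
import math


def forecast_next(requests_per_min, window=3):
    """Naive forecast: the mean of the most recent `window` observations."""
    recent = requests_per_min[-window:]
    return sum(recent) / len(recent)


def servers_needed(requests_per_min, capacity_per_server=100,
                   headroom=0.2, min_servers=1):
    """Servers required to serve the forecast demand plus a safety margin."""
    expected = forecast_next(requests_per_min) * (1 + headroom)
    return max(min_servers, math.ceil(expected / capacity_per_server))


# Hypothetical traffic history, in requests per minute.
print(servers_needed([100, 200, 300]))  # 3
print(servers_needed([0, 0, 0]))        # 1 (never scale below the minimum)
```

Swapping `forecast_next` for a better predictor changes how early the system scales up, while the headroom parameter controls how much spare capacity it pays for.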

AI in Cybersecurity

Cybersecurity is a constantly evolving battleground, and AI is proving to be a powerful ally in the fight. AI algorithms can detect anomalies in network traffic and identify potential threats with unprecedented speed and accuracy. Sophisticated machine learning models are being trained on massive datasets of known attacks and patterns to identify and classify threats in real-time, enabling faster response times and mitigation strategies.

This proactive approach is critical in preventing breaches and safeguarding sensitive data.
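A very small example of anomaly detection on network traffic is shown below. It is a statistical sketch rather than a trained machine learning model: it flags any reading that sits more than three standard deviations from a baseline. The function name `find_anomalies`, the threshold, and the traffic numbers are assumptions made up for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return indices in `observed` whose z-score against the baseline
    exceeds the threshold."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different is unusual.
        return [i for i, v in enumerate(observed) if v != mu]
    return [i for i, v in enumerate(observed)
            if abs(v - mu) / sigma > threshold]


# Hypothetical requests-per-second readings during normal operation.
baseline = [100, 102, 98, 101, 99, 100, 103, 97]
# New readings; the third is a sudden spike.
observed = [101, 99, 250, 104]

print(find_anomalies(baseline, observed))  # [2]
```

Real systems learn far richer notions of "normal" across many signals at once, but the principle is the same: model expected behavior, then flag what deviates from it.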

AI in Data Analysis

Data analysis is undergoing a dramatic shift with the integration of AI. AI-powered tools are streamlining the process of extracting insights from vast datasets, automating tasks, and providing more accurate predictions. This leads to quicker decision-making, improved strategic planning, and greater understanding of complex patterns. Imagine a business using AI to analyze sales trends, customer behavior, and market fluctuations to optimize their strategies and gain a competitive edge.

| Computing Area | AI Innovation | Impact on Users/Businesses |
|---|---|---|
| Cloud Computing | AI-powered resource optimization, predictive demand modeling, automated task execution | Reduced costs, improved performance, enhanced user experience |
| Cybersecurity | AI-driven threat detection, anomaly detection, real-time threat classification | Enhanced security posture, faster response times, reduced risk of breaches |
| Data Analysis | Automated data extraction, insight generation, accurate predictions | Quicker decision-making, improved strategic planning, deeper understanding of patterns |

Future of AI in Computing

The digital landscape is rapidly evolving, and AI is poised to be the driving force behind this transformation. Imagine a future where computers seamlessly anticipate your needs, learn from your interactions, and adapt to your unique preferences. This isn’t science fiction; it’s the very real future of computing, and AI is the key. From the hardware that powers our devices to the software that shapes our experiences, AI is weaving itself into the fabric of the digital world.

A Seamlessly Integrated Future

The future of computing, intertwined with AI, promises a world where devices anticipate user needs, learn from interactions, and adapt to individual preferences. Imagine a computer that understands your workflow and suggests the optimal tools for the task at hand, or a smart home that learns your routines and adjusts lighting, temperature, and security settings accordingly. This level of personalized and anticipatory intelligence is the essence of AI-driven computing.

The emphasis shifts from explicit instructions to implicit understanding, where the system understands the “why” behind the user’s actions.

Expected Trends and Advancements

Several key trends are shaping the future of AI-powered computing. Increased processing power, fueled by advancements in hardware and specialized chips designed for AI tasks, will allow for more complex and sophisticated algorithms. Furthermore, the development of more efficient and adaptable machine learning models will lead to faster and more accurate predictions and decisions. We’ll see a rise in explainable AI, where the reasoning behind AI decisions becomes transparent and understandable, fostering trust and wider adoption.

Finally, the integration of AI into everyday devices, from smartphones to wearables, will become increasingly seamless, blurring the lines between the physical and digital worlds.

Potential Challenges and Limitations

Despite the immense potential, AI in computing faces significant challenges. Data bias can lead to unfair or discriminatory outcomes, requiring careful data curation and model training. The complexity of some AI systems can make them difficult to debug and maintain, necessitating robust testing and validation procedures. Ensuring the ethical implications of AI are addressed is crucial, including considerations of privacy, security, and accountability.

Finally, the potential for job displacement due to automation needs careful consideration and proactive workforce adaptation strategies.

Impact on Jobs and the Workforce

The integration of AI into the computing industry will undoubtedly impact the workforce. While some jobs may become obsolete, new roles and opportunities will emerge. The demand for professionals skilled in AI development, implementation, and ethical considerations will increase. Training programs and educational initiatives will be critical to equipping the workforce for the future of AI. Reskilling and upskilling programs are essential to ensure a smooth transition and harness the potential of AI for positive economic and societal impact.

Furthermore, human oversight and expertise will remain critical in the development and deployment of AI systems to mitigate risks and ensure responsible use.

Future Trend Analysis

| Future Trend | Description | Potential Benefits | Potential Drawbacks |
|---|---|---|---|
| AI-driven personalization | AI systems will adapt to individual preferences and needs, providing tailored experiences. | Enhanced user satisfaction, increased efficiency, improved decision-making. | Potential for privacy concerns, data overload, lack of diversity in personalization. |
| Autonomous systems | AI will power systems that can operate independently, reducing human intervention. | Increased efficiency, reduced errors, expansion into new fields. | Security risks, lack of accountability, potential for job displacement. |
| Explainable AI | AI systems will become more transparent, allowing users to understand the reasoning behind decisions. | Increased trust, improved accountability, better decision-making. | Potential complexity in explanation, trade-off between explanation and performance. |
| AI-enhanced cybersecurity | AI will play a vital role in detecting and mitigating cyber threats. | Enhanced security, reduced risk of breaches, faster response times. | Potential for misuse of AI in cyberattacks, overreliance on AI systems. |

Ethical Considerations of AI in Computing


The rapid advancement of AI in the computer industry brings exciting possibilities, but also significant ethical challenges. As AI systems become more sophisticated and integrated into various aspects of our lives, we must carefully consider the potential societal and individual impacts, especially the potential for bias and discrimination. These ethical considerations are crucial to ensure AI is developed and deployed responsibly.

The development and deployment of AI systems necessitate a profound understanding of the ethical implications they carry. This understanding extends beyond technical proficiency, encompassing the social, economic, and philosophical consequences of these systems. It’s not enough to build intelligent machines; we must also build ethical frameworks to guide their actions.

Potential Biases in AI Systems

AI systems are trained on vast datasets, and if these datasets reflect existing societal biases, the resulting models can inherit and even amplify those biases. This can lead to discriminatory outcomes in areas like loan applications, hiring processes, and even criminal justice. For instance, facial recognition systems trained primarily on images of light-skinned individuals may perform poorly on darker skin tones, leading to misidentification and unfair treatment.
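One simple way to spot this kind of problem is to measure a model's accuracy separately for each group instead of reporting a single overall number. The sketch below assumes hypothetical evaluation records of the form (group, predicted label, true label); the function names and the data are invented for illustration, and a real fairness audit would look at more metrics than accuracy alone.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Hypothetical evaluation results for two groups.
records = [
    ("group_a", "match", "match"), ("group_a", "no_match", "no_match"),
    ("group_a", "match", "match"), ("group_a", "no_match", "no_match"),
    ("group_b", "match", "no_match"), ("group_b", "match", "match"),
    ("group_b", "no_match", "match"), ("group_b", "no_match", "no_match"),
]

print(accuracy_by_group(records))  # {'group_a': 1.0, 'group_b': 0.5}
print(accuracy_gap(records))       # 0.5
```

An overall accuracy of 75% would hide the fact that this hypothetical model is right every time for one group and only half the time for the other.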

Societal Effects of AI-Driven Systems

The widespread adoption of AI-driven systems can have profound societal effects. Job displacement due to automation is a major concern, particularly in sectors heavily reliant on routine tasks. The potential for increased inequality and the concentration of power in the hands of a few powerful companies also warrants careful consideration. The lack of transparency in some AI systems makes it difficult to understand how decisions are made, potentially undermining trust and accountability.

Ethical Dilemmas and Challenges

AI systems present a range of ethical dilemmas and challenges. The use of AI in autonomous weapons systems raises profound questions about accountability and the potential for unintended consequences. The collection and use of personal data by AI systems necessitates robust privacy protections. The potential for AI to be used for malicious purposes, such as deepfakes or targeted misinformation campaigns, further highlights the need for responsible development and deployment.

Potential Solutions to Address Concerns

Addressing these ethical concerns requires a multi-faceted approach. This includes developing datasets that are more representative of diverse populations, incorporating fairness-aware algorithms into AI systems, and fostering greater transparency in AI decision-making processes. Regulations and guidelines are crucial to establish clear standards and expectations for the development and deployment of AI systems.

Ethical Considerations List

- Bias in Data: AI systems trained on biased datasets can perpetuate and amplify existing societal biases, leading to discriminatory outcomes.

- Job Displacement: Automation through AI can displace workers in various sectors, potentially increasing economic inequality.

- Privacy Concerns: The collection and use of personal data by AI systems raise significant privacy concerns, requiring robust protections.

- Lack of Transparency: The opacity of some AI decision-making processes can undermine trust and accountability.

- Autonomous Weapons Systems: The development and use of AI in autonomous weapons systems pose serious ethical dilemmas regarding accountability and potential for unintended consequences.

- Misinformation and Manipulation: AI can be used for malicious purposes, such as the creation of deepfakes and the spread of targeted misinformation campaigns.

Case Studies of AI Revolutionizing Computing

AI isn’t just a buzzword anymore; it’s fundamentally reshaping the computer industry. From optimizing hardware to automating software development, AI is proving its transformative power. We’ll delve into concrete examples of companies that have successfully integrated AI, examining their triumphs and tribulations to understand the lessons learned.

Google’s AI-Powered Search

Google’s search algorithm, a cornerstone of its business, has been continuously refined by AI. The initial stages focused on keyword matching, but today’s search results leverage sophisticated AI models that understand context, intent, and user preferences. This evolution allows for more accurate and relevant search results, a significant improvement for users.

Challenges faced by Google included:

- Handling the vast volume of data and queries.

- Ensuring the accuracy and neutrality of search results, avoiding bias and misinformation.

- Keeping up with the ever-changing language and search trends.

Solutions implemented by Google included:

- Developing sophisticated machine learning models capable of processing and analyzing massive datasets.

- Implementing quality control measures to verify the accuracy and neutrality of search results.

- Continuously updating and refining their AI models to adapt to the ever-evolving digital landscape.

Positive and Negative Impacts of Google’s AI

The positive impact is undeniable: faster, more relevant search results, personalized experiences, and new avenues for information discovery. However, the potential for bias in algorithms and the ethical implications of data collection remain concerns.

Nvidia’s AI-Accelerated Graphics Processing Units (GPUs)

Nvidia, a leading graphics card manufacturer, recognized the burgeoning potential of AI and adapted its hardware to meet the demands of AI workloads. Their GPUs, optimized for parallel processing, became crucial components in training and deploying AI models. This innovation led to significant performance gains in AI applications, from image recognition to natural language processing.

Challenges faced by Nvidia included:

- Keeping pace with the evolving demands of AI, constantly upgrading their hardware to maintain its leading edge.

- Ensuring compatibility across various AI frameworks and applications.

- Maintaining affordability and accessibility for a wide range of users.

Solutions implemented by Nvidia included:

- Investing heavily in research and development to stay ahead of the curve in GPU architecture.

- Collaborating with AI developers and researchers to ensure compatibility and performance optimization.

- Strategically pricing their GPUs to cater to different user segments, from individual developers to large corporations.

Positive and Negative Impacts of Nvidia’s GPUs

The positive impact is clear: acceleration of AI research and development, increased processing speeds, and a boost in the capabilities of AI-powered applications. However, the potential for increased energy consumption and the widening gap between high-end and entry-level hardware need consideration.

Amazon’s AI-Powered Cloud Computing Services

Amazon Web Services (AWS) leverages AI to enhance its cloud computing offerings. Their AI services, such as machine learning APIs and pre-trained models, provide tools for businesses to develop and deploy AI solutions without significant in-house expertise.

Challenges faced by Amazon included:

- Maintaining the security and privacy of vast amounts of user data.

- Balancing scalability and performance for diverse and ever-growing customer needs.

- Staying ahead of the rapidly evolving landscape of AI technologies.

Solutions implemented by Amazon included:

- Implementing robust security measures to protect customer data.

- Investing in infrastructure upgrades to enhance scalability and performance.

- Developing a comprehensive suite of AI services, making them accessible and usable for a broad range of users.

Positive and Negative Impacts of Amazon’s AI

The positive impact includes enhanced scalability and accessibility of AI solutions for businesses, reduced development costs, and streamlined workflows. However, the reliance on third-party AI services may raise concerns about vendor lock-in and potential biases within the pre-trained models.

Lessons Learned

These case studies highlight the critical importance of proactive R&D, strategic partnerships, and a user-centric approach to successfully integrate AI into computing.

| Company | AI Implementation | Results/Impact |
|---|---|---|
| Google | AI-powered search | More accurate, relevant, and personalized search results |
| Nvidia | AI-accelerated GPUs | Increased performance and capabilities of AI applications |
| Amazon | AI-powered cloud services | Enhanced scalability, accessibility, and reduced development costs for AI solutions |

Illustrative Examples of AI in Computing

AI is rapidly transforming the computer industry, impacting everything from how we design hardware to how we create software. These innovations are driven by sophisticated algorithms and vast datasets, leading to exciting new possibilities and potential challenges. This section will illustrate AI in action across various computing applications.

AI-Powered Image Recognition in Photo Editing

Imagine a photo editing app that automatically identifies objects in a picture, suggesting adjustments like cropping, filters, or even adding creative elements. AI algorithms, particularly convolutional neural networks (CNNs), analyze the image’s pixels to identify patterns and classify objects. For example, the app might recognize a cat, a dog, or a sunset. Based on this recognition, it could suggest filters that enhance the image’s features.

This process leverages machine learning models trained on massive datasets of images. The app learns to associate specific visual features with predefined categories, allowing it to make intelligent suggestions. The result is a more intuitive and efficient photo editing experience. Users can save time and achieve better results without needing advanced photo editing skills.
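The core operation inside a CNN is sliding a small grid of weights (a kernel) across the image and summing the products at each position. The plain-Python sketch below shows that single step on a tiny made-up image. The kernel here is hand-written to respond to vertical edges, whereas a real CNN learns its kernel values from training data; the function name and the sample values are assumptions for illustration.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products
    at each position, as a CNN layer does."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A tiny grayscale image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-written kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

print(convolve2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0]]
```

The output is largest exactly where the image changes from dark to bright. Stacking many such learned filters is what lets a network build up from edges to shapes to whole objects like a cat or a sunset.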

Key Advantages: Automation of tasks, improved image quality, and a better user experience.

Potential Drawbacks: Accuracy issues with complex images or unusual situations, potential for bias in the training data affecting the results. Privacy concerns regarding the data used to train the models could also be an issue.

AI-Driven Personalized Learning Platforms

Consider an online learning platform that tailors lessons to each student’s individual learning style and pace. AI algorithms analyze student performance data, identifying strengths, weaknesses, and learning patterns. Based on this analysis, the platform dynamically adjusts the curriculum, providing extra support where needed and accelerating progress in areas of strength.

AI algorithms like recommendation systems and natural language processing (NLP) can analyze student interactions with the platform, including quiz scores, time spent on different modules, and questions asked. This data is used to create personalized learning paths that optimize knowledge retention and understanding. The platform can adjust difficulty levels, offer supplementary materials, or even suggest alternative learning approaches.
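The difficulty-adjustment step can be illustrated with a short rule-based sketch. This is a deliberately simple stand-in for the learned models such a platform might use: the function name, the 1 to 5 difficulty scale, and the score thresholds are all hypothetical.

```python
def next_difficulty(current, recent_scores, low=0.6, high=0.85,
                    min_level=1, max_level=5):
    """Raise, lower, or hold the difficulty level based on the average
    of recent quiz scores (each between 0.0 and 1.0)."""
    average = sum(recent_scores) / len(recent_scores)
    if average >= high:
        return min(max_level, current + 1)  # student is ready for more
    if average < low:
        return max(min_level, current - 1)  # student needs extra support
    return current                          # current level is a good fit


print(next_difficulty(3, [0.9, 0.95]))  # 4
print(next_difficulty(3, [0.4, 0.5]))   # 2
print(next_difficulty(3, [0.7, 0.7]))   # 3
```

A production system would weigh more signals, such as time spent per module and the questions a student asks, but the feedback loop has the same shape: observe performance, then adapt the next lesson.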

Key Advantages: Improved learning outcomes, greater student engagement, and a more flexible learning environment.

Potential Drawbacks: Potential for exacerbating existing inequalities if the system isn’t properly designed and monitored. Over-reliance on data analysis could lead to a loss of human interaction and creativity in the learning process.

AI-Enhanced Fraud Detection in E-commerce

Imagine an e-commerce platform that automatically flags suspicious transactions in real-time. AI algorithms analyze various transaction details, including location, payment method, and purchasing history, to identify patterns associated with fraudulent activities. For example, an unusual number of purchases from a new account in a short time frame might trigger an alert.

Machine learning algorithms, specifically anomaly detection models, are trained on vast datasets of legitimate and fraudulent transactions. The model learns to recognize subtle anomalies in transaction behavior. When a transaction deviates significantly from the learned patterns, the platform triggers an alert, allowing the merchant to intervene. This process can involve multiple layers of analysis, integrating various data sources for a more comprehensive view of the transaction.
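The example of a new account making many purchases in a short time can be sketched as a sliding-window check. This is a fixed rule, not a trained anomaly detection model, and the class name `VelocityCheck`, the limit of three purchases, and the ten-minute window are assumptions chosen for illustration. It also assumes purchases arrive in time order.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flag accounts that exceed a purchase limit within a time window."""

    def __init__(self, max_purchases=3, window_seconds=600):
        self.max_purchases = max_purchases
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # account_id -> purchase timestamps

    def is_suspicious(self, account_id, timestamp):
        """Record a purchase and report whether the account is over the limit."""
        times = self._recent[account_id]
        times.append(timestamp)
        # Drop purchases that have fallen out of the window.
        while times and timestamp - times[0] > self.window_seconds:
            times.popleft()
        return len(times) > self.max_purchases


check = VelocityCheck()
# Hypothetical purchases from one account, timestamps in seconds.
flags = [check.is_suspicious("new-account", t) for t in (0, 60, 120, 180)]
print(flags)  # [False, False, False, True]
```

A learned model replaces the hand-picked limit with patterns inferred from historical transactions, which is also where the false positives discussed below come from: any threshold, fixed or learned, will sometimes flag a legitimate shopper.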

Key Advantages: Increased security, reduced financial losses, and a more secure shopping experience for customers.

Potential Drawbacks: False positives could lead to legitimate transactions being flagged as fraudulent, causing inconvenience to customers. The complexity of fraud patterns can make it difficult to develop models that can accurately detect all types of fraud. The ethical implications of using AI to potentially deny services or access to individuals need to be considered carefully.

Last Word

In conclusion, the revolution driven by AI in the computer industry is nothing short of phenomenal. From supercharged hardware to smarter software, AI is redefining what’s possible. While challenges remain, the future of computing looks brighter than ever, thanks to the relentless innovation and transformative power of AI. The impact on jobs and society is undeniable, and it’s a conversation worth having as we navigate this exciting new frontier.
