What Is a Neural Processing Unit (NPU)?

What is a neural processing unit (NPU)? An NPU is a specialized processor designed to handle the calculations required for deep learning and other machine learning workloads. For these workloads, NPUs can offer significantly better performance per watt than traditional CPUs and, in many inference scenarios, than GPUs. They are quickly becoming essential components in a wide range of applications, from autonomous vehicles to mobile devices.

NPUs excel at handling the intricate mathematical operations inherent in neural networks. Their architecture is optimized for these types of computations, leading to remarkable speed and efficiency. This specialized approach contrasts sharply with the more general-purpose designs of CPUs and GPUs, resulting in superior performance in specific application areas. Understanding the nuances of NPU design and its practical applications is crucial to appreciating the advancements in modern computing.

Introduction to Neural Processing Units (NPUs)

Neural Processing Units (NPUs) are specialized hardware accelerators designed for performing complex computations involved in artificial intelligence (AI) and machine learning (ML) tasks. They excel at handling the computationally intensive operations required for training and deploying deep learning models. This specialization makes them a crucial component in modern computing architectures focused on AI applications.

NPUs are fundamentally different from traditional processors like CPUs and GPUs, offering tailored architectures for neural network operations. Their purpose is to drastically improve the speed and efficiency of AI workloads, enabling the development and deployment of more sophisticated AI models.

Defining a Neural Processing Unit (NPU)

A Neural Processing Unit (NPU) is a specialized electronic circuit designed for accelerating the computations required by artificial neural networks. Its architecture is optimized for the specific mathematical operations found in these networks, enabling significantly faster processing compared to general-purpose processors. These optimizations are crucial for handling the massive datasets and complex calculations inherent in modern AI tasks.

Distinguishing NPUs from CPUs and GPUs

NPUs differ significantly from CPUs and GPUs in their architecture and intended use. CPUs are general-purpose processors capable of handling a wide range of tasks, but their performance is less optimized for the specific operations within neural networks. GPUs, initially designed for graphics processing, have been adapted for parallel computations and are well-suited for certain AI tasks. NPUs, however, are specifically designed for neural network computations, offering significantly higher efficiency in these tasks.

Comparing CPUs, GPUs, and NPUs

The following table highlights the comparative strengths and weaknesses of CPUs, GPUs, and NPUs in handling various types of tasks.

| Task Category | CPU | GPU | NPU |
|---|---|---|---|
| General Computing | Strong | Moderate | Weak |
| Graphics Rendering | Weak | Strong | Very Weak |
| Neural Network Training | Very Weak | Moderate | Strong |
| Neural Network Inference | Very Weak | Moderate | Strong |
| Data Movement | Moderate | Moderate | Moderate |
| Power Consumption | Moderate | Moderate | Moderate to Low (compared to GPUs for training) |

This table demonstrates the varying strengths and weaknesses across these types of processors. Each type is better suited to specific types of tasks, and combining them in a system can provide optimal performance for a wide range of applications.
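As a rough illustration of combining processors in one system, the sketch below routes each task category to the processor the table rates highest. The numeric `SCALE` and the `pick_processor` helper are assumptions introduced only for this example; a real scheduler would weigh many more factors.

```python
# Ratings copied from the comparison table; the numeric scale is an
# assumption made only so that the ratings can be compared.
SCALE = {"Very Weak": 0, "Weak": 1, "Moderate": 2, "Strong": 3}

RATINGS = {
    "General Computing": {"CPU": "Strong", "GPU": "Moderate", "NPU": "Weak"},
    "Graphics Rendering": {"CPU": "Weak", "GPU": "Strong", "NPU": "Very Weak"},
    "Neural Network Training": {"CPU": "Very Weak", "GPU": "Moderate", "NPU": "Strong"},
    "Neural Network Inference": {"CPU": "Very Weak", "GPU": "Moderate", "NPU": "Strong"},
}


def pick_processor(task: str) -> str:
    """Return the processor with the highest rating for the given task."""
    ratings = RATINGS[task]
    return max(ratings, key=lambda unit: SCALE[ratings[unit]])


if __name__ == "__main__":
    for task in RATINGS:
        print(f"{task}: {pick_processor(task)}")
```

In this toy model the CPU keeps general computing, the GPU takes graphics, and the NPU takes the neural network rows.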

Architectures and Design of NPUs

Neural Processing Units (NPUs) are specialized hardware accelerators designed to efficiently execute the computationally intensive tasks involved in deep learning. Their architectures are meticulously crafted to optimize performance for specific types of computations, leading to significant speedups compared to general-purpose processors. This specialized design allows NPUs to handle the massive datasets and complex algorithms central to modern machine learning applications.

The design of an NPU is a complex interplay of various architectural approaches. These approaches are carefully chosen to maximize the speed and efficiency of the NPU for particular types of deep learning operations. Different NPU architectures are tailored to excel in specific tasks. For example, some NPUs might be better suited for convolutional neural networks (CNNs), while others may be optimized for recurrent neural networks (RNNs).

Different Architectural Approaches

Various architectural approaches are employed in designing NPUs. These include dataflow architectures, tensor cores, and specialized memory hierarchies. Dataflow architectures are designed to maximize throughput by efficiently managing the flow of data through the processing pipeline. Tensor cores (the name NVIDIA uses for the matrix units in its GPUs; NPUs typically include comparable matrix engines) are dedicated units for performing matrix multiplications and other tensor operations, which are critical in deep learning models. Specialized memory hierarchies are designed to minimize memory access latency, a major bottleneck in many deep learning applications.
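To see why the memory hierarchy matters, the following sketch counts how many operand values a matrix multiplication fetches from slow memory with and without tiling. It is a simplified model under stated assumptions: every operand read in the naive version counts as one slow-memory fetch, while the tiled version copies small blocks into a local buffer once and reuses them. The function names and the fetch-counting model are hypothetical and do not describe any specific NPU.

```python
def matmul_naive(a, b):
    """Square matrix multiply; every operand read counts as a slow fetch."""
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    fetches = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                c[i][j] += a[i][k] * b[k][j]
                fetches += 2  # one value of a and one value of b
    return c, fetches


def matmul_tiled(a, b, tile):
    """Same product, but operands are copied tile by tile into a local buffer."""
    n = len(a)
    c = [[0.0] * n for _ in range(n)]
    fetches = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # Copy one tile of each operand into the (modelled) local buffer.
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                fetches += sum(len(r) for r in a_tile) + sum(len(r) for r in b_tile)
                # All further reads hit the local buffer and are not counted.
                for i, a_row in enumerate(a_tile):
                    for j in range(len(b_tile[0])):
                        acc = 0.0
                        for k, a_val in enumerate(a_row):
                            acc += a_val * b_tile[k][j]
                        c[i0 + i][j0 + j] += acc
    return c, fetches


if __name__ == "__main__":
    n = 8
    a = [[float(i + j) for j in range(n)] for i in range(n)]
    b = [[float(i - j) for j in range(n)] for i in range(n)]
    c_naive, f_naive = matmul_naive(a, b)
    c_tiled, f_tiled = matmul_tiled(a, b, tile=4)
    print("same result:", c_naive == c_tiled)
    print("slow-memory fetches, naive vs tiled:", f_naive, f_tiled)
```

In this model the fetch count drops by a factor equal to the tile size, which is the kind of data reuse that on-chip buffers are meant to enable.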

Hardware Components and Interconnections

NPUs consist of several interconnected hardware components, each playing a crucial role in the overall processing. Key components include processing elements (PEs), which perform the actual computations; memory units, which store data and instructions; and interconnect networks, which facilitate communication between different components. The interconnect network is critical for high throughput; efficient communication between PEs and memory is essential to prevent bottlenecks.

These components are interconnected to optimize data flow, maximizing parallelism and minimizing latency.
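One common way to arrange processing elements is to give each PE responsibility for a single output value, which it accumulates in place while operands stream past. The sketch below simulates that idea in software. It is an assumption-laden toy (one PE per output element, one multiply-accumulate per PE per time step) and not a description of any particular product; `pe_array_matmul` is a hypothetical name.

```python
def pe_array_matmul(a, b):
    """Simulate a grid of PEs, one per output element of a @ b."""
    rows, inner, cols = len(a), len(b), len(b[0])
    acc = [[0] * cols for _ in range(rows)]  # one accumulator register per PE
    for step in range(inner):  # one time step per index of the shared dimension
        # In hardware, all PEs would perform this multiply-accumulate in the
        # same cycle; the two loops here simply visit each PE in turn.
        for r in range(rows):
            for c in range(cols):
                acc[r][c] += a[r][step] * b[step][c]
    return acc, inner  # result and number of time steps taken


if __name__ == "__main__":
    result, steps = pe_array_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(result, "in", steps, "time steps")
```

With enough PEs, the number of time steps depends only on the shared inner dimension, not on the size of the output, which is where the parallel speedup comes from.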

Optimization for Deep Learning Operations

NPUs are designed to excel at specific types of computations prevalent in deep learning. For instance, they often feature specialized instructions for matrix multiplications, convolutions, and other fundamental deep learning operations. The architectures are tailored to exploit inherent parallelism within these operations. Hardware-level optimization techniques are employed to reduce the latency associated with data movement and computation.

This optimization significantly speeds up the execution of deep learning models.
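A frequently used trick for mapping convolutions onto matrix hardware is to unroll image patches into rows (often called im2col) so that the convolution becomes a matrix-vector or matrix-matrix product. The sketch below shows the idea for a single-channel image with no padding and stride 1. As in most deep learning frameworks, the kernel is not flipped, so this is strictly a cross-correlation. The function names are illustrative, and real NPUs may use other mappings.

```python
def conv2d_direct(image, kernel):
    """Slide the kernel over the image and sum the products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[y + dy][x + dx] * kernel[dy][dx]
                for dy in range(kh) for dx in range(kw))
            for x in range(ow)
        ]
        for y in range(oh)
    ]


def im2col(image, kh, kw):
    """Unroll every kh x kw patch of the image into one flat row."""
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [image[y + dy][x + dx] for dy in range(kh) for dx in range(kw)]
        for y in range(oh) for x in range(ow)
    ]


def conv2d_as_matmul(image, kernel):
    """Compute the same result as conv2d_direct via a patch-matrix product."""
    kh, kw = len(kernel), len(kernel[0])
    ow = len(image[0]) - kw + 1
    patches = im2col(image, kh, kw)
    flat_kernel = [kernel[dy][dx] for dy in range(kh) for dx in range(kw)]
    # Each output value is the dot product of one patch row with the kernel.
    flat_out = [sum(p * w for p, w in zip(patch, flat_kernel)) for patch in patches]
    return [flat_out[i:i + ow] for i in range(0, len(flat_out), ow)]


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    kernel = [[1, 0], [0, -1]]
    print(conv2d_direct(image, kernel))
    print(conv2d_as_matmul(image, kernel))
```

Both functions produce the same output; the second form trades extra memory for an operation that matrix units execute directly.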

Example NPU Architectures and Use Cases

| NPU Architecture | Key Features | Use Cases |
|---|---|---|
| Dataflow-based NPU | Optimized for high throughput, pipelined execution. | Large-scale deep learning training, image recognition, natural language processing. |
| Tensor Core-based NPU | Specialized hardware for matrix and tensor operations. | Deep learning inference, high-performance CNNs, RNNs. |
| Custom NPU for specific tasks | Tailored architecture for a specific application. | Specific workloads, high-performance applications in scientific computing or financial modelling. |

The table above highlights some of the common NPU architectures and their suitability for different use cases. Choosing the right architecture depends on the specific deep learning task and the desired performance characteristics.

Applications and Use Cases of NPUs

Neural Processing Units (NPUs) are specialized hardware accelerators designed to perform complex computations required for artificial intelligence (AI) and machine learning (ML) tasks. Their architecture is optimized for the specific mathematical operations prevalent in these fields, leading to significant performance gains and energy efficiency improvements compared to general-purpose processors. This specialized design makes NPUs ideal for a variety of applications.

NPUs excel in applications requiring high-throughput processing of large datasets and complex algorithms. Their optimized architecture enables faster inference and training of AI models, accelerating the development and deployment of sophisticated AI systems. This speed and efficiency are particularly valuable in real-time applications where responsiveness is critical.

AI and Machine Learning Applications

NPUs are becoming increasingly important in AI and machine learning applications due to their ability to accelerate model training and inference. They are highly effective for tasks like image recognition, natural language processing, and speech synthesis, enabling faster processing of large datasets and more complex models. The specialized instructions and hardware support for deep learning algorithms in NPUs allow for faster and more efficient execution of these algorithms, leading to improved performance in applications like object detection and image classification.

Edge Device Integration

NPUs are playing a crucial role in accelerating the development of intelligent edge devices. These devices, such as smartphones, IoT sensors, and autonomous vehicles, require low-latency and energy-efficient computation. NPUs, with their optimized architectures, are well-suited for these needs, enabling real-time processing and decision-making at the edge. This allows for faster response times and reduced reliance on cloud infrastructure, especially in situations with limited or unreliable connectivity.
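The latency argument can be made concrete with a small budget calculation. All numbers below are hypothetical and chosen only for illustration: a 30 frames-per-second deadline, an assumed on-device inference time, and an assumed network round trip for the cloud path.

```python
# One frame period at 30 frames per second (an assumed real-time target).
FRAME_DEADLINE_MS = 1000.0 / 30


def on_device_latency_ms(npu_inference_ms):
    """On-device path: only the local inference time counts."""
    return npu_inference_ms


def cloud_latency_ms(network_round_trip_ms, server_inference_ms):
    """Cloud path: the network round trip is added to the server's inference time."""
    return network_round_trip_ms + server_inference_ms


def meets_deadline(latency_ms, deadline_ms=FRAME_DEADLINE_MS):
    return latency_ms <= deadline_ms


if __name__ == "__main__":
    # Hypothetical timings, not measurements of any real system.
    local = on_device_latency_ms(npu_inference_ms=12.0)
    remote = cloud_latency_ms(network_round_trip_ms=60.0, server_inference_ms=5.0)
    print(f"on-device: {local:.1f} ms, meets deadline: {meets_deadline(local)}")
    print(f"cloud:     {remote:.1f} ms, meets deadline: {meets_deadline(remote)}")
```

Under these assumptions the server computes faster than the NPU, yet the cloud path still misses the frame deadline because the network round trip dominates.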

Examples of Industries Leveraging NPUs

Several industries are actively adopting NPUs to enhance their operations. Autonomous vehicles, for instance, rely on NPUs for real-time processing of sensor data, enabling crucial decision-making for navigation and object detection. Mobile devices leverage NPUs to improve performance in mobile applications such as image processing, augmented reality, and personalized recommendations. The Internet of Things (IoT) is another area where NPUs are proving valuable for tasks like processing sensor data and enabling smart home features.

Application Domains and NPU Advantages

| Application Domain | NPU Advantages |
|---|---|
| Autonomous Vehicles | Real-time processing of sensor data, enabling crucial decision-making for navigation and object detection. |
| Mobile Devices | Improved performance in mobile applications such as image processing, augmented reality, and personalized recommendations. |
| IoT Devices | Efficient processing of sensor data, enabling smart home features and real-time data analysis. |
| Image Recognition and Classification | Faster processing of large image datasets and more complex models, leading to improved performance. |
| Natural Language Processing | Accelerated processing of large text datasets and complex language models. |

NPU Performance and Efficiency

Neural Processing Units (NPUs) are increasingly crucial for accelerating deep learning tasks. Their specialized architectures are designed to optimize performance and energy efficiency, differentiating them from CPUs and GPUs in specific use cases. This section delves into the key performance metrics and efficiency considerations that characterize NPUs.

Evaluating NPU performance and efficiency is complex, encompassing metrics that go beyond raw processing speed. Considerations like energy consumption, latency, and throughput are critical to understanding the overall effectiveness of an NPU in a given application.

Performance Metrics for NPUs

Various metrics are employed to assess the performance of NPUs. These metrics capture different aspects of performance, including the speed and efficiency of operations. Key metrics include:

- Throughput: The rate at which an NPU can process data, measured in operations per second (OPS) and often quoted in tera-operations per second (TOPS). This metric indicates raw processing power. High throughput matters most for workloads that process large volumes of data.

- Latency: The time taken to process a single piece of data. Lower latency is critical for real-time applications. A trade-off often exists between throughput and latency.

- Energy Efficiency: The ratio of performance to energy consumption. Crucial for battery-powered devices and resource-constrained environments. An NPU with high energy efficiency can achieve comparable performance with significantly lower power consumption.

- Accuracy: The correctness of the results produced by the NPU. In deep learning applications, accuracy is a paramount concern and is often measured using standard metrics like precision and recall.
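The trade-off between throughput and latency can be illustrated with a simple batching model. The sketch assumes that each batch costs a fixed overhead plus a per-item time; both constants are hypothetical defaults, not figures for any real NPU.

```python
def batch_latency_ms(batch_size, overhead_ms=2.0, per_item_ms=0.5):
    """Time until a whole batch is finished (assumed linear cost model)."""
    return overhead_ms + per_item_ms * batch_size


def throughput_per_s(batch_size, overhead_ms=2.0, per_item_ms=0.5):
    """Items completed per second when batches run back to back."""
    latency_s = batch_latency_ms(batch_size, overhead_ms, per_item_ms) / 1000.0
    return batch_size / latency_s


if __name__ == "__main__":
    for size in (1, 8, 32):
        print(
            f"batch={size:>2}  latency={batch_latency_ms(size):5.1f} ms  "
            f"throughput={throughput_per_s(size):7.1f} items/s"
        )
```

Larger batches amortize the fixed overhead and raise throughput, but every item in the batch waits longer for its result, which is why real-time applications tend to favor small batches.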

Energy Efficiency Comparison

NPUs are often designed with energy efficiency in mind. Their specialized architectures differ from CPUs and GPUs, leading to varying energy consumption profiles for similar tasks. In many instances, NPUs exhibit significantly lower energy consumption than CPUs or GPUs for deep learning workloads.

- CPUs: Generally less energy-efficient than NPUs for deep learning tasks. Their general-purpose nature makes them less optimized for the specific operations found in these algorithms.

- GPUs: Often more energy-efficient than CPUs for highly parallel computations, but NPUs can surpass them in energy efficiency when handling deep learning workloads. The specialized architectures of NPUs are better suited to the specific operations of deep learning, often leading to reduced energy consumption compared to GPUs.

Computational Speed in Deep Learning

NPUs excel at accelerating deep learning tasks. Their architectures are tailored to the specific computations required for these algorithms, leading to significant performance gains.

- Matrix Multiplication: For matrix multiplication, a fundamental operation in deep learning, NPUs can deliver substantially higher throughput per watt than CPUs, and often than GPUs.

- Convolutional Operations: Convolutional operations are critical in convolutional neural networks. NPUs typically process them faster than CPUs and, for a given power budget, often faster than GPUs.

Factors Influencing NPU Energy Efficiency

Several factors contribute to the energy efficiency of NPUs. These factors influence the overall design and implementation of the hardware.

- Specialized Hardware: NPUs employ specialized hardware units, optimized for the specific operations needed in deep learning, leading to lower power consumption.

- Low-Precision Arithmetic: Using lower-precision floating-point arithmetic (e.g., FP16) reduces the computational load and energy consumption, without significant accuracy loss in many deep learning models.

- Efficient Memory Access: Optimized memory access mechanisms reduce the time spent on memory operations, leading to lower energy consumption.
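The effect of low-precision arithmetic can be checked directly. The sketch below rounds a few example values to IEEE 754 half precision (FP16) using Python's standard `struct` module and reports the relative error. The sample values are arbitrary and chosen only for illustration.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def relative_error(x: float) -> float:
    return abs(to_fp16(x) - x) / abs(x)


if __name__ == "__main__":
    samples = [0.1, -0.75, 3.14159, 0.001]  # arbitrary example values
    for value in samples:
        print(f"{value:>9}  fp16={to_fp16(value):.6f}  rel_err={relative_error(value):.2e}")
    # Storage per value: 2 bytes for FP16 versus 4 bytes for FP32.
    print(struct.calcsize("<e"), "bytes vs", struct.calcsize("<f"), "bytes")
```

Each value takes half the storage of FP32, and for numbers in FP16's normal range the relative rounding error stays below about 0.05%, which many models tolerate.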

Benchmarking NPU Performance

Comparing the performance and energy consumption of various NPU models is essential. The following table presents a simplified comparison:

| NPU Model | Power Consumption (mW) | Performance (OPS) |
|---|---|---|
| NPU-A | 100 | 10^9 |
| NPU-B | 150 | 1.5 × 10^9 |
| NPU-C | 80 | 8 × 10^9 |

Note: This table is for illustrative purposes only and does not represent actual NPU models. Actual values will vary depending on the specific architecture, workload, and implementation details.
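Dividing performance by power gives a single efficiency figure that makes the rows easier to compare. The sketch below does this for the illustrative models, taking the table's performance entries as 10^9, 1.5 × 10^9, and 8 × 10^9 OPS. The `gops_per_watt` helper is a name introduced here for the example.

```python
# (power in mW, performance in OPS) for the illustrative models in the table.
MODELS = {
    "NPU-A": (100, 1e9),
    "NPU-B": (150, 1.5e9),
    "NPU-C": (80, 8e9),
}


def gops_per_watt(power_mw: float, ops_per_s: float) -> float:
    """Energy efficiency in giga-operations per second per watt."""
    watts = power_mw / 1000.0
    return (ops_per_s / 1e9) / watts


if __name__ == "__main__":
    ranked = sorted(MODELS, key=lambda name: gops_per_watt(*MODELS[name]), reverse=True)
    for name in ranked:
        print(f"{name}: {gops_per_watt(*MODELS[name]):.1f} GOPS/W")
```

On these made-up figures, NPU-A and NPU-B are equally efficient even though NPU-B is faster, while NPU-C is both the fastest and the most efficient.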

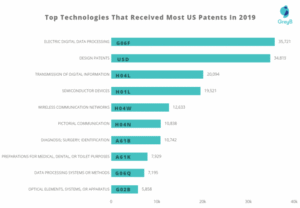

Future Trends and Developments in NPUs

Neural Processing Units (NPUs) are rapidly evolving, driven by the increasing demand for specialized hardware capable of handling the computational demands of artificial intelligence (AI) and machine learning (ML). This evolution promises significant advancements in various fields, impacting how we approach problem-solving and data analysis.

Future Advancements and Research Directions

Ongoing research focuses on enhancing NPU efficiency and versatility. This includes exploring novel architectures that better optimize power consumption and throughput, particularly for complex AI models. Researchers are also investigating new memory architectures tailored to the specific needs of NPUs, aiming to reduce latency and increase bandwidth. Advanced techniques for hardware acceleration of specific AI tasks, like image recognition or natural language processing, are also being actively pursued.

Potential Impact on Healthcare and Scientific Research

NPUs hold immense potential to revolutionize healthcare and scientific research. In healthcare, faster and more accurate diagnostics are achievable through the acceleration of medical image analysis and pattern recognition tasks. The ability to process massive datasets in scientific research can unlock deeper insights into complex biological processes and potentially lead to breakthroughs in drug discovery and personalized medicine.

These advancements will depend on the continued development of specialized NPUs designed for the specific demands of these fields.

Emerging Trends in NPU Architectures

The evolution of NPU architectures is marked by a shift towards more specialized designs. This trend involves the development of architectures that cater to particular AI algorithms and models. For instance, architectures optimized for deep learning, particularly for tasks requiring high-volume data processing, are becoming increasingly prevalent. Furthermore, architectures focusing on specific use cases, like edge computing, are gaining importance, facilitating localized AI processing.

Future Applications and Use Cases

The applications of NPUs are expanding beyond traditional computing domains. We can anticipate increased adoption in autonomous vehicles, where NPUs are crucial for real-time decision-making and sensor data processing. Furthermore, NPUs are likely to become integral to smart cities, enabling efficient management of traffic, energy, and security systems. These are just a few examples; the potential for NPUs to reshape various industries is vast.

Predicted Advancements and Potential Impacts

| Predicted Advancement | Potential Impact |
|---|---|
| Increased energy efficiency in NPU design | Reduced power consumption, enabling deployment in resource-constrained environments (e.g., mobile devices, IoT). |
| Development of specialized NPUs for specific AI tasks | Improved performance and reduced latency in targeted applications (e.g., image recognition in medical imaging). |
| Integration of NPUs with neuromorphic computing | Enabling more biologically inspired AI systems with potentially higher levels of adaptability and efficiency. |
| Enhanced NPU-CPU collaboration | Improved overall system performance by leveraging the strengths of both CPU and NPU, leading to more robust and efficient computing systems. |

NPU vs. Other Processing Units (CPU & GPU)

Neural Processing Units (NPUs) are emerging as a distinct class of processing units, designed specifically for accelerating the computationally intensive tasks inherent in artificial intelligence and machine learning. Understanding their strengths and weaknesses relative to Central Processing Units (CPUs) and Graphics Processing Units (GPUs) is crucial for optimizing performance and selecting the appropriate hardware for specific applications.

NPUs excel in accelerating the execution of neural network algorithms. Their specialized architectures, tailored for matrix operations and other common deep learning computations, often result in significantly higher performance and efficiency compared to general-purpose processors like CPUs and GPUs, especially for AI workloads. This allows for faster training of complex models and real-time inference.

Comparison of Functionalities

NPUs are fundamentally different from CPUs and GPUs in their core design. CPUs are general-purpose processors, adept at handling a broad range of tasks, including operating system management and application execution. GPUs, initially designed for graphics rendering, have evolved to excel at parallel computations, proving highly effective for certain types of data-intensive tasks. NPUs, on the other hand, are specifically optimized for the unique mathematical operations required by neural networks.

Different Workloads and Processing Unit Strengths

CPUs are generally well-suited for tasks requiring a diverse range of operations, including complex logic, control flow, and managing system resources. Their strengths lie in their versatility and wide applicability. GPUs, with their massive parallel processing capabilities, are ideal for tasks involving substantial data parallelism, such as image processing and scientific simulations. NPUs, specifically designed for neural network computations, provide the highest performance and efficiency for training and inference of deep learning models.

Strengths and Weaknesses in Handling Computational Tasks

- CPUs: CPUs are strong at handling a wide variety of tasks, but their performance in matrix operations and other intensive calculations is generally slower than specialized processors. Their strength lies in general-purpose computing, not AI-specific operations.

- GPUs: GPUs are remarkably effective at handling parallel computations, making them powerful for certain AI tasks. However, they may not be as efficient as NPUs for specific neural network operations, and their performance can vary depending on the specific algorithm.

- NPUs: NPUs are designed specifically for neural network operations. They excel at accelerating the computation-intensive tasks of training and inference, providing significant performance gains over CPUs and GPUs for AI applications.

Comparative Table of Characteristics and Capabilities

| Characteristic | CPU | GPU | NPU |
|---|---|---|---|
| Architecture | General-purpose, complex instruction set | Parallel processing, SIMD (Single Instruction, Multiple Data) | Specialized for neural network operations, optimized for matrix computations |
| Performance for Neural Networks | Low | Moderate to High (depending on algorithm) | High |
| Efficiency for Neural Networks | Low | Moderate to High (depending on algorithm) | High |
| Power Consumption | Moderate | High | Variable, generally lower than GPUs for comparable tasks |
| Programming Model | High-level languages | CUDA, OpenCL | AI-specific frameworks and libraries |
| Applications | General-purpose computing, system software | Graphics rendering, scientific simulations, AI (certain types) | AI-specific tasks, machine learning, deep learning |

Deep Dive into NPU Functionality

Neural Processing Units (NPUs) are specialized hardware accelerators designed for efficiently executing the computationally intensive tasks of artificial intelligence (AI) and machine learning (ML). Their unique architecture allows for optimized performance in specific AI operations, distinguishing them from general-purpose processors like CPUs and GPUs. This section delves into the inner workings of NPUs, examining the fundamental algorithms, key components, and operational details.

Core Architectural Components

NPUs employ a highly specialized architecture, often incorporating custom instructions and data paths. Understanding these components is key to grasping their exceptional performance in AI tasks.

- Specialized Arithmetic Logic Units (ALUs): NPUs often feature dedicated ALUs optimized for operations like matrix multiplication, convolution, and activation functions, critical in neural network computations. These specialized ALUs are crucial for handling the massive amounts of data and complex operations inherent in AI algorithms.

- Memory Hierarchy: A hierarchical memory system, including high-speed caches and specialized memory banks, is essential for minimizing data access latency. This optimized memory hierarchy is crucial for efficient data retrieval and storage during intensive AI operations. Efficient memory management is key for preventing bottlenecks that hinder performance.

- Interconnect Network: Efficient data transfer between different parts of the NPU is paramount. The interconnect network, often a custom-designed structure, facilitates rapid data movement between ALUs, memory units, and other components.

- Control Unit: The control unit manages the execution of instructions, coordinating the various components and ensuring proper data flow. It orchestrates the interactions between different parts of the NPU to complete complex AI tasks.

Underlying Algorithms and Operations

NPUs are designed to accelerate specific types of computations that form the core of machine learning algorithms. These algorithms leverage mathematical operations crucial to training and running neural networks.

- Matrix Multiplication: This operation is fundamental to many neural network layers. NPUs employ specialized hardware and algorithms to significantly speed up matrix multiplication, enabling rapid processing of large datasets.

- Convolutional Operations: In convolutional neural networks (CNNs), NPUs are optimized to efficiently perform convolutional operations. These operations are critical for image and video processing in AI applications.

- Activation Functions: Activation functions introduce non-linearity to neural networks, enabling the network to learn complex patterns. NPUs are optimized for these functions to facilitate the learning process.

- Specialized Instructions: NPUs frequently feature specialized instructions to accelerate common operations within neural networks. This tailored instruction set contributes significantly to overall performance.
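The first three operations in this list come together in an ordinary fully connected layer: a matrix-vector product built from multiply-accumulate steps, followed by an activation function. The following is a minimal software sketch of that computation, using ReLU as the example activation. The function names and the `weights[i][j]` layout are choices made for this illustration.

```python
def relu(x: float) -> float:
    """Rectified linear unit: pass positive values, clamp negatives to zero."""
    return x if x > 0 else 0.0


def dense_layer(inputs, weights, biases):
    """Compute relu(sum_i inputs[i] * weights[i][j] + biases[j]) for each output j."""
    outputs = []
    for j, bias in enumerate(biases):
        acc = bias
        for i, x in enumerate(inputs):
            acc += x * weights[i][j]  # one multiply-accumulate (MAC)
        outputs.append(relu(acc))
    return outputs


if __name__ == "__main__":
    inputs = [1.0, 2.0]
    weights = [[1.0, -1.0], [0.5, -2.0]]  # weights[i][j]: input i to output j
    biases = [0.0, 1.0]
    print(dense_layer(inputs, weights, biases))
```

The inner loop is the multiply-accumulate pattern that NPU hardware replicates many times over, and the activation is applied once per output.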

Detailed Analysis of Matrix Multiplication

Matrix multiplication is a cornerstone operation in numerous AI tasks. NPUs excel at performing this operation efficiently due to their specialized hardware and optimized algorithms.

| Operation | Description |
|---|---|
| Matrix Multiplication | NPUs utilize highly optimized algorithms, leveraging parallel processing capabilities, to perform matrix multiplication orders of magnitude faster than general-purpose processors. This optimization is achieved through specialized hardware and software to reduce the execution time. |

“NPUs are designed to exploit the inherent parallelism in matrix operations, maximizing performance and minimizing latency in AI computations.”
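A back-of-the-envelope count shows where the parallel speedup comes from. Multiplying an M × K matrix by a K × N matrix takes M·K·N multiply-accumulate (MAC) operations; if the hardware has many MAC units and keeps them all busy, the cycle count falls proportionally. The sketch assumes ideal utilization and ignores memory stalls, and the matrix sizes and unit counts are arbitrary examples.

```python
import math


def mac_count(m: int, k: int, n: int) -> int:
    """MACs needed to multiply an (m x k) matrix by a (k x n) matrix."""
    return m * k * n


def ideal_cycles(macs: int, mac_units: int) -> int:
    """Cycles needed if every MAC unit does one MAC per cycle with no stalls."""
    return math.ceil(macs / mac_units)


if __name__ == "__main__":
    macs = mac_count(128, 256, 64)  # arbitrary example dimensions
    print("MACs:", macs)
    for units in (1, 64, 1024):
        print(f"{units:>5} MAC units -> {ideal_cycles(macs, units)} cycles")
```

Real hardware rarely reaches this ideal because operands must arrive from memory in time, which is why the memory hierarchy and interconnect matter as much as the number of arithmetic units.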

Last Word

In summary, NPUs represent a significant leap forward in specialized processing, particularly in handling AI tasks. Their optimized architectures and energy efficiency make them ideal for a variety of applications, from mobile devices to complex scientific simulations. While still evolving, NPUs are poised to play a pivotal role in shaping the future of computing and its applications.
