History of Computers: From Abacus to AI

The history of computers traces the remarkable journey of computing technology from its humble beginnings with the abacus to the sophisticated artificial intelligence systems of today. This exploration covers the pivotal moments, key inventors, and transformative technologies that shaped the digital world.

The narrative encompasses the evolution of calculating devices, the rise of electronic computers, the software revolution, the digital age, and the burgeoning field of AI. It also examines the impact of computers on society, highlighting both the positive and negative effects of these technological advancements.

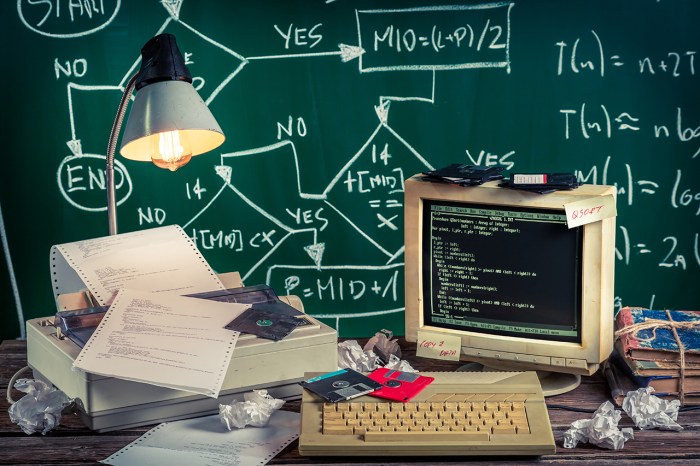

Early Calculating Devices


The quest for easier and more efficient calculation has driven human innovation throughout history. From rudimentary counting tools to sophisticated mechanical marvels, the evolution of calculating devices reflects a continuous push towards automation and precision. This journey laid the foundation for the digital age and continues to inspire advancements in computation today.

Evolution of Calculating Tools

Early humans relied on simple counting methods, often using their fingers or physical objects. The abacus, a significant step forward, emerged as a portable and versatile tool for performing arithmetic. Sliding its beads along rods let users add, subtract, multiply, and divide, making complex calculations relatively easy. Its widespread adoption across many cultures demonstrates its practical value and its enduring influence on methods of calculation.

Abacus

The abacus, which appeared in various forms across different civilizations, was a revolutionary calculating tool for its time. It consists of rows of beads strung on rods, with each rod representing a different place value. By moving the beads, users could perform addition, subtraction, multiplication, and division. Its portability and ease of use made it highly accessible and an essential tool for merchants, traders, and scholars in ancient civilizations.

The simplicity of its design allowed for quick and accurate calculations, especially in the absence of more advanced tools.
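To make the place-value idea concrete, here is a minimal Python sketch that maps a whole number onto abacus rods. It assumes a soroban-style layout (one “heaven” bead worth 5 and four “earth” beads worth 1 on each rod), which is only one of the many abacus designs; the function names are hypothetical.

```python
def to_soroban(n: int) -> list[tuple[int, int]]:
    """Return (heaven, earth) bead counts for each rod, most significant rod first."""
    if n < 0:
        raise ValueError("this sketch only represents non-negative whole numbers")
    rods = []
    for digit in str(n):
        d = int(digit)
        # One heaven bead counts as 5; up to four earth beads count as 1 each.
        rods.append((d // 5, d % 5))
    return rods


def from_soroban(rods: list[tuple[int, int]]) -> int:
    """Read a number back off the rods, shifting one decimal place per rod."""
    value = 0
    for heaven, earth in rods:
        value = value * 10 + heaven * 5 + earth
    return value


print(to_soroban(1947))  # [(0, 1), (1, 4), (0, 4), (1, 2)]
```

Each rod independently holds one decimal digit, which is exactly what lets an operator work column by column.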

Napier’s Bones

John Napier’s invention of Napier’s bones, published in 1617, was a significant leap forward in computational tools. These were small rods, each marked with the multiples of a single digit, that reduced multiplication to reading off and adding single-digit partial products; they could also assist with division. Napier is also famous for inventing logarithms, but the bones do not rely on them: they mechanize the lattice method of multiplication. This ingenious device, though somewhat cumbersome, was an important precursor to mechanical calculators.
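The lattice method that the bones mechanize can be sketched in a few lines of Python. This is a minimal model, assuming each bone is represented as the (tens, units) digits of its digit’s multiples 1 through 9; the helper names are hypothetical, and a physical set of bones was of course read by eye rather than computed.

```python
def bone(digit: int) -> list[tuple[int, int]]:
    """Rows 1..9 of a bone: the (tens, units) digits of digit * k."""
    return [divmod(digit * k, 10) for k in range(1, 10)]


def multiply_by_digit(number: int, k: int) -> int:
    """Multiply a non-negative integer by a digit 1..9 by reading row k of the bones."""
    row = [bone(int(ch))[k - 1] for ch in str(number)]
    result, carry, place = 0, 0, 1
    # Walk the diagonals from right to left: each diagonal adds a bone's
    # units digit to the tens digit of the bone on its right.
    for i in range(len(row) - 1, -1, -1):
        tens_from_right = row[i + 1][0] if i + 1 < len(row) else 0
        total = row[i][1] + tens_from_right + carry
        result += (total % 10) * place
        carry, place = total // 10, place * 10
    # The leftmost diagonal holds only the first bone's tens digit.
    result += (row[0][0] + carry) * place
    return result


def napier_multiply(a: int, b: int) -> int:
    """One row lookup per digit of b, then add the shifted partial products."""
    total = 0
    for place, ch in enumerate(reversed(str(b))):
        if ch != "0":
            total += multiply_by_digit(a, int(ch)) * 10 ** place
    return total


print(multiply_by_digit(425, 6))  # 2550
print(napier_multiply(425, 36))   # 15300
```

The user only ever adds single digits along a diagonal, which is why the bones made multiplication faster and less error-prone than working from memorized tables.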

Mechanical Calculators

The 17th and 18th centuries witnessed the development of mechanical calculators, marking a pivotal transition in calculating tools. These devices, operated by turning dials or hand cranks, used gears and levers to perform arithmetic. The Pascaline, invented by Blaise Pascal, and the Stepped Reckoner, designed by Gottfried Wilhelm Leibniz, exemplify these early machines, which offered greater accuracy and speed than their predecessors.

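The Pascaline could add and subtract directly, while the Stepped Reckoner could also multiply and divide by repeated addition or subtraction combined with shifting a movable carriage. Both were major advancements over the abacus and Napier’s bones, although they were often complex to operate.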
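The Stepped Reckoner’s approach of repeated addition or subtraction plus carriage shifts is easy to model in software. The sketch below is a simplified abstraction rather than a simulation of Leibniz’s gearing: each “crank turn” adds or subtracts the operand at the current carriage position, and the function names are illustrative.

```python
def stepped_multiply(multiplicand: int, multiplier: int) -> tuple[int, int]:
    """For non-negative integers, return (product, crank_turns) via repeated addition."""
    accumulator = turns = 0
    # Start at the units position and shift the carriage one place left per digit.
    for shift, ch in enumerate(reversed(str(multiplier))):
        for _ in range(int(ch)):
            accumulator += multiplicand * 10 ** shift  # one crank turn
            turns += 1
    return accumulator, turns


def stepped_divide(dividend: int, divisor: int) -> tuple[int, int]:
    """Return (quotient, remainder) via repeated subtraction and carriage shifts."""
    if divisor <= 0 or dividend < 0:
        raise ValueError("expects a non-negative dividend and a positive divisor")
    quotient, remainder = 0, dividend
    # Begin with the divisor shifted as far left as it can usefully go.
    shift = max(len(str(dividend)) - len(str(divisor)), 0)
    while shift >= 0:
        step = divisor * 10 ** shift
        while remainder >= step:  # subtract until the remainder would go negative
            remainder -= step
            quotient += 10 ** shift
        shift -= 1
    return quotient, remainder


print(stepped_multiply(127, 34))  # (4318, 7)
print(stepped_divide(4318, 34))   # (127, 0)
```

Shifting the carriage is what keeps the number of crank turns proportional to the sum of the multiplier’s digits (7 turns for 34) rather than to the multiplier itself (34 turns).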

Comparison of Early Calculating Devices

| Device Name | Year Invented | Key Features | Applications |
|---|---|---|---|
| Abacus | Unknown (ancient times) | Rows of beads on rods, place-value system | Trade, commerce, accounting |
| Napier’s Bones | 1617 | Rods marked with the multiples of each digit (lattice multiplication) | Multiplication and division |
| Pascaline | 1642 | Geared dials with automatic carry | Addition and subtraction |
| Stepped Reckoner | 1673 | Stepped drum and movable carriage | Multiplication and division as well as addition and subtraction |

Early calculating devices, from the abacus to mechanical calculators, demonstrate a progression in efficiency and complexity. While the abacus remained a fundamental tool for centuries, Napier’s bones, the Pascaline, and the Stepped Reckoner represented advancements in automation and computational capabilities. These early tools laid the groundwork for future developments in computing technology.

The Rise of Electronic Computing

The transition from mechanical calculating devices to electronic computers marked a significant leap in computing capabilities. This shift was driven by advancements in electronics and a growing need for faster and more versatile computation. Early electronic computers, while rudimentary by today’s standards, laid the groundwork for the sophisticated systems we rely on now.

The development of electronic computers was not a sudden event but rather a gradual process, building upon the theoretical and practical foundations laid by previous inventors.

Key innovations in vacuum tube technology, logic circuits, and data storage played crucial roles in making electronic computation possible. This era saw the emergence of machines capable of performing complex calculations far beyond the reach of their mechanical predecessors.

Pivotal Inventors and Their Contributions

Numerous individuals played pivotal roles in the development of electronic computers, and their ingenuity paved the way for the modern computing age. Alan Turing’s theoretical work on computability, for instance, provided a crucial framework for understanding what these machines could and could not compute. Similarly, the stored-program architecture described by John von Neumann in his 1945 report on the EDVAC, in which instructions and data share the same memory, remains a cornerstone of modern computer design.
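The stored-program idea, in which instructions and data live in the same memory and the processor repeatedly fetches, decodes, and executes the next instruction, can be illustrated with a toy accumulator machine. The instruction set and its encoding (opcode times 100 plus an address) are hypothetical and far simpler than EDVAC’s; the example program sums the integers from n down to 1.

```python
def run(memory: list[int], max_steps: int = 10_000) -> list[int]:
    """Execute a program stored in memory (modified in place) and return the memory."""
    acc, pc = 0, 0  # accumulator and program counter
    for _ in range(max_steps):
        opcode, addr = divmod(memory[pc], 100)  # fetch and decode
        pc += 1
        if opcode == 0:    # HALT
            return memory
        elif opcode == 1:  # LOAD addr
            acc = memory[addr]
        elif opcode == 2:  # ADD addr
            acc += memory[addr]
        elif opcode == 3:  # SUB addr
            acc -= memory[addr]
        elif opcode == 4:  # STORE addr
            memory[addr] = acc
        elif opcode == 5:  # JUMP to addr if acc == 0
            if acc == 0:
                pc = addr
        elif opcode == 6:  # JUMP to addr unconditionally
            pc = addr
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")
    raise RuntimeError("step limit reached")


# Program: while n != 0: total += n; n -= 1   (n at 20, total at 21, constant 1 at 22)
program = [120, 509, 121, 220, 421, 120, 322, 420, 600, 0]
memory = program + [0] * (23 - len(program))
memory[20], memory[21], memory[22] = 5, 0, 1
run(memory)
print(memory[21])  # 15
```

Because the program is just numbers in memory, loading a different program means writing different values, not rewiring the machine, which is the practical advantage the stored-program design offered over plugboard-configured machines like the original ENIAC.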

Technological Advancements

Several technological advancements enabled the transition to electronic computing. The vacuum tube, a crucial component in early electronic circuits, could amplify electrical signals and, more importantly for computing, switch them on and off far faster than mechanical relays. Logic gates, which perform basic logical operations such as AND, OR, and NOT, were essential for building complex computing circuits. Improvements in data storage, including magnetic drums, magnetic tape, and later magnetic-core memory, allowed for the efficient storage and retrieval of information.
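To see why logic gates mattered so much, the sketch below composes simulated AND, OR, and XOR gates into a half adder, a full adder, and finally an 8-bit ripple-carry adder. The gates here are plain Python bit operations standing in for vacuum-tube circuits, and the 8-bit width is an arbitrary choice for illustration.

```python
def and_gate(a: int, b: int) -> int:
    return a & b


def or_gate(a: int, b: int) -> int:
    return a | b


def xor_gate(a: int, b: int) -> int:
    return a ^ b


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits; return (sum_bit, carry_bit)."""
    return xor_gate(a, b), and_gate(a, b)


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry using two half adders and an OR gate."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, or_gate(c1, c2)


def ripple_add(x: int, y: int, width: int = 8) -> tuple[int, int]:
    """Add two unsigned integers bit by bit; return (sum modulo 2**width, carry_out)."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


print(ripple_add(100, 27))   # (127, 0)
print(ripple_add(200, 100))  # (44, 1): the sum overflowed 8 bits
```

Every arithmetic operation in an electronic computer ultimately reduces to combinations of gates like these; the carry "ripples" from one bit position to the next, just as carries propagated between wheels in mechanical calculators.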

Types of Early Electronic Computers

Early electronic computers varied significantly in their design and purpose. Some, like the ENIAC, were general-purpose machines designed to tackle a wide range of computational tasks. Others, like Colossus, were specialized machines designed for specific purposes, such as breaking codes during World War II. These machines demonstrated the versatility and potential of electronic computation, although their size and complexity were considerable.

Comparison of Electronic Computer Generations

| Generation | Key Characteristics | Impact |
|---|---|---|
| First Generation (1940s-1950s) | Used vacuum tubes; large and power-hungry; programmed in machine language. | Marked the beginning of electronic computing, demonstrating the potential of these machines for complex calculations. |
| Second Generation (1950s-1960s) | Used transistors, which were smaller and more efficient than vacuum tubes; programmed in assembly language and early high-level languages such as FORTRAN and COBOL. | Led to a significant reduction in size and power consumption, enabling more widespread use of computers. |
| Third Generation (1960s-1970s) | Used integrated circuits (ICs); further miniaturization and higher performance; operating systems became widespread. | Enabled even more powerful and versatile computers, opening doors to applications in diverse fields like business and scientific research. |

The Software Revolution

The transition from rudimentary hardware to powerful computing machines was fundamentally shaped by the development of software. Software enabled computers to transcend their initial limitations, transforming them from specialized machines into adaptable tools capable of performing complex and diverse tasks. Programming languages, operating systems, and software applications became crucial to realizing the full potential of computers.

This revolution was driven by the creation of programming languages, which allowed humans to communicate instructions to computers in a more accessible manner, and the development of operating systems that managed computer resources efficiently. This evolution significantly impacted computer development, allowing for more complex programs and broader applications.

Programming Languages

Programming languages emerged as crucial tools for instructing computers, allowing more complex instructions and a wider range of applications. Early programs were written in machine code or assembly language, which were tied to specific hardware and cumbersome to use. Higher-level languages such as FORTRAN and COBOL abstracted away much of the hardware detail, leading to greater programmer productivity. Languages such as ALGOL, Pascal, and C then popularized structured programming, significantly improving code organization and maintainability.

Object-oriented programming, pioneered by Simula and Smalltalk and later popularized by languages such as C++, Java, and Python, further improved code modularity and reusability. This evolution led to more efficient and robust software development.
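What a compiler does for a high-level language can be shown in miniature: translate a human-readable expression into a sequence of low-level instructions, then run them. This sketch uses Python’s standard `ast` module for parsing and targets an invented stack-machine instruction set (`PUSH`, `LOAD`, `ADD`, `SUB`, `MUL`); it is not how FORTRAN or any historical compiler actually worked.

```python
import ast
import operator

# Map supported AST operator types to the names of our hypothetical instructions.
OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL"}
ARITHMETIC = {"ADD": operator.add, "SUB": operator.sub, "MUL": operator.mul}


def compile_expr(source: str) -> list[tuple]:
    """Compile an arithmetic expression into stack-machine instructions."""
    def emit(node: ast.AST) -> list[tuple]:
        if isinstance(node, ast.BinOp) and type(node.op) in OPCODES:
            # Post-order traversal: both operands first, then the operation.
            return emit(node.left) + emit(node.right) + [(OPCODES[type(node.op)],)]
        if isinstance(node, ast.Name):
            return [("LOAD", node.id)]
        if isinstance(node, ast.Constant) and isinstance(node.value, int):
            return [("PUSH", node.value)]
        raise ValueError(f"unsupported construct: {ast.dump(node)}")

    return emit(ast.parse(source, mode="eval").body)


def execute(code: list[tuple], variables: dict[str, int]) -> int:
    """Run compiled instructions on a simple operand stack."""
    stack: list[int] = []
    for instruction in code:
        op = instruction[0]
        if op == "PUSH":
            stack.append(instruction[1])
        elif op == "LOAD":
            stack.append(variables[instruction[1]])
        else:
            right, left = stack.pop(), stack.pop()
            stack.append(ARITHMETIC[op](left, right))
    return stack.pop()


code = compile_expr("a + b * 2")
print(code)  # [('LOAD', 'a'), ('LOAD', 'b'), ('PUSH', 2), ('MUL',), ('ADD',)]
print(execute(code, {"a": 3, "b": 4}))  # 11
```

The programmer writes `a + b * 2` and never thinks about operand order or intermediate storage; the compiler works out that `b * 2` must be computed first, which is the kind of hardware detail high-level languages hide.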

Operating Systems

Operating systems are the fundamental software that manages computer resources, including hardware and software interactions. Early operating systems were basic, often managing a single task at a time. The development of multitasking and multi-user operating systems allowed for simultaneous execution of multiple programs and user interactions, significantly enhancing computer efficiency. The evolution of operating systems mirrors the advancements in hardware.

Modern operating systems, such as Windows, macOS, and Linux, manage complex interactions between hardware components and software applications. Their sophisticated resource management and user interfaces have made computers more user-friendly and powerful.

Significant Software Milestones

- 1957: FORTRAN, one of the earliest high-level programming languages, was developed. This marked a significant step towards simplifying the process of writing computer programs.

- 1969: The Unix operating system was created, which significantly influenced the development of subsequent operating systems. Its modular design and portability have been instrumental in the evolution of modern systems.

- 1970s: The development of structured programming techniques further enhanced code organization and maintainability. Languages like Pascal and C emerged as pivotal tools in this era.

- 1980s: The rise of personal computers led to the development of user-friendly operating systems like MS-DOS and early versions of Macintosh OS. These systems made computers more accessible to a wider audience.

- 1990s: The internet’s explosive growth spurred the development of web-based applications, leading to the rise of languages like JavaScript and the expansion of graphical user interfaces (GUIs).

- 2000s and beyond: The rise of mobile and cloud computing prompted the development of languages and platforms suited to these environments, such as Swift for Apple’s mobile platforms and Go, which is widely used for cloud infrastructure.

Programming Language Comparison

| Language | Strengths | Weaknesses |
|---|---|---|
| Python | Easy to learn, versatile, extensive libraries, strong community support | Generally slower than compiled languages; less suitable for highly performance-critical code |
| Java | Platform-independent, robust, widely used in enterprise applications, extensive libraries | More verbose than many scripting languages; runtime (JVM) overhead in some scenarios |
| C++ | High performance, low-level control, widely used in systems programming | Steep learning curve; manual memory management makes errors easy to introduce |
| C# | Object-oriented, strongly typed, excellent for building applications on the .NET platform, good tooling support | Historically tied to Microsoft platforms; most useful within the .NET ecosystem |

The Digital Age and the Internet

The advent of the internet fundamentally reshaped the landscape of computing, accelerating its development and dramatically altering societal interactions. This era witnessed a paradigm shift from isolated computing systems to interconnected networks, enabling unprecedented global communication and information access. The integration of personal computers and networking technologies transformed the way people worked, learned, and interacted, leading to the digital revolution we experience today.

The internet, built upon a foundation of key technologies, facilitated this profound transformation.

It drove innovation in hardware and software design, as the ability to share information and collaborate across vast distances fostered an environment ripe for technological advancement. This era also saw a significant change in the way people interacted with computers, which became more accessible and user-friendly.

Influence on Computer Development and Usage

The internet acted as a powerful catalyst for computer development. The demands of online applications and communication drove the need for faster processing, more efficient networking, and greater storage capacity, fueling innovation in hardware and software and leading to more powerful and versatile computing systems. The rapid expansion of internet usage also spurred the development of user-friendly interfaces and applications, making computers accessible to a much wider range of users.

Transformation of Society

Personal computers and networking technologies profoundly transformed society. The ability to access information globally and communicate with others instantaneously fostered new forms of collaboration and knowledge sharing. Businesses benefited from increased efficiency and global reach, while individuals gained access to a vast pool of knowledge and resources. This connectivity led to the emergence of new social structures and the blurring of geographical boundaries, creating a more interconnected and globally aware world.

Key Technologies Fueling Internet Growth

Several crucial technologies were instrumental in the expansion and success of the internet. Packet switching, enabling efficient data transmission over networks, was a fundamental building block. The development of standardized protocols, like TCP/IP, facilitated seamless communication between different computer systems. The World Wide Web, with its intuitive hypertext system, revolutionized information access and presentation. These technologies, working in concert, enabled the widespread adoption and growth of the internet.
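The core idea of packet switching, splitting a message into numbered pieces that can travel independently and putting them back in order at the destination, can be sketched as follows. This is a deliberately simplified model: the `Packet` type and helper names are hypothetical, and real TCP numbers bytes rather than packets and also handles acknowledgments, retransmission, and congestion control.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this chunk within the original message
    total: int      # number of packets that make up the message
    payload: bytes


def packetize(message: bytes, size: int) -> list[Packet]:
    """Split a message into numbered packets of at most `size` bytes."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> bytes:
    """Restore the original message from packets received in any order."""
    ordered = sorted(packets, key=lambda p: p.seq)
    expected = ordered[0].total if ordered else 0
    if [p.seq for p in ordered] != list(range(expected)):
        raise ValueError("missing or duplicate packets")
    return b"".join(p.payload for p in ordered)


message = b"Packets may take different routes and arrive out of order."
packets = packetize(message, size=8)
random.shuffle(packets)  # simulate out-of-order arrival
print(reassemble(packets) == message)  # True
```

Because each packet carries its own sequence number, the network does not need a dedicated end-to-end circuit; links can be shared among many conversations, which is what made packet switching efficient.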

Growth of Internet Users Over Time

The rapid growth of internet users is a testament to its pervasive influence. The following table demonstrates the increasing adoption of the internet from its early stages to the present. Note that precise figures can vary based on the measurement methodology and data source.

| Year | Estimated Number of Internet Users (millions) |
|---|---|
| 1995 | 25 |
| 2000 | 350 |
| 2005 | 1000 |
| 2010 | 2000 |
| 2015 | 3000 |
| 2020 | 4500 |
| 2023 | 5000+ |

Artificial Intelligence


Artificial intelligence (AI) represents a significant leap in computing, aiming to give machines abilities that have traditionally required human intelligence. AI systems range from simple pattern recognition to complex problem-solving, and some research aims to model aspects of human reasoning. Its roots lie in the ambitious quest to create machines capable of understanding, learning, and acting autonomously. This pursuit has evolved through various stages, each marked by advancements in computational power and theoretical understanding.

Early Concepts and Inspirations

Early AI research drew inspiration from various fields. Philosophers pondered the nature of consciousness and the possibility of artificial minds. Neurologists explored the structure and function of the human brain, seeking to understand the mechanisms of thought. Early computing pioneers envisioned machines capable of performing complex tasks that traditionally required human intelligence. These diverse perspectives laid the groundwork for early AI concepts, including the Turing test, which Alan Turing proposed in 1950 as a way to judge whether a machine’s conversation can be distinguished from a human’s, and which became an influential, though debated, benchmark for machine intelligence.

Key Milestones in AI Algorithm Development

The development of AI algorithms has been marked by several key milestones. Rule-based systems, early expert systems, and machine learning algorithms such as decision trees and Bayesian networks were crucial steps in this progression. Neural networks, inspired by the structure of the human brain, date back to early models such as the perceptron of the late 1950s and later became a powerful tool for pattern recognition and data analysis.

The evolution of deep learning, a subset of machine learning, further revolutionized AI, enabling the processing of vast amounts of data and achieving remarkable results in various applications.
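The simplest neural network, a single perceptron, already shows the key idea of learning from data: adjust weights whenever a prediction is wrong. The sketch below uses the classic perceptron learning rule to learn the logical AND function; the function names, learning rate, and epoch count are illustrative choices, and modern deep learning instead stacks many layers and trains them with gradient-based methods.

```python
def predict(weights: list[float], bias: float, x: tuple[int, int]) -> int:
    """Fire (return 1) if the weighted sum of the inputs exceeds zero."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0


def train_perceptron(samples, epochs: int = 20, lr: float = 1.0):
    """Learn weights and a bias with the perceptron update rule."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            # Nudge each weight toward the correct answer in proportion to its input.
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias


AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
print([predict(weights, bias, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

A single perceptron can only separate classes with a straight line, which is why it cannot learn XOR; stacking layers of such units removes that limitation and is the starting point for deep learning.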

Impact of AI on Modern Life

AI’s impact on modern life is profound and multifaceted. From personalized recommendations on streaming services to medical diagnoses and self-driving cars, AI is rapidly transforming industries and everyday tasks. It enhances efficiency, improves decision-making, and creates new opportunities for innovation. The increasing availability of powerful computing resources, vast datasets, and sophisticated algorithms is driving the rapid growth of AI applications.

This has resulted in notable improvements in productivity, automation, and problem-solving across many sectors.

Types of AI and Potential Applications

| Type of AI | Potential Applications |
|---|---|
| Rule-based Systems | Expert systems in specific domains (e.g., medical diagnosis, financial analysis), automated decision-making in controlled environments. |
| Machine Learning | Personalized recommendations (e.g., online shopping, entertainment), spam filtering, fraud detection, predictive maintenance. |
| Deep Learning | Image recognition (e.g., object detection, facial recognition), natural language processing (e.g., chatbots, language translation), autonomous vehicles. |
| Natural Language Processing (NLP) | Machine translation, chatbots, sentiment analysis, text summarization. |
| Computer Vision | Image recognition, object detection, facial recognition, medical image analysis. |

The Future of Computing

The evolution of computing has been nothing short of remarkable, progressing from rudimentary mechanical aids to the sophisticated AI systems we see today, and current trends suggest that rapid advancement will continue. The future of computing hinges on several key emerging trends, with quantum computing potentially transforming how certain problems are solved and specialized hardware pushing the boundaries of computational capabilities.

The future of computing isn’t merely about faster speeds and greater storage; it’s about fundamentally changing how we interact with information and solve complex problems.

This shift will affect many sectors, from healthcare and scientific research to entertainment and everyday life. The potential applications are vast, and ongoing research and development are paving the way for computing capabilities well beyond what is possible today.

Emerging Trends Shaping the Future

Several significant trends are reshaping the computing landscape. These include the increasing integration of computing into everyday devices, the development of specialized hardware tailored for specific tasks, and the ongoing pursuit of greater efficiency and power.

- Internet of Things (IoT) Expansion: The proliferation of interconnected devices continues, with smart homes, wearables, and industrial sensors generating vast amounts of data. This data deluge demands advanced computing capabilities for processing, analysis, and security. Smart cities, for instance, rely on interconnected sensors and actuators to manage traffic flow, optimize energy consumption, and enhance public safety.

- Specialized Hardware Development: Hardware tailored for specific tasks, such as AI training or scientific simulations, is gaining prominence. Accelerators such as GPUs and FPGAs significantly speed up particular workloads, contributing to breakthroughs in fields like artificial intelligence and materials science. Chips designed specifically for machine learning, such as Google’s Tensor Processing Units (TPUs), can run tasks like image recognition and natural language processing far more efficiently than general-purpose processors.

- Quantum Computing Advancements: Quantum computers leverage principles of quantum mechanics, such as superposition and entanglement, and could solve certain problems far faster than classical computers. While still in their early stages, they may transform fields like drug discovery, materials science, and cryptography. Companies like IBM and Google are actively building quantum processors, though their practical applications are still being explored.

Predicted Future Developments in Computing

Predicting the precise trajectory of future computing advancements is inherently challenging. However, based on current trends and research, several potential developments can be anticipated.

| Category | Development | Impact |
|---|---|---|
| Processing Power | Increased efficiency of specialized hardware, emergence of quantum computing | Faster and more powerful computations, enabling complex simulations and breakthroughs in scientific discovery. |
| Data Management | Advanced data storage and retrieval systems, improved data security protocols | Efficient handling of vast datasets, ensuring data privacy and confidentiality in the face of increased digital information. |
| User Interaction | Intuitive interfaces and personalized experiences | Enhanced user engagement and more seamless interactions with computing systems, leading to improved user satisfaction and productivity. |
| Applications | New applications across diverse sectors (healthcare, finance, manufacturing) | Transformative impact across various industries, leading to improved efficiency, productivity, and innovation. |

Impact on Society

Computers have profoundly reshaped modern society, affecting nearly every aspect of daily life, from communication and commerce to education and entertainment. This transformative power comes with both positive and negative consequences. The digital revolution has created unprecedented opportunities, but also new challenges that require careful management and ethical consideration.

The pervasive presence of computers has altered the way we live, work, and interact with each other.

This influence spans from the mundane, like online shopping and banking, to the complex, such as scientific research and medical diagnoses. However, this integration is not without its drawbacks. Digital divides, algorithmic biases, and the potential for misuse of data are just a few of the concerns that need addressing in the context of a rapidly evolving digital landscape.

Diverse Applications Across Industries

Computers have become indispensable tools across a vast spectrum of industries, transforming operations and driving innovation. Their application extends well beyond the traditional office environment into nearly every sector. The table below illustrates the diverse uses of computers in various industries, showcasing their versatility and transformative power.

| Industry | Application |
|---|---|
| Healthcare | Electronic health records (EHRs), diagnostic tools, robotic surgery, drug discovery |
| Finance | Automated trading, fraud detection, risk management, customer service |
| Manufacturing | Computer-aided design (CAD), computer-aided manufacturing (CAM), robotics, inventory management |
| Retail | E-commerce platforms, inventory management systems, customer relationship management (CRM), point-of-sale systems |
| Education | Online learning platforms, educational software, virtual reality simulations, personalized learning experiences |
| Transportation | GPS navigation systems, traffic management systems, autonomous vehicles, logistics optimization |
| Agriculture | Precision farming techniques, automated irrigation systems, data analysis for crop yields |
| Entertainment | Video games, digital music, streaming services, animation, movie production |

Positive Impacts on Communities

The integration of computer technology has brought numerous benefits to many communities. These benefits are not limited to specific geographic locations or demographics, although they are unevenly distributed, and they affect diverse groups in meaningful ways.

- Increased Access to Information: The internet and readily available digital resources have democratized information access, allowing individuals from all walks of life to learn, connect, and participate in a globalized society. Libraries and educational institutions are utilizing digital resources to reach a wider audience.

- Enhanced Communication and Collaboration: Instantaneous communication via email, messaging apps, and video conferencing tools has fostered global collaboration, facilitated international business, and strengthened personal relationships. This has been especially important during crises and for people separated by long distances.

- Economic Growth and Opportunity: The rise of e-commerce, online businesses, and remote work options has created new economic opportunities and fostered entrepreneurship, including for people who previously had limited access to traditional markets.

Negative Impacts and Social Implications

While computers have revolutionized society, they also present significant challenges and social implications. The digital divide, for instance, creates disparities in access to technology and opportunities.

- Digital Divide: Unequal access to technology and internet connectivity exacerbates existing societal inequalities, potentially widening the gap between the privileged and the disadvantaged. Bridging this gap through targeted initiatives is crucial.

- Privacy Concerns: The collection and use of personal data raise significant privacy concerns, demanding robust data protection measures and ethical guidelines for data handling.

- Job Displacement: Automation driven by computer technology has led to job displacement in certain sectors, prompting the need for retraining and adaptation to new employment opportunities.

- Algorithmic Bias: Algorithms used in various applications, from loan applications to hiring processes, can perpetuate existing biases, leading to unfair or discriminatory outcomes.

Illustrative Examples

Tracing the evolution of computing reveals a fascinating journey, from rudimentary calculating tools to sophisticated artificial intelligence systems. This section delves into specific examples of influential computers, highlighting their key features and the impact they had on various industries. The development of these machines wasn’t solely the result of technological advancements but also depended heavily on the vision and contributions of key figures.

Landmark Computers and Their Impact

This section presents a chronological overview of pivotal computers, showcasing their contributions to different fields. Each entry provides insight into the computer’s design, functionality, and the wider societal impact it generated.

| Computer Name | Year | Key Features | Impact |
|---|---|---|---|
| ENIAC (Electronic Numerical Integrator and Computer) | 1946 | A groundbreaking general-purpose electronic digital computer. Its vacuum tube technology allowed for complex calculations. It occupied a large room and consumed significant power. | ENIAC’s ability to perform complex calculations rapidly revolutionized scientific research, enabling advancements in fields like ballistics and weather forecasting. It marked a crucial transition from mechanical to electronic computing. |
| UNIVAC I (Universal Automatic Computer I) | 1951 | One of the first commercially produced electronic digital computers and the first in the United States. It was designed for business and government data processing, with the U.S. Census Bureau as its first customer. It used magnetic tape rather than punched cards as its main input/output medium, a significant advancement over earlier systems. | UNIVAC I’s introduction signified a shift from specialized military use to wider commercial applications. It helped businesses automate tasks, enabling improved efficiency and decision-making. |
| IBM System/360 | 1964 | A family of mainframe computers sharing a single architecture, so software and data could move between models of different size and price. It used IBM’s Solid Logic Technology (SLT), hybrid circuit modules that were a step between discrete transistors and true integrated circuits. | The System/360’s compatibility across models fostered a significant market for software development. It dramatically increased the adoption of computer technology across diverse industries, from finance to manufacturing. |
| Cray-1 | 1976 | A supercomputer renowned for its processing speed. Its vector processing architecture, which applied a single instruction to sequences of numbers held in vector registers, allowed it to handle extremely complex computations. | The Cray-1 popularized vector supercomputing, enabling breakthroughs in fields requiring intensive calculations, such as weather modeling and scientific simulations. |
| Apple Macintosh | 1984 | A revolutionary personal computer known for its user-friendly graphical user interface (GUI). It brought the mouse and GUI, pioneered at Xerox PARC and on Apple’s earlier Lisa, to a mass-market computer and paved the way for the modern desktop computer. | The Macintosh made computing accessible to a broader user base. It had a major influence on personal computing and helped drive the growth of the software industry. |

Key Figures in Computer History

Several individuals played pivotal roles in shaping the course of computer development. Their ingenuity and dedication propelled the field forward.

- Ada Lovelace: A pioneering mathematician often regarded as the first computer programmer. Her 1843 notes on Charles Babbage’s Analytical Engine included a method for computing Bernoulli numbers on the machine and argued that such machines could go beyond simple calculation. Her insights were largely overlooked for many years.

- Alan Turing: A pivotal figure in theoretical computer science and artificial intelligence. His work on Turing machines and the Turing test laid the groundwork for modern computing and artificial intelligence.

- Grace Hopper: A remarkable computer scientist and U.S. Navy rear admiral known for her contributions to programming languages. In 1952 she developed the A-0 system, one of the first compilers (programs that translate human-readable code into machine code), and her FLOW-MATIC language strongly influenced COBOL.

- Bill Gates and Steve Jobs: These entrepreneurs revolutionized personal computing with their respective companies, Microsoft and Apple. Their vision and leadership brought computing to the masses.
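Turing’s abstract machine, a head reading and writing symbols on an unbounded tape according to a finite table of rules, is simple enough to simulate directly. The sketch below runs a hypothetical three-rule machine that adds 1 to a binary number; the rule format and state names are illustrative and do not follow Turing’s original 1936 notation.

```python
def run_turing_machine(tape: str, rules: dict, state: str = "carry",
                       blank: str = "_", max_steps: int = 10_000) -> str:
    """Run from the rightmost symbol until the machine enters the 'halt' state."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = len(tape) - 1
    for _ in range(max_steps):
        if state == "halt":
            used = range(min(cells), max(cells) + 1)
            return "".join(cells.get(i, blank) for i in used).strip(blank)
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += {"L": -1, "R": 1, "N": 0}[move]
    raise RuntimeError("step limit reached without halting")


# (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT = {
    ("carry", "1"): ("0", "L", "carry"),  # 1 + 1 = 0, carry continues left
    ("carry", "0"): ("1", "N", "halt"),   # 0 + 1 = 1, done
    ("carry", "_"): ("1", "N", "halt"),   # past the left end: new leading 1
}

print(run_turing_machine("1011", INCREMENT))  # 1100
```

The striking point of Turing’s model is that this tiny loop is fully general: any computation a modern computer performs can, in principle, be expressed as a (much larger) rule table of this kind.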

Impact on Industries

The impact of computer technology extends across numerous industries. From streamlining business processes to enhancing scientific discovery, the applications are extensive.

- Finance: Computer systems have automated trading, risk assessment, and financial reporting, leading to greater efficiency and reduced errors.

- Healthcare: Medical imaging, diagnostic tools, and electronic health records have transformed patient care and medical research.

- Manufacturing: Computer-aided design (CAD) and computer-aided manufacturing (CAM) have automated manufacturing processes, leading to increased productivity and quality.

- Entertainment: Computer graphics, video games, and digital music have revolutionized the entertainment industry, creating new forms of artistic expression and interactive experiences.

Summary: From Abacus to AI


In conclusion, the history of computers, from the abacus to AI, is a captivating story of innovation and progress. It demonstrates how human ingenuity has led to increasingly powerful and sophisticated tools that have fundamentally altered how we live, work, and interact. The journey continues, with exciting possibilities for the future of computing.
