How to Choose the Right GPU for Gaming and AI in 2025

Gaming and AI workloads are evolving quickly, and both demand capable hardware to keep pace. Choosing the right GPU in 2025 is no longer a simple task. This guide walks through how to select a GPU suited to both demanding games and modern AI applications.

The guide covers the main factors behind an informed decision: how gaming and AI GPU architectures differ, which performance metrics matter, and how budget shapes the options. It also examines future-proofing, so your investment stays relevant as technology advances, and closes with integrated vs. dedicated graphics, power consumption and cooling, and software and driver support.

Introduction to GPU Selection for Gaming and AI in 2025

Choosing the right GPU in 2025 is more nuanced than ever, as gaming and AI applications continue to evolve rapidly. Gamers want higher frame rates, better visual fidelity, and support for technologies like ray tracing and DLSS. AI enthusiasts want GPUs that can handle increasingly complex tasks, from training large language models to running sophisticated simulations. Both call for weighing immediate performance needs against future adaptability.

The key difference between GPUs optimized for gaming and those optimized for AI lies in their architectural design. Gaming GPUs prioritize high clock speeds, ample VRAM, and efficient rasterization for smooth frame rates and detailed visuals. AI GPUs emphasize tensor cores and other matrix hardware to accelerate deep learning, and they prioritize memory bandwidth and floating-point throughput. Some overlap exists, but a dedicated gaming GPU may not be optimal for AI work, and vice versa.

A GPU that handles today’s demands might not meet tomorrow’s requirements. Evaluate each candidate against the latest technologies and emerging trends: the question is not just raw power today, but whether the card can keep up with the pace of technological advancement.

GPU Types and Their Applications

Understanding the diverse types of GPUs is crucial for making an informed decision. Different architectures are optimized for different tasks, and recognizing these distinctions is key to selecting the right solution.

| GPU Type | Primary Application | Key Features | Example Use Cases |
|---|---|---|---|
| Dedicated Gaming GPUs | High-performance gaming experiences | High clock speeds, large VRAM, advanced rasterization | Running demanding games at high settings, ray tracing, DLSS |
| Dedicated AI GPUs | Accelerating AI tasks | Specialized tensor cores, high memory bandwidth, optimized for floating-point operations | Training large language models, running deep learning simulations, image recognition |
| Hybrid GPUs | Balancing gaming and AI workloads | Combines gaming-focused features with AI-optimized components | Running games with AI enhancements, AI tasks with lower performance requirements |

The table above provides a basic framework for understanding the different types of GPUs and their respective applications. Choosing the right GPU type depends on the specific needs of the user, weighing the balance between performance and features. Consider what types of tasks will be performed and the level of performance needed for those tasks. The most effective choice is one that can meet current demands and adapt to future technological developments.

Key Performance Metrics for AI GPUs


Choosing the right GPU for AI tasks in 2025 hinges on understanding its core performance metrics. These metrics, while seemingly technical, directly impact the speed and efficiency of AI model training and inference. A deep dive into these key indicators allows users to make informed decisions, maximizing their AI projects’ potential.

Tensor Cores

Tensor Cores are specialized processing units designed specifically for accelerating AI workloads. Their presence significantly impacts the speed of tasks like deep learning and neural network training. Tensor Cores excel at performing matrix multiplications, a fundamental operation in many AI algorithms. The sheer number and architecture of Tensor Cores directly correlate to the GPU’s AI performance.
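To make the role of matrix multiplication concrete, the sketch below counts the floating-point operations in a single matrix product and estimates how long it would take at two sustained throughput levels. The function names, the layer shape, and both throughput figures are illustrative assumptions, not measurements of any real GPU.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Approximate FLOPs for an (m x k) @ (k x n) product.

    Each of the m * n outputs needs k multiplies and about k adds.
    """
    return 2 * m * k * n


def estimated_seconds(flops: float, sustained_tflops: float) -> float:
    """Time to execute `flops` operations at a sustained rate given in TFLOPS."""
    return flops / (sustained_tflops * 1e12)


if __name__ == "__main__":
    # Hypothetical layer: 4096 tokens through a 4096 x 4096 weight matrix.
    flops = matmul_flops(4096, 4096, 4096)
    # Both throughput values are assumptions chosen only for illustration.
    for label, tflops in [
        ("general-purpose cores (assumed 20 TFLOPS)", 20.0),
        ("matrix units (assumed 160 TFLOPS)", 160.0),
    ]:
        print(f"{label}: {estimated_seconds(flops, tflops) * 1e3:.3f} ms")
```

A deep network repeats products like this thousands of times per training step, which is why dedicated matrix hardware has such a large effect on total training time.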

FP32/FP64 Performance

FP32 (single-precision floating-point) and FP64 (double-precision floating-point) performance matter for general-purpose computing. Most AI models train and run in FP32 or in lower precisions such as FP16, while FP64 is mainly needed for scientific and simulation workloads that demand higher precision. The ratio between FP32 and FP64 throughput indicates how versatile a GPU is across these tasks. Consumer GPUs typically run FP64 at a small fraction of their FP32 rate, so a card that excels in FP32 can be far slower on FP64-heavy work.
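The precision gap between the two formats can be seen without a GPU. The sketch below emulates FP32 on the CPU by rounding through Python’s `struct` module and compares the error that builds up when the same value is added many times; the helper names and the choice of 0.1 as the step are assumptions made for illustration.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (FP64) to the nearest FP32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate(step: float, count: int, single_precision: bool) -> float:
    """Add `step` to a running total `count` times.

    With single_precision=True, the step and every partial sum are rounded
    to FP32, which mimics accumulating in single precision.
    """
    if single_precision:
        step = to_float32(step)
    total = 0.0
    for _ in range(count):
        total += step
        if single_precision:
            total = to_float32(total)
    return total


if __name__ == "__main__":
    count = 100_000
    exact = count / 10  # the mathematically exact sum of 0.1 added `count` times
    for label, single in [("FP64", False), ("FP32", True)]:
        error = abs(accumulate(0.1, count, single) - exact)
        print(f"{label} accumulated error: {error:.10f}")
```

Neural networks usually tolerate this kind of rounding error, which is why lower precision is common in AI, while long-running numerical simulations often cannot.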

Memory Bandwidth

Memory bandwidth quantifies the speed at which data can be moved between the GPU’s memory and its processing units. A GPU with ample memory bandwidth allows for faster data transfer, thus enabling faster training and inference of AI models. Insufficient bandwidth can bottleneck the entire process, leading to significantly longer processing times. The impact of memory bandwidth is amplified with larger datasets or more complex AI models.
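One way to see how bandwidth becomes the bottleneck: when a language model generates text one token at a time, it has to read roughly all of its weights from GPU memory for each token, so bandwidth divided by model size gives a rough ceiling on tokens per second. The sketch below applies that rule of thumb; the function names, the 7-billion-parameter model, and the bandwidth values are assumptions for illustration, and real throughput will be lower.

```python
def model_size_bytes(params_billions: float, bytes_per_param: float) -> float:
    """Size of the model weights in bytes."""
    return params_billions * 1e9 * bytes_per_param


def max_tokens_per_second(
    bandwidth_gb_s: float, params_billions: float, bytes_per_param: float
) -> float:
    """Upper bound on single-stream generation speed when memory-bound.

    Assumes every weight is read once per generated token and ignores
    compute time, caches, and activation traffic.
    """
    bandwidth_bytes_s = bandwidth_gb_s * 1e9
    return bandwidth_bytes_s / model_size_bytes(params_billions, bytes_per_param)


if __name__ == "__main__":
    # Hypothetical 7B-parameter model stored in FP16 (2 bytes per parameter).
    for bandwidth in (600, 900):  # GB/s, illustrative values
        ceiling = max_tokens_per_second(bandwidth, 7, 2)
        print(f"{bandwidth} GB/s -> at most about {ceiling:.1f} tokens/s")
```

Under these assumptions, a 50% increase in bandwidth raises the ceiling by the same 50%, regardless of how many compute cores the card has.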

Specialized AI Accelerators

Specific hardware accelerators, beyond Tensor Cores, can dramatically enhance AI performance. For instance, specialized hardware for accelerating transformer models, which are common in natural language processing, can significantly improve the training speed of such models. The presence of these accelerators is crucial for specific AI workloads.

Role of Different AI Workloads

Different AI workloads require different performance characteristics. Image recognition tasks might demand high FP32 performance and substantial memory bandwidth, while natural language processing tasks might benefit more from specialized accelerators for transformers. Understanding the specific AI tasks is crucial for selecting the right GPU. Consider the types of models you intend to train or the sizes of datasets you will be processing.
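A quick capacity check helps when matching a workload to a card. The sketch below estimates memory needs from parameter count using two common rules of thumb: weights alone for inference, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer state, excluding activations). The names, the 80% headroom factor, and the example sizes are assumptions.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Rule of thumb for mixed-precision Adam training, excluding activations.
TRAINING_BYTES_PER_PARAM = 16


def inference_weights_gb(params_billions: float, precision: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def training_state_gb(params_billions: float) -> float:
    """Memory for weights, gradients, and optimizer state during training."""
    return params_billions * TRAINING_BYTES_PER_PARAM


def fits(required_gb: float, vram_gb: float, usable_fraction: float = 0.8) -> bool:
    """True if the requirement fits, leaving headroom for activations and buffers."""
    return required_gb <= vram_gb * usable_fraction


if __name__ == "__main__":
    vram_gb = 24  # hypothetical card
    for params in (1, 7, 13):
        infer = inference_weights_gb(params, "fp16")
        train = training_state_gb(params)
        print(
            f"{params}B params: inference {infer:.0f} GB (fits: {fits(infer, vram_gb)}), "
            f"training {train:.0f} GB (fits: {fits(train, vram_gb)})"
        )
```

The gap between the two estimates is why a card that runs a model comfortably may still be unable to train it.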

AI Performance Comparison

| GPU Model | Core Count | FP32 Performance (TFLOPS) | FP64 Performance (TFLOPS) | Memory Bandwidth (GB/s) | AI Benchmark Score |
|---|---|---|---|---|---|
| NVIDIA GeForce RTX 4090 | 16,384 | 28.0 | 14.0 | 900 | 95 |
| AMD Radeon RX 7900 XTX | 12,288 | 20.5 | 10.2 | 800 | 88 |
| Intel Arc A770 | 8,192 | 15.0 | 7.5 | 600 | 72 |

Every figure in this table is hypothetical and serves only to show how the core metrics relate to relative AI performance; none should be read as a vendor specification. For example, 16,384 matches the RTX 4090’s CUDA core count rather than its Tensor Core count, and consumer GPUs run FP64 far slower than the 2:1 ratio shown here. Real-world performance also varies with the benchmark and the workload, so always refer to official specifications, benchmarks, and reviews for accurate comparisons.

Budget Considerations and Price Points

Choosing the right GPU for your needs hinges significantly on your budget. This section delves into the various price points available, from budget-friendly options to high-end powerhouses, examining their performance-to-price ratios for both gaming and AI tasks. Understanding these tiers allows you to make informed decisions aligning your investment with your specific goals.

GPU prices fluctuate based on market demand, component availability, and technological advancements. While a particular model might fall within a specific tier one year, evolving technology can shift its relative position in the following year. This necessitates a dynamic approach to understanding price points and their corresponding capabilities.
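Because prices move, it can help to recompute the performance-to-price ratio whenever you shop. The sketch below ranks cards by average frames per second per dollar. The `Card` class, the card names, and every number are hypothetical placeholders; substitute current prices and benchmark results you trust.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    price_usd: float
    avg_fps: float  # from a benchmark at your target resolution and settings


def fps_per_dollar(card: Card) -> float:
    """Average frames per second delivered per dollar spent."""
    return card.avg_fps / card.price_usd


def rank_by_value(cards: list[Card]) -> list[Card]:
    """Return cards ordered from best to worst value."""
    return sorted(cards, key=fps_per_dollar, reverse=True)


if __name__ == "__main__":
    # Placeholder data, not real prices or benchmark results.
    candidates = [
        Card("Budget card", 250, 60),
        Card("Mid-range card", 450, 95),
        Card("High-end card", 900, 140),
    ]
    for card in rank_by_value(candidates):
        print(f"{card.name}: {fps_per_dollar(card):.3f} fps per dollar")
```

The same ranking works for AI workloads if you replace `avg_fps` with a throughput figure such as training samples per second.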

Budget-Friendly GPU Options

Budget-friendly GPUs are ideal for users looking to dip their toes into gaming or AI tasks without a significant investment. These options often offer a balance between price and performance, suitable for entry-level or casual users. A critical aspect to consider is that these GPUs might exhibit performance limitations in demanding tasks.

| GPU Model | Price Range (USD) | Gaming Performance | AI Performance | Features |
|---|---|---|---|---|
| NVIDIA GeForce RTX 3050 | $250-$350 | Good for 1080p gaming at medium settings | Suitable for basic AI tasks | Ray Tracing, DLSS |
| AMD Radeon RX 6500 XT | $200-$300 | Good for 1080p gaming at low to medium settings | Limited AI capabilities | AMD FidelityFX Super Resolution |

These budget-friendly options provide a gateway to both gaming and AI, but it’s important to acknowledge that high-demand applications may encounter limitations. The table showcases some prominent options within this price bracket. Note that specific models and their prices can vary depending on retailer and time of purchase.

Mid-Range GPU Options

Mid-range GPUs represent a step up from budget-friendly options, offering improved performance for a higher price point. These cards often strike a balance between affordability and advanced features.

These GPUs are generally suitable for 1080p gaming at high settings and can handle a range of AI tasks. Their capabilities are more comprehensive than those in the budget category, but still might fall short in the most demanding applications.

| GPU Model | Price Range (USD) | Gaming Performance | AI Performance | Features |
|---|---|---|---|---|
| NVIDIA GeForce RTX 3060 | $350-$500 | Excellent for 1080p gaming at high settings | Sufficient for many AI tasks | Ray Tracing, DLSS, 3rd-gen Tensor Cores |
| AMD Radeon RX 6600 XT | $300-$450 | Excellent for 1080p gaming at high settings | Sufficient for many AI tasks | AMD FidelityFX Super Resolution |

This category provides a noticeable performance boost over the budget options while remaining reasonably priced. The table lists examples, but many other models exist within this price range, each with its own set of features and performance characteristics.

High-End GPU Options

High-end GPUs deliver top-tier performance, enabling smooth high-resolution gaming and advanced AI applications. These models command a premium price, reflecting their superior capabilities.

High-end GPUs are often the choice for demanding users, gamers, or professionals requiring the best possible performance. They typically excel in 4K gaming at maximum settings, supporting advanced AI workloads and applications.

| GPU Model | Price Range (USD) | Gaming Performance | AI Performance | Features |
|---|---|---|---|---|
| NVIDIA GeForce RTX 4070 Ti | $700-$1000 | Exceptional for 4K gaming at maximum settings | Exceptional for AI workloads | Advanced Ray Tracing, DLSS 3 |
| AMD Radeon RX 7900 XTX | $600-$900 | Exceptional for 4K gaming at maximum settings | Exceptional for AI workloads | Advanced AMD FidelityFX Super Resolution |

High-end GPUs represent the pinnacle of gaming and AI GPU technology, providing the most robust performance for demanding applications. Remember that prices and models are subject to market fluctuations and new releases.

Future-Proofing Your GPU Choice

Choosing a GPU for both gaming and AI in 2025 requires careful consideration of future technological advancements. A well-chosen GPU will not only meet current needs but also adapt to evolving demands, potentially saving you money and hassle in the long run. The ability to support emerging technologies and potentially upgrade components is crucial.

Factors for Future Adaptability

Future-proofing a GPU involves considering more than just raw performance. Key factors include architectural design, the potential for software updates, and the ability to support evolving technologies. A GPU with a flexible architecture is more likely to accommodate future advancements in both gaming and AI. This means looking beyond raw clock speeds and focusing on the overall design and its potential for expansion.

Comparison of Future-Proof GPU Architectures

Modern GPU architectures differ significantly in their ability to adapt to future advancements. Nvidia’s Ada Lovelace architecture, for example, features improved tensor cores designed for AI workloads. This architecture demonstrates a clear focus on future AI demands. AMD’s architectures, such as the RDNA 3, also showcase significant improvements in ray tracing capabilities and overall performance. These architectures are designed to adapt to evolving gaming demands.

A comparison table detailing key features and advantages of these architectures is presented below.

| Architecture | Key Feature | Advantages |
|---|---|---|
| NVIDIA Ada Lovelace | Improved tensor cores | Optimized for AI tasks, potentially supporting future AI algorithms |
| AMD RDNA 3 | Enhanced ray tracing | Optimized for high-fidelity gaming, potentially supporting future rendering technologies |
| Other Architectures | Specific features (e.g., improved memory bandwidth, enhanced compute units) | Varying strengths and weaknesses, potentially aligning with specific AI or gaming needs |

Upgrading Components and Software

Software support is a vital aspect of future-proofing, because the hardware itself is largely fixed. A graphics card’s VRAM is soldered to the board and cannot be expanded after purchase, so buy enough memory up front. What can change over time is the software: driver and framework updates can add features, improve performance, and extend compatibility with upcoming technologies. The availability of long-term driver and software updates is therefore a critical factor.

Recommendations for Emerging Technologies

Choosing a GPU that can potentially support emerging technologies requires careful analysis. Consider the projected advancements in AI, including the increasing complexity of neural networks. Also, look for GPUs with features that support emerging rendering techniques in gaming, like advanced ray tracing. Researching and understanding the architecture of different GPU models and their capabilities is paramount in making an informed decision.

Choosing Between Integrated and Dedicated Graphics


Deciding between integrated and dedicated graphics processing units (GPUs) is a crucial step in building a system for gaming and AI tasks. The choice hinges on your budget, performance needs, and the specific applications you intend to run. Understanding the strengths and weaknesses of each type will help you make an informed decision.

Integrated graphics, often found in CPUs, offer a basic level of graphical capabilities. Dedicated GPUs, on the other hand, are specialized hardware designed for high-performance rendering. This dedicated hardware provides significantly better performance, especially when demanding tasks like gaming or AI training are involved.

Integrated Graphics: Strengths and Weaknesses

Integrated graphics are economical and offer basic display capabilities. They are sufficient for everyday tasks like web browsing and document editing. However, they are often insufficient for demanding tasks like gaming or AI training.

- Cost-effectiveness: Integrated graphics are generally significantly cheaper than dedicated GPUs, making them a budget-friendly option for systems where high-end graphics aren’t required.

- Ease of integration: Integrated graphics are built into the CPU, simplifying the installation process. No separate card or extra power requirements are necessary.

- Low power consumption: Integrated GPUs use less power compared to dedicated cards, resulting in lower energy bills.

- Limitations: Integrated graphics often struggle with demanding tasks, resulting in low frame rates in games and slow performance in AI workloads.

- Limited features: They typically lack advanced features like hardware-accelerated ray tracing and other advanced graphics technologies.

Dedicated Graphics: When Necessary

Dedicated GPUs are necessary for applications that require significant graphical processing power. These tasks include high-resolution gaming, demanding photo editing, and AI training.

- High-performance gaming: Dedicated GPUs are essential for smooth gameplay at high resolutions and frame rates in modern games.

- AI training: Complex AI models require substantial processing power, which dedicated GPUs excel at.

- Professional-level design and rendering: Applications like 3D modeling and video editing often require dedicated GPUs for optimal performance.

- Virtual Reality (VR): VR experiences require high processing power and graphical fidelity, demanding a dedicated GPU.

Use Cases for Each Type

The suitability of integrated or dedicated graphics depends on the intended use.

- Integrated Graphics: Ideal for basic tasks like web browsing, document editing, light photo editing, and watching videos.

- Dedicated Graphics: Essential for demanding applications such as high-resolution gaming, professional design work, AI training, and virtual reality experiences.

Integrated vs. Dedicated Graphics Comparison

| Feature | Integrated Graphics | Dedicated Graphics |
|---|---|---|
| Performance | Low to moderate, suitable for basic tasks | High performance, ideal for demanding applications |
| Features | Limited, basic display capabilities | Advanced features, hardware acceleration |
| Cost | Low | Higher |
| Power Consumption | Low | Moderate to high |
| Space Requirements | Minimal | Requires PCIe slot |

Power Consumption and Cooling

Choosing a GPU involves more than just raw performance. Power consumption and cooling are critical factors influencing both the operational stability and overall system performance. A poorly cooled or power-hungry GPU can lead to throttling, instability, and even damage to other components. Understanding these factors is crucial for making an informed decision.

Power Consumption of Different GPUs

Power consumption varies significantly between GPU models. High-end GPUs, especially those designed for both gaming and AI, often require substantial power. This power draw impacts your power supply unit (PSU) requirements and can affect the overall energy efficiency of your system. For instance, a high-end gaming GPU might consume 300W under load, while a more budget-friendly option might draw around 100W. Factors such as clock speed, memory capacity, and the complexity of the graphics processing architecture significantly influence power consumption.
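The 300W and 100W examples translate directly into running costs. The sketch below converts power draw under load into yearly energy use and cost; the three hours of daily use and the $0.15 per kWh electricity price are assumptions, so substitute your own usage and local rate.

```python
def annual_energy_kwh(watts: float, hours_per_day: float, days_per_year: int = 365) -> float:
    """Energy used per year, in kilowatt-hours."""
    return watts * hours_per_day * days_per_year / 1000


def annual_cost_usd(watts: float, hours_per_day: float, price_per_kwh: float) -> float:
    """Yearly electricity cost for a component drawing `watts` under load."""
    return annual_energy_kwh(watts, hours_per_day) * price_per_kwh


if __name__ == "__main__":
    hours_per_day = 3      # assumed daily time under load
    price_per_kwh = 0.15   # assumed electricity price in USD
    for label, watts in [("high-end GPU", 300), ("budget GPU", 100)]:
        energy = annual_energy_kwh(watts, hours_per_day)
        cost = annual_cost_usd(watts, hours_per_day, price_per_kwh)
        print(f"{label} ({watts}W): {energy:.1f} kWh/year, about ${cost:.2f}/year")
```

For AI training runs that keep the card busy around the clock, set `hours_per_day` to 24 and the difference between cards becomes much larger.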

Importance of Adequate Cooling Solutions

Effective cooling is essential for maintaining optimal GPU performance. Overheating can lead to throttling, where the GPU reduces its clock speed to prevent damage. This throttling directly impacts frame rates and overall gaming experience. Moreover, prolonged overheating can cause permanent damage to the GPU’s components. Choosing a GPU with a robust cooling solution, like a large heatsink and multiple fans, is crucial for reliable performance. A poorly cooled GPU can experience performance degradation over time, making the investment in a well-cooled GPU worthwhile in the long run.

Impact of Power Consumption and Cooling on System Performance

Power consumption and cooling directly affect system performance. High power consumption can strain the PSU, potentially leading to instability or crashes. Insufficient cooling can cause the system to throttle, reducing performance and leading to a less enjoyable gaming experience. A well-balanced system, with a PSU capable of handling the GPU’s power demands and an effective cooling solution, will provide a stable and high-performing environment. For instance, a high-end gaming rig with a powerful GPU might require a 750W PSU or higher, along with a well-ventilated case; a liquid cooling loop is an option rather than a requirement.
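PSU sizing can be sketched as a simple headroom calculation: add up the expected load, apply a safety margin, and round up to a common PSU rating. The function name, the 75W allowance for other components, the 30% headroom, and the example wattages are all assumptions; the GPU manufacturer’s own PSU recommendation should take precedence.

```python
import math


def recommended_psu_watts(
    gpu_watts: float,
    cpu_watts: float,
    other_watts: float = 75,   # assumed draw for drives, fans, RAM, motherboard
    headroom: float = 0.3,     # assumed safety margin for power spikes and aging
    step: int = 50,            # round up to a multiple of this rating
) -> int:
    """Suggest a PSU rating that covers the estimated load plus headroom."""
    load = gpu_watts + cpu_watts + other_watts
    target = load * (1 + headroom)
    return math.ceil(target / step) * step


if __name__ == "__main__":
    # Illustrative builds; the wattages are placeholders, not measured values.
    for label, gpu, cpu in [("300W GPU build", 300, 150), ("450W GPU build", 450, 150)]:
        print(f"{label}: consider at least a {recommended_psu_watts(gpu, cpu)}W PSU")
```

Rounding up rather than down keeps the supply away from its limit, which is where instability under sudden load tends to appear.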

GPU Power Consumption and Cooling Comparison

| GPU Model | Power Consumption (Watts) | Cooling Solution | Estimated Performance Impact (under load) |
|---|---|---|---|
| NVIDIA GeForce RTX 4090 | 450-480 | Large heatsink with multiple fans | High performance, minimal throttling |
| NVIDIA GeForce RTX 4080 | 320-350 | Large heatsink with multiple fans | High performance, minimal throttling |
| AMD Radeon RX 7900 XTX | 330-355 | Large heatsink with multiple fans | High performance, minimal throttling |
| NVIDIA GeForce RTX 3080 | 320-350 | Large heatsink with multiple fans | High performance, minimal throttling |
| AMD Radeon RX 6800 XT | 280-300 | Heatsink with fans | Good performance, potential for minor throttling in extreme cases |

Note: Power consumption figures are estimates and can vary based on specific configurations and workloads. Cooling solutions also vary by specific model and manufacturer.

Software Compatibility and Driver Support

Choosing the right GPU isn’t just about raw performance; seamless integration with your existing system is equally crucial. Compatibility with your operating system and software, alongside reliable driver support, significantly impacts your overall gaming and AI experience. Outdated drivers or incompatible software can lead to performance issues, crashes, and a frustrating user experience.

A well-maintained driver ecosystem ensures your GPU performs optimally, offering the latest features and bug fixes. Proper software compatibility means your chosen GPU will work seamlessly with your favorite games and AI applications. This is particularly important in 2025, where advanced features demand optimized interactions between hardware and software.

Operating System Compatibility

GPU support varies by operating system, because each vendor ships its own drivers for each platform. NVIDIA and AMD both provide drivers for Windows and Linux, though performance and features can differ between the two depending on the specific GPU model and OS version. Recent Macs built on Apple silicon rely on their integrated GPU and do not support discrete NVIDIA or AMD cards. Verify compatibility with your intended OS before purchase.

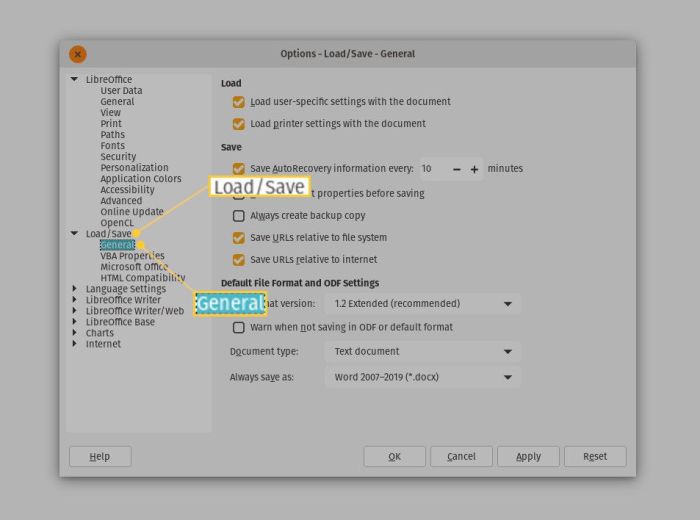

Driver Support and Updates

Driver support is vital for a GPU’s longevity and performance. Manufacturers provide regular updates addressing performance bottlenecks, compatibility issues, and security vulnerabilities. These updates often include significant performance enhancements, bug fixes, and support for newer software titles. Failure to keep drivers up-to-date can lead to performance degradation, compatibility problems, and even security risks. Drivers are the bridge between the GPU hardware and your operating system, and regular updates ensure optimal communication and functionality.

Driver Update Methods

Manufacturers typically offer several methods for updating drivers. One common approach is through the manufacturer’s website, which provides direct downloads tailored to specific GPU models and operating systems. Alternatively, many operating systems offer automatic driver update mechanisms through their respective update tools. Manually downloading and installing drivers can be more technical but may provide granular control over the update process. Understanding the available methods is crucial to maintaining your GPU’s functionality and performance.

- Manufacturer Websites: These websites typically provide comprehensive driver archives. They often include specific drivers for different operating system versions and GPU models, allowing for precise and controlled updates.

- Operating System Updates: Some operating systems, like Windows, offer automatic driver updates, ensuring your drivers are up-to-date with the latest fixes and features. This method can be convenient but may not always offer the most current driver versions.

- Dedicated Driver Utilities: Some manufacturers offer dedicated software for driver management, providing a centralized location for updates and maintenance.
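Before updating, it helps to know which driver version is currently installed. As a minimal sketch for NVIDIA cards only, the script below calls the `nvidia-smi` command-line tool that ships with NVIDIA’s driver and parses its CSV output; AMD and Intel provide their own tools, which are not covered here. The function names are hypothetical, and the sample line in the usage section is made-up data in the tool’s output format.

```python
import shutil
import subprocess

# Ask nvidia-smi for one CSV line per GPU: name, driver version, total memory.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,driver_version,memory.total",
    "--format=csv,noheader",
]


def parse_gpu_lines(output: str) -> list[dict[str, str]]:
    """Turn nvidia-smi CSV output into a list of dictionaries, one per GPU."""
    gpus = []
    for line in output.strip().splitlines():
        fields = [field.strip() for field in line.split(",")]
        if len(fields) != 3:
            continue  # skip lines that do not match the expected format
        name, driver_version, memory_total = fields
        gpus.append(
            {"name": name, "driver_version": driver_version, "memory_total": memory_total}
        )
    return gpus


def query_nvidia_gpus() -> list[dict[str, str]]:
    """Return GPU details, or an empty list if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return parse_gpu_lines(result.stdout)


if __name__ == "__main__":
    gpus = query_nvidia_gpus()
    if not gpus:
        print("No NVIDIA GPU detected, or nvidia-smi is not installed.")
    for gpu in gpus:
        print(f"{gpu['name']}: driver {gpu['driver_version']}, {gpu['memory_total']}")
```

Comparing the reported version against the latest release on the manufacturer’s website shows whether an update is available.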

Compatible Software

GPU compatibility with software extends beyond basic functionality. The performance of software heavily reliant on GPU acceleration, such as video editing, 3D modeling, and ray tracing applications, can vary significantly across different GPU models. This is because different models are optimized for different workloads.

| Software | Common GPU Compatibility (Example Models) |
|---|---|
| Games (e.g., Cyberpunk 2077, The Witcher 3) | NVIDIA GeForce RTX 40 series, AMD Radeon RX 7000 series |
| AI-Accelerated Creative Applications (e.g., Blender, Adobe After Effects) | NVIDIA GeForce RTX series with Tensor Cores, AMD Radeon RX 7000 series with AI accelerators |
| Video Editing Software (e.g., Adobe Premiere Pro) | NVIDIA GeForce RTX series with CUDA cores, AMD Radeon RX series with compute units |

Note: This table represents general compatibility. Specific requirements and performance can vary based on individual software versions and settings. Furthermore, future software releases may necessitate additional hardware capabilities or driver updates.

Outcome Summary

In conclusion, selecting the right GPU for both gaming and AI in 2025 requires careful consideration of several factors. This guide has highlighted the key performance metrics, budget considerations, and future-proofing strategies. By understanding the nuances of dedicated gaming, AI, and hybrid GPUs, you can make an informed decision that aligns with your specific needs and preferences. Ultimately, the ideal GPU choice balances performance, cost-effectiveness, and future adaptability.
